This is documentation for Rasa X/Enterprise Documentation v1.2.x, which is no longer actively maintained.

For up-to-date documentation, see the latest version (1.4.x).

Share Your Assistant

Why?

People will always surprise you with what they say. Too many teams spend months designing conversations that will never happen. By sharing your assistant with test users early on in the development process, you can orient your assistant’s behavior toward what real users say, rather than what you think they might say.

When?

Give your prototype to users to test as early as possible. As soon as you have a few basic things working, share your assistant with a few users from outside your team to see what they make of it. As you add skills and data to your assistant, share it with more test users, or ask users who have tested it previously to talk to it again.

How?

There are two ways to talk to your assistant using Rasa Enterprise: the Share your Bot link and the Talk to your Bot screen. You can also share your assistant on external channels like Facebook Messenger. All conversations, whether they happen within Rasa Enterprise or on any channel you have connected, show up in the Conversations screen. There you can see which channel each conversation came from and review it.

Share Your Bot

The Share your Bot link provides a simple UI where anyone with the link can talk to the assistant. Use the Share your Bot link to share your assistant with test users who have no inside knowledge of it.

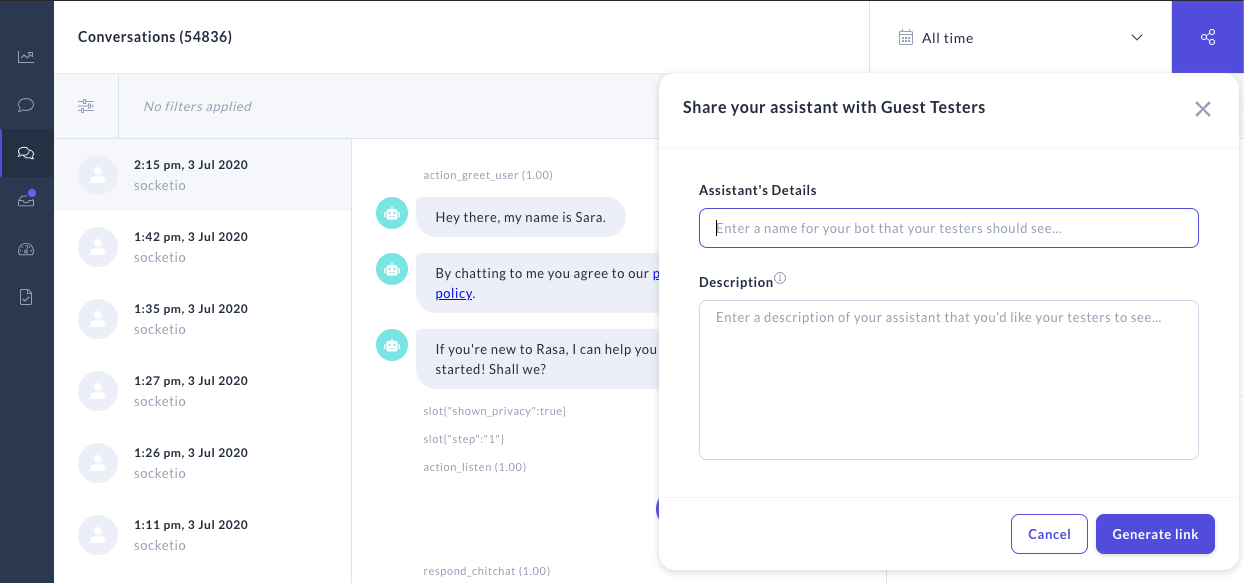

To share your assistant with someone, click the Share with guest testers icon on the Conversations screen. In the popup, you can edit what testers will see.

Talk to Your Bot

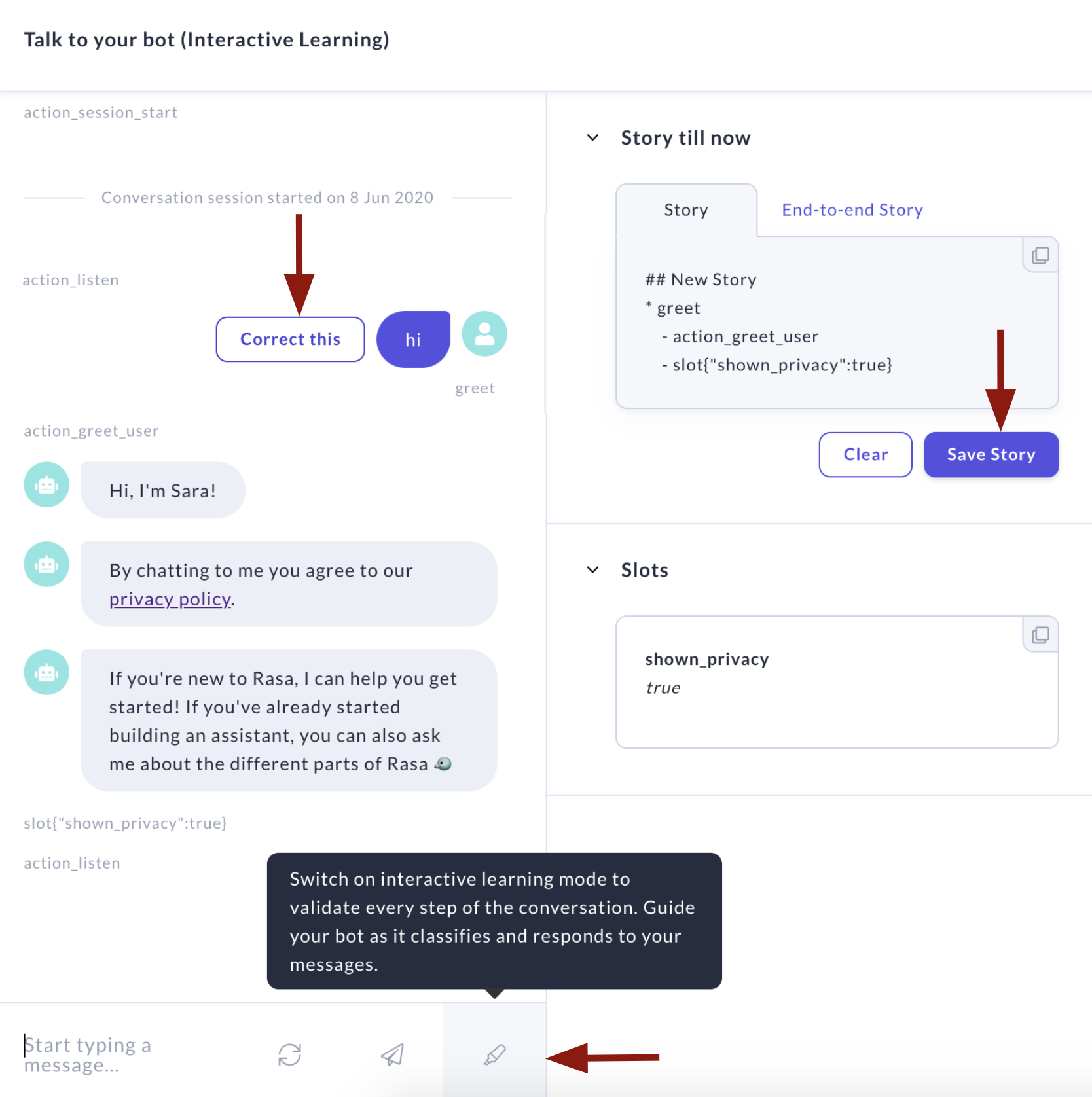

The Talk to your Bot screen shows much more detail, such as the intents, entities, actions, and slots present in your conversation. This is helpful for debugging, and it is a great way for everyone involved in building your assistant to talk to it. You can also correct any mistakes the bot makes and create new NLU training data and stories. Use the Talk to your Bot screen to share your assistant with test users who know the assistant’s domain and can accurately correct its predictions.

To correct predictions during a conversation, click Correct this next to a message. This lets you correct a specific user input or bot action and continue the conversation from the corrected event. Alternatively, to confirm or correct each message while talking to the assistant, turn on Interactive Learning mode.

In Interactive Learning mode, messages with labeled intents are added to your training data automatically instead of going to the NLU Inbox screen. You can learn more about Interactive Learning in the Rasa Docs.

At any point in a conversation, you can click Save to add the story up to that point to your training data. You can also save it as a test story.

Rasa Enterprise supports multiple users, and each user's access to the Talk to your Bot screen is determined by role-based access control.

External Channels

The advantage of using Rasa Enterprise to share your bot is that you can get real users to try out your assistant without having to set up any voice or chat channels, such as Slack or a chat widget on your website.

Once you are ready, you can connect external channels, and conversations from all channels (Rasa Enterprise and external) will show up in the Conversations screen. If you have any channel-specific responses, you’ll definitely want to connect the external channel to test them.

With whom?

The first test users of your assistant will naturally be you and your teammates. You should absolutely talk to your assistant yourself, and make use of interactive learning to improve it as you go. However, for the purposes of Conversation-Driven Development, the people who build the assistant actually know too much. They know exactly what the assistant was made to do, how to work around what it doesn’t know, and how to break it. Real users won’t have any of this knowledge, and will use your assistant in a completely different way.

You don’t need to appoint official test users. Anyone can be a test user, as long as they can access the assistant through one of the channels it is available on. Colleagues who aren’t directly involved in building the assistant, members of other teams in your organization, and even friends, acquaintances, or people in your social media network could act as test users. Think about who your end users are and, if possible, recruit a sample of test users that is representative of them.

Who you can share your assistant with might be limited by security concerns or organizational rules, but getting your assistant out to as many test users as possible should be a high priority in the development of your assistant. Real conversations are central to Conversation-Driven Development.

Instructing Your Test Users

When you share an assistant, it’s helpful to provide the test users with some (but not too much) information about how to use it. The key is to gather useful conversations that help you improve your assistant.

You can give test users a short introduction to your assistant as part of a “help” skill, or add a summary of the assistant’s skills to a greeting message that starts the conversation. To collect diverse training data, avoid telling users directly what they should say. It’s better to describe user goals they can accomplish than to give them example questions to ask. For example, to describe the helpdesk-assistant to test users, the greeting might say:

“Hi! I’m your IT helpdesk assistant! I can help you open IT helpdesk tickets, and check on the status of open tickets.”

You could also provide user profiles or user goals to some of your test users. For example:

“You are a customer of Iron Bank who wants to check up on their credit card balance and pay it off.”

“You are a long time customer of Digital Music and you notice the new assistant button on the website. You open it just to see what it can do.”

“You opened a ticket to get your password reset last week, and you don’t know what happened to it.”

These kinds of “user stories” can help your test users think creatively about what to ask the assistant, and can help you collect conversations covering all your assistant’s skills, not just the most obvious ones or the ones people will naturally try first.