Ready to deploy your model in the real world? What about the biases in your data and, therefore, in the trained model? This series from the Rasa Learning Center deals with the critical issue of bias in embeddings.

In this tutorial series, you will learn how to measure bias and which techniques can mitigate it.

How to measure biases in word embeddings

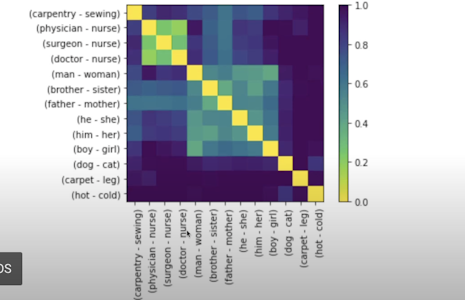

This tutorial covers embeddings and word vectors. In a 2d word embedding, each word vector has a direction and a magnitude. The tutorial also shows that the difference between [he] and [she] is parallel to the difference between [king] and [queen], and that this shared direction can be read as a gender direction.
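The following is a minimal sketch in plain Python. The three-dimensional vectors are made up for illustration; real embeddings come from a trained model and have many more dimensions. Helper names such as `cosine` are our own and not part of any library. The sketch checks that the he/she and king/queen difference vectors point in roughly the same direction, then turns one of them into a unit-length gender direction.

```python
import math

# Hypothetical hand-picked 3-d vectors; real embeddings have hundreds of dimensions.
vectors = {
    "he": [1.0, 0.2, 0.1],
    "she": [-1.0, 0.2, 0.1],
    "king": [0.9, 0.8, 0.5],
    "queen": [-0.9, 0.75, 0.5],
}

def sub(a, b):
    """Element-wise difference a - b."""
    return [x - y for x, y in zip(a, b)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def norm(a):
    """Magnitude (Euclidean length) of a vector."""
    return math.sqrt(dot(a, a))

def cosine(a, b):
    """Cosine of the angle between a and b: 1 = same direction, 0 = perpendicular."""
    return dot(a, b) / (norm(a) * norm(b))

he_she = sub(vectors["he"], vectors["she"])
king_queen = sub(vectors["king"], vectors["queen"])

# Nearly parallel difference vectors have a cosine close to 1.
print(round(cosine(he_she, king_queen), 3))  # prints 1.0 (rounded)

# Dividing by the magnitude gives a unit-length "gender direction".
gender_direction = [x / norm(he_she) for x in he_she]
print(gender_direction)  # prints [1.0, 0.0, 0.0]
```

This sketch uses a single word pair for brevity. A common refinement is to combine the differences of several gendered pairs to get a more stable direction.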

Once we have a gender direction, we can check which words correlate with it, for example stereotypically gendered jobs such as doctor and nurse.

The correlation chart compares gender with stereotypical jobs and with unrelated words such as dog and cat. It shows a high correlation between men and doctors.
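A simple way to put a number on that correlation is the cosine similarity between each word vector and the gender direction. The sketch below assumes a unit gender direction like the one derived from he/she and uses hypothetical toy vectors. It is a stand-in for the tutorial's chart, not its actual code.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cosine(a, b):
    return dot(a, b) / math.sqrt(dot(a, a) * dot(b, b))

# Assumed unit gender direction: positive leans towards "he", negative towards "she".
gender_direction = [1.0, 0.0, 0.0]

# Hypothetical toy vectors; the first coordinate plays the role of the gender axis.
words = {
    "doctor": [0.4, 0.7, 0.3],
    "nurse": [-0.5, 0.7, 0.3],
    "dog": [0.0, 0.1, 0.9],
    "cat": [0.02, 0.15, 0.85],
}

# Score each word by how strongly it points along the gender direction.
scores = {word: cosine(vec, gender_direction) for word, vec in words.items()}
for word, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{word:>7} {score:+.2f}")
```

With these made-up numbers, doctor scores positive (towards he) and nurse scores negative (towards she). Dog and cat stay near zero.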

For more details, click here.

Mitigating Bias:

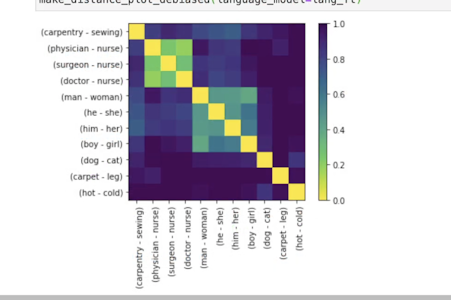

This tutorial is based on a paper about debiasing with projection. It demonstrates how projecting word vectors onto an axis perpendicular to the gender axis reduces bias.

The previous tutorial introduced the gender axis. In this method, a neutral axis is drawn perpendicular to it, and all the vectors are projected onto this neutral axis. Because the cosine between perpendicular vectors is zero, the projected vectors have no component along the gender axis.
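The projection itself is one line of vector arithmetic. For a unit gender direction g, the neutral version of a vector v is v - (v·g) g. Below is a minimal sketch with hypothetical toy vectors. In this example the gender direction happens to lie along the first coordinate, but `project_out` (our own helper name) works for any direction.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def unit(a):
    """Scale a vector to length 1."""
    n = math.sqrt(dot(a, a))
    return [x / n for x in a]

def project_out(v, direction):
    """Remove the component of v along `direction`: v - (v . g) g, with g = unit(direction)."""
    g = unit(direction)
    along = dot(v, g)
    return [x - along * gx for x, gx in zip(v, g)]

gender_direction = [2.0, 0.0, 0.0]  # e.g. he - she from hypothetical toy vectors
doctor = [0.4, 0.7, 0.3]
nurse = [-0.5, 0.7, 0.3]

doctor_neutral = project_out(doctor, gender_direction)
nurse_neutral = project_out(nurse, gender_direction)
print(doctor_neutral, nurse_neutral)

# Component left on the gender axis after projection (zero up to rounding).
print(dot(doctor_neutral, unit(gender_direction)))
```

In this toy case, doctor and nurse differ only along the gender axis, so after projection they become identical vectors.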

A correlation chart between the stereotypical jobs and gender then shows that the correlation between men and doctors has faded.

For more details, click here.

Lipstick on a Pig:

This tutorial is based on the paper by Hila Gonen and Yoav Goldberg. The paper argues that projecting embeddings onto a neutral axis only hides the bias rather than removing it: when the projected vectors are plotted in 2d, gender-related words still cluster together.

The tutorial follows an experiment that checks whether a gender classifier drops to 50 percent (chance level) under different combinations of biased and debiased embeddings and models.
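The clustering claim can be checked without plotting. The sketch below is a simplified check, not the paper's exact procedure. It projects hypothetical toy vectors off the gender direction and runs a tiny 2-means clustering on the result. The toy data deliberately include a second coordinate that correlates with gender but is not on the gender axis, which is the situation the paper argues real embeddings are in.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def project_out(v, g):
    """Remove the component of v along the unit vector g."""
    along = dot(v, g)
    return [x - along * gx for x, gx in zip(v, g)]

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def two_means(points, iterations=10):
    """Plain 2-means with a deterministic start (first and last point as seeds)."""
    centroids = [points[0], points[-1]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assign each point to its nearest centroid.
        labels = [0 if sq_dist(p, centroids[0]) <= sq_dist(p, centroids[1]) else 1 for p in points]
        # Move each centroid to the mean of its members.
        for k in (0, 1):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                centroids[k] = [sum(coord) / len(members) for coord in zip(*members)]
    return labels

g = [1.0, 0.0, 0.0]  # hypothetical unit gender direction
# Hypothetical toy vectors: coordinate 1 correlates with gender but is not on g.
words = {
    "engineer": [0.6, 0.5, 0.2],
    "captain": [0.5, 0.6, 0.1],
    "boss": [0.7, 0.4, 0.3],
    "nurse": [-0.6, -0.5, 0.2],
    "receptionist": [-0.5, -0.6, 0.1],
    "homemaker": [-0.7, -0.4, 0.3],
}

names = list(words)
# Original bias label from the biased vectors: 0 = male-leaning, 1 = female-leaning.
original_bias = [0 if dot(words[w], g) > 0 else 1 for w in names]
debiased = [project_out(words[w], g) for w in names]
clusters = two_means(debiased)

# Cluster ids are arbitrary, so take the better of the two possible matchings.
agree = sum(c == b for c, b in zip(clusters, original_bias)) / len(names)
alignment = max(agree, 1 - agree)
print(dict(zip(names, clusters)), alignment)
```

An alignment of 1.0 means the clusters recover the original male/female split perfectly, even though every projected vector has zero component along g.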

Method I: Biased embedding, biased model

In this method, a model is trained on biased embeddings and then tested on biased embeddings, as a reference point.

The classifier reaches an F1 score of 90 percent. If debiasing worked, using a debiased embedding or model should push this score toward 50 percent: with only two gender classes, that is what random guessing achieves.
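For reference, here is how the numbers in this experiment are computed. This is a from-scratch sketch on hypothetical labels, with 1 and 0 standing for the two gender classes. In practice, a library such as scikit-learn provides the same metrics.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the `positive` class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

y_true = [1, 1, 1, 1, 0, 0, 0, 0]        # balanced: four of each class (hypothetical)
good_pred = [1, 1, 1, 0, 0, 0, 0, 1]     # a classifier that gets 6 of 8 right
constant_pred = [1] * len(y_true)        # always guesses the same class

print(accuracy(y_true, good_pred), f1_score(y_true, good_pred))          # prints 0.75 0.75
print(accuracy(y_true, constant_pred), f1_score(y_true, constant_pred))
```

The constant guesser illustrates the 50 percent baseline. On balanced classes its accuracy is 0.5, but its F1 on the class it always predicts is about 0.67. It therefore matters which metric is being compared against 50 percent.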

Next, let’s see what happens when debiased embeddings are used.

Method II: Debiased embedding, biased model

In the second method, we feed debiased embeddings into the old model, which was trained on biased embeddings, and expect it to no longer be able to predict gender. A schematic of the method is provided in the tutorial.

However, the gender classifier still reaches an accuracy of 81 percent. This is far from the 50 percent we would expect if the gender information had been removed.

Method III: Debiased embedding, debiased model

In the third method, we train a new model on debiased training data and use it to predict gender on debiased test data. Since both the model and the vectors it sees are now debiased, we hope it can no longer classify gender.

This gives us the following result.

Conclusion: The classifier can still predict gender quite well, so the debiasing techniques failed.
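The pattern behind this conclusion can be reproduced on toy data. The sketch below runs the three setups with a nearest-centroid classifier, which is an assumption; the tutorial's actual model may differ. The vectors are hypothetical. Their first coordinate is the gender direction, and their second is a gender-correlated feature that the projection leaves untouched.

```python
def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def centroid(vectors):
    return [sum(coord) / len(vectors) for coord in zip(*vectors)]

def drop_gender_axis(v):
    """Projection for this toy case, where the gender direction is exactly axis 0."""
    return [0.0] + v[1:]

class NearestCentroid:
    """Tiny stand-in classifier: predicts the label whose training mean is closest."""
    def fit(self, X, y):
        self.centroids = {label: centroid([x for x, t in zip(X, y) if t == label]) for label in set(y)}
        return self

    def predict(self, X):
        return [min(self.centroids, key=lambda label: sq_dist(x, self.centroids[label])) for x in X]

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical toy data: axis 0 = gender direction, axis 1 = gender-correlated feature.
X_train = [[0.6, 0.5, 0.2], [0.5, 0.6, 0.1], [0.7, 0.4, 0.3],
           [-0.6, -0.5, 0.2], [-0.5, -0.6, 0.1], [-0.7, -0.4, 0.3]]
y_train = ["m", "m", "m", "f", "f", "f"]
X_test = [[0.55, 0.45, 0.25], [0.65, 0.55, 0.15], [-0.55, -0.45, 0.25], [-0.65, -0.55, 0.15]]
y_test = ["m", "m", "f", "f"]

X_train_db = [drop_gender_axis(v) for v in X_train]
X_test_db = [drop_gender_axis(v) for v in X_test]

biased_model = NearestCentroid().fit(X_train, y_train)
debiased_model = NearestCentroid().fit(X_train_db, y_train)

results = {
    "I: biased embedding, biased model": accuracy(y_test, biased_model.predict(X_test)),
    "II: debiased embedding, biased model": accuracy(y_test, biased_model.predict(X_test_db)),
    "III: debiased embedding, debiased model": accuracy(y_test, debiased_model.predict(X_test_db)),
}
for setup, acc in results.items():
    print(f"{setup}: {acc:.2f}")
```

On this hand-made data, all three setups score 1.00. The exact values carry no meaning. The point is that removing the gender axis does not bring accuracy anywhere near 50 percent while another coordinate still encodes gender, which is the same pattern as the results above.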

For more details, click here.

Projection maths:

In this tutorial, we learn why projections do not work. Even after we project the embeddings onto a plane, male- and female-associated words still form separate clusters, so gender can still be classified.

Measured with cosine distance, linear projection appears to remove the gender “direction” from our embeddings. Euclidean distance, however, does not show the same result.
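One way to see the contrast between the two measures is sketched below with hypothetical toy vectors. Coordinate 0 is the gender direction and coordinate 1 is a gender-correlated feature. After projection, every word has zero cosine similarity to the gender direction, yet Euclidean distances still separate the two groups.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cosine(a, b):
    return dot(a, b) / math.sqrt(dot(a, a) * dot(b, b))

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def project_out(v, g):
    """Remove the component of v along g (g is assumed to be unit length)."""
    along = dot(v, g)
    return [x - along * gx for x, gx in zip(v, g)]

g = [1.0, 0.0, 0.0]  # hypothetical unit gender direction
male_words = [[0.6, 0.5, 0.2], [0.5, 0.6, 0.1]]
female_words = [[-0.6, -0.5, 0.2], [-0.5, -0.6, 0.1]]

male_neutral = [project_out(v, g) for v in male_words]
female_neutral = [project_out(v, g) for v in female_words]

# Cosine view: nothing is left along the gender direction.
max_cos = max(abs(cosine(v, g)) for v in male_neutral + female_neutral)

# Euclidean view: same-group words are still closer than cross-group words.
within = (euclidean(*male_neutral) + euclidean(*female_neutral)) / 2
between = sum(euclidean(m, f) for m in male_neutral for f in female_neutral) / 4
print(max_cos, round(within, 2), round(between, 2))
```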

It is difficult to quantify biases in language models. If you use embeddings from a model trained on a large internet corpus, eliminating their biases is challenging. We should deploy such applications responsibly.

For more details, click here.