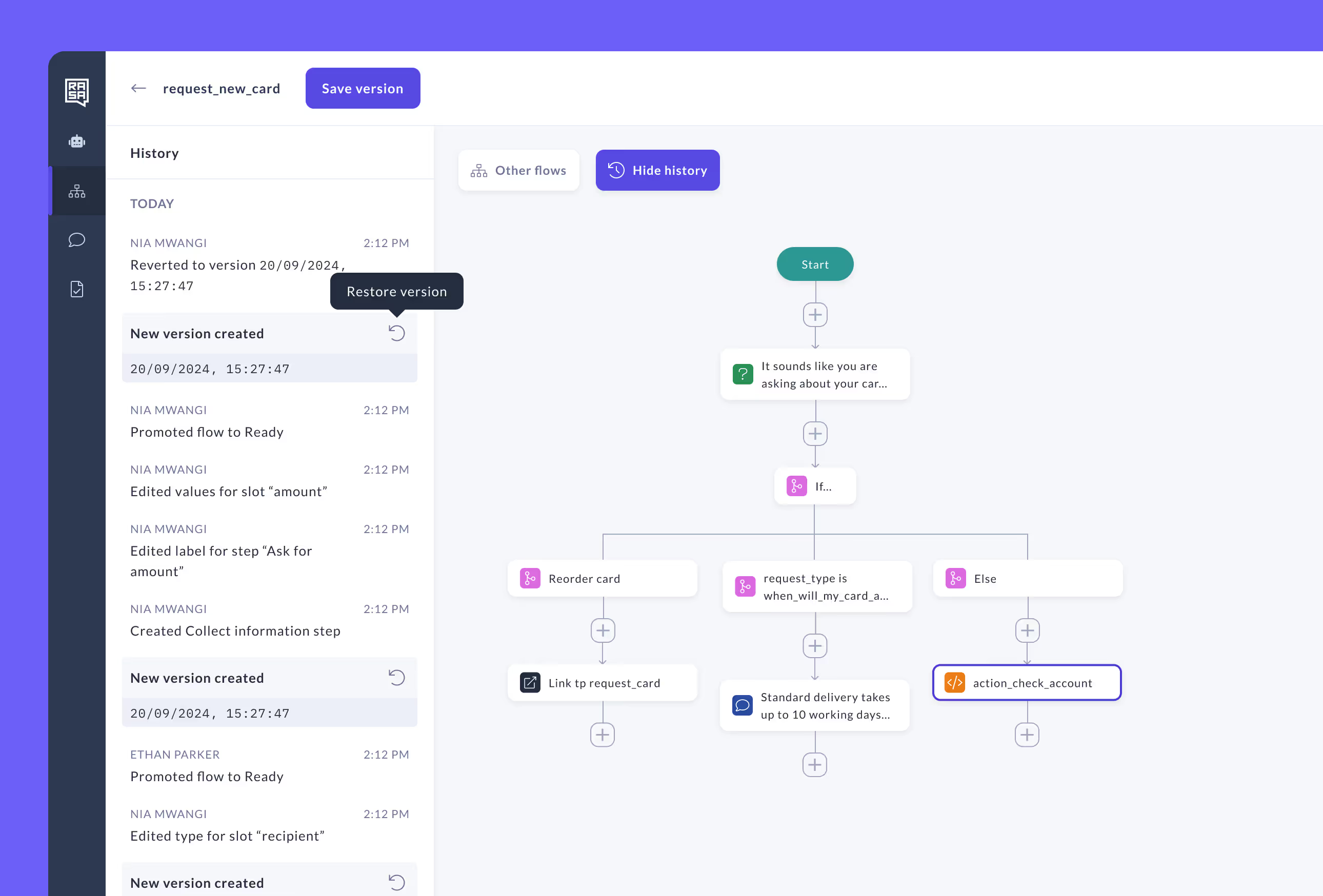

Increase Transparency with Rasa Flow Version Control

Have you ever adjusted a flow in your AI agent and thought, “Oops, where’s the undo button?” Whether it’s a quick fix gone wrong or an experiment that didn’t pan out, backtracking can save time and effort. That’s where Rasa's Flow Version Control steps in to streamline your workflow. Think of it as the “Google Doc Version History” for your conversational AI. With this feature, you can:

- Save flow versions at any point so you always have a safe backup.

- Easily revert to previous versions when something doesn’t go as planned.

- Track changes collaboratively by seeing who made updates and where.

Why It Matters

Managing conversational flows can be complex, especially in collaborative environments where multiple hands are tweaking designs. With Rasa Flow Version Control, you no longer need to wonder:

- “Did I break this flow?”

- “Wait, who changed this response?”

Instead, you can quickly review a version history that tells you exactly what was changed, when, and by whom. This level of transparency empowers teams to work confidently, knowing there’s always a way to undo, refine, or pinpoint any adjustments.

Take Control: Download your AI agent directly from the Rasa Studio UI

With Rasa’s new “Download Project” feature, you can export your conversational AI agent directly from the user interface. No extra steps, hassle, or command-line tools are required. With just one click, you can download your project, making it easy to store, share, or move your agent wherever needed.

Why It Matters

This feature gives you greater control and flexibility over your AI agent development by addressing key needs:

- Portability: You can now download and save your project as a file or share it with partners, clients, or your team.

- Backup and recovery: Safeguard your work by creating local backups of your project, ensuring you’re always prepared for unexpected changes or system issues.

- Learning: Reviewing the .yaml files in your downloaded agent project helps you understand how Rasa works under the hood.

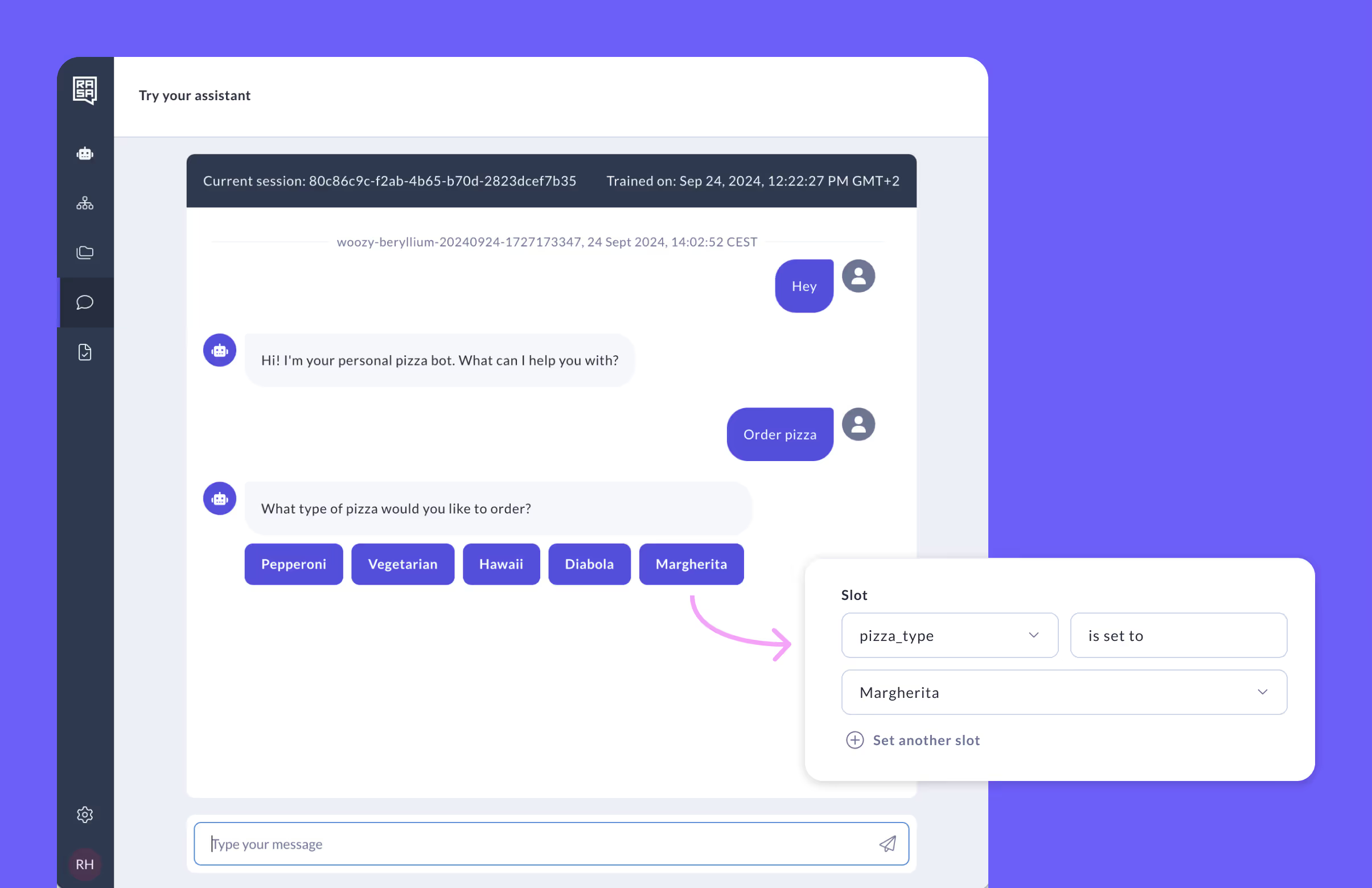

Let your users have it all at the click of a button with advanced button configuration

No matter how sophisticated your agent is, the humble button remains key to creating smooth and intuitive user experiences. Buttons make interactions easier by reducing effort, letting users quickly select options instead of typing long requests.

Advanced Button Configuration and Payloads extend this core capability. With this enhancement, conversational AI teams can configure a button so that a single click can:

- Trigger intents directly, bypassing LLM calls to reduce costs.

- Pass entities to the system without invoking an LLM, again reducing costs.

- Set slots with predefined data based on button selections, ensuring accurate and efficient responses.

- Customize payloads with any specific text or data, enabling unparalleled flexibility in how buttons interact with your agent.
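The payload options above can be sketched in a few lines of Python. This is a minimal illustration, not Rasa's implementation: the helper names (`intent_payload`, `set_slots_payload`, `button`) and the example intent, entity, and slot names are hypothetical. The slash-prefixed payload formats are assumed from the convention in Rasa's documentation, so check the exact syntax against the docs for your version.

```python
import json


def intent_payload(intent, entities=None):
    """Build a payload that triggers an intent, optionally with entities.

    Assumed format: /intent_name{"entity": "value"}
    """
    if not entities:
        return f"/{intent}"
    return f"/{intent}{json.dumps(entities)}"


def set_slots_payload(**slots):
    """Build a payload that sets slots directly.

    Assumed format: /SetSlots(slot_name=value, other_slot=value)
    """
    assignments = ", ".join(f"{name}={value}" for name, value in slots.items())
    return f"/SetSlots({assignments})"


def button(title, payload):
    """Pair the label the user sees with the payload sent on click."""
    return {"title": title, "payload": payload}


# Hypothetical buttons for a banking agent.
buttons = [
    button("Check balance", intent_payload("check_balance")),
    button("Savings", intent_payload("check_balance", {"account_type": "savings"})),
    button("Transfer 50", set_slots_payload(transfer_amount=50)),
    button("Talk to a human", "I want to talk to a human"),  # free-text payload
]

for b in buttons:
    print(b["title"], "->", b["payload"])
```

Because the first three payloads carry structured commands rather than free text, the agent can act on them without asking an LLM to interpret the click. The last button sends plain text, which goes through the normal understanding path.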

Why It Matters

Buttons aren’t going anywhere and still play a role in transactional conversations with users. Here’s how the advanced button configuration enhancement makes a difference:

- Cost savings: By using buttons to collect precise payloads or trigger predefined actions, you can bypass unnecessary LLM calls. This reduces operational costs and minimizes latency for faster responses.

- Enhanced customization: Custom payloads let you go beyond data collection. Use buttons to trigger complex workflows, automate system actions, and navigate conversations with greater precision and control.

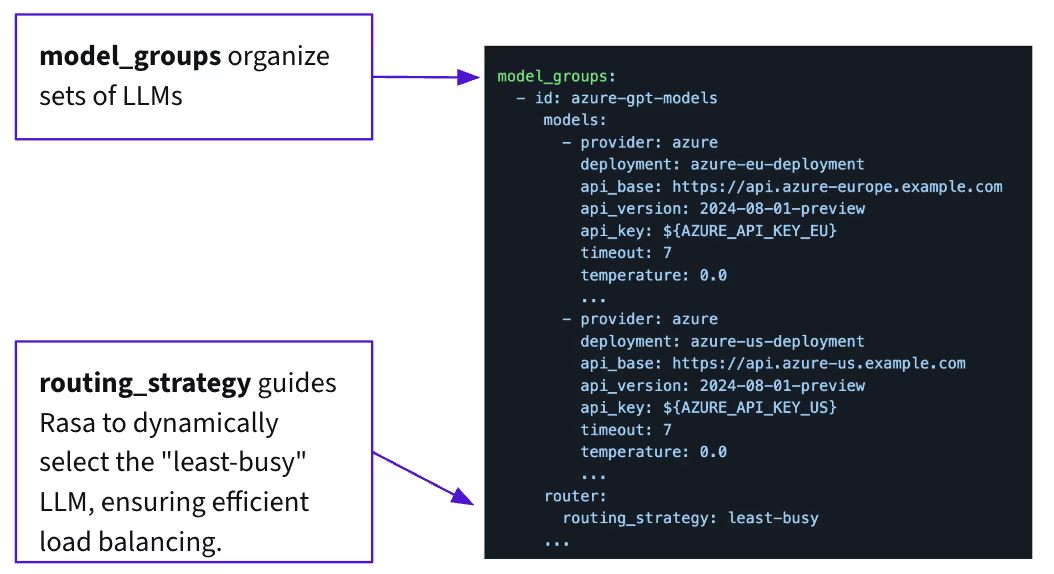

Scale Smarter with Multi-LLM Routing for Optimal Resource Management

Managing a sophisticated LLM-powered dialogue system like CALM at scale requires smart resource management, especially during high volumes of concurrent customer conversations. Multi-LLM Routing simplifies this process, enhancing performance, reliability, and flexibility in your conversational AI environments.

With Multi-LLM Routing, you can:

- Distribute and load-balance requests across multiple LLM and embedding models to avoid bottlenecks and rate limits.

- Organize models into resource groups that can be referenced based on different environments (such as development, testing, or production), ensuring your system uses the right resources for each stage.

- Rely on LiteLLM, which implements the routing logic under the hood, to simplify model management and keep operations running smoothly.
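To make the idea concrete, here is a minimal Python sketch of load balancing across model groups. It is not Rasa's or LiteLLM's routing logic: it substitutes a plain round-robin strategy with fallback on rate limits, and every name in it (`Deployment`, `ModelGroup`, `RateLimited`, `route`, the group and deployment names, the `capacity` counter) is hypothetical. The deployments are stand-ins that simulate a quota instead of calling a real provider.

```python
from dataclasses import dataclass


class RateLimited(Exception):
    """Raised when a (simulated) deployment has exhausted its quota."""


@dataclass
class Deployment:
    name: str
    capacity: int  # hypothetical number of requests allowed before rate limiting
    used: int = 0

    def complete(self, prompt):
        if self.used >= self.capacity:
            raise RateLimited(self.name)
        self.used += 1
        return f"{self.name}: {prompt}"


@dataclass
class ModelGroup:
    group_id: str
    deployments: list
    _next: int = 0  # index of the deployment to try first on the next request

    def complete(self, prompt):
        # Round-robin over deployments, skipping any that are rate limited.
        for _ in range(len(self.deployments)):
            deployment = self.deployments[self._next]
            self._next = (self._next + 1) % len(self.deployments)
            try:
                return deployment.complete(prompt)
            except RateLimited:
                continue
        raise RateLimited(f"all deployments in group '{self.group_id}' are rate limited")


# One group per environment, so each stage uses its own resources.
GROUPS = {
    "development": ModelGroup("development", [Deployment("dev-small", 2)]),
    "production": ModelGroup(
        "production", [Deployment("prod-a", 2), Deployment("prod-b", 2)]
    ),
}


def route(environment, prompt):
    """Send a prompt to the model group configured for the environment."""
    return GROUPS[environment].complete(prompt)


for i in range(4):
    print(route("production", f"request {i}"))
```

In this sketch the four production requests alternate between `prod-a` and `prod-b`, so neither deployment absorbs all of the traffic. A fifth request would raise `RateLimited` because both simulated quotas are spent.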

Why It Matters

When running LLM-powered systems at scale, rate limits imposed by providers like Azure and other LLM vendors can quickly become a challenge, particularly during peak traffic. Multi-LLM Routing ensures your agent performs seamlessly, even under heavy loads.

Here’s why it’s a game-changer:

- Avoid downtime and bottlenecks: By distributing requests across multiple LLMs, Multi-LLM Routing keeps any single model deployment from becoming overwhelmed, so your system stays responsive and reliable.

- Maintain pricing control: Many providers charge premium fees for higher rate limits, and exceeding your current usage quota can force an upgrade to a higher-tier plan just to maintain performance. Spreading traffic across several deployments helps you stay within the quotas you already have.

- Optimal resource management: LLM routing logic allows you to scale your system effortlessly, distributing requests to handle concurrent conversations while maintaining high performance.

- Flexibility across providers: Group LLMs to diversify your models and reduce dependency on a single vendor.

Additional Enhancements you’ll want to try out now:

- Python 3.11 support

- Further latency reduction for voice interactions

- Simplified, faster training for Rasa Studio

Check out our Rasa and Rasa Studio changelogs for more information. Interested in learning more about Rasa? Connect with an expert.