At Rasa, we believe that developers should have the tools to build high-performing AI assistants capable of handling complex conversations, without incurring significant costs of proprietary LLM APIs. This can be achieved by leveraging smaller, open-source LLMs for less computationally intensive tasks.

In our previous posts, we investigated the options for using smaller LLMs for the Command Generator component. In this post we will show you how you can use smaller LLMs for Contextual Rephrasing tasks to significantly cut your system’s costs and improve conversation latency without sacrificing the quality.

What is Contextual Rephrasing?

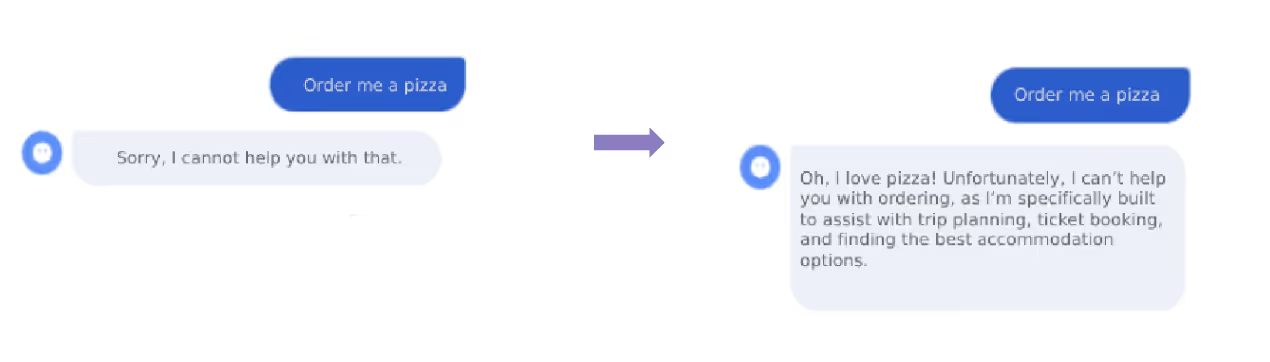

Contextual Response Rephraser in the CALM framework adds a powerful finishing touch to making conversations more natural and dynamic by leveraging LLMs to rephrase templated replies. For example, Contextual Rephrasing enables the assistant to handle unexpected user requests playfully instead of sending a templated response, “Sorry, I cannot help you with that”:

This capability specifically shines in the following scenarios:

- Dynamic Responses for Repetitive Inputs: Users may repeat inputs or restart sessions, and a more engaging, varied response can elevate the interaction quality.

- Seamless Chit-Chat Handling: Chit-chat refers to small-talk-style inputs unrelated to the conversation’s core goal. As shown in the example above, instead of sending a dry, templated response, the assistant can playfully address the user’s request and steer the conversation back toward the specific goal.

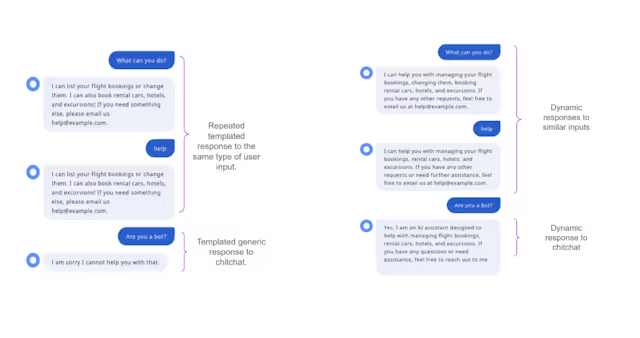

Now, let’s look at a more advanced example. Below, we compare a conversation without Contextual Rephrasing (left-hand side) to a conversation with Contextual Rephrasing powered by OpenAI’s GPT-3 model. Notice how the model subtly refines the assistant's response to a repeated help request and smoothly handles casual, chitchat-like exchanges, redirecting the conversation back to providing relevant assistance.

Rephrasing sprinkles a little magic on our conversations. But do we need a massive LLM for this, or will a smaller model do the trick? Most of the time, OpenAI's proprietary models are the first choice that developers would pick for Contextual Rephrasing. These models generate high-quality rephrased outputs, ensuring a polished user experience. However, the performance comes at a cost - both money and time.

As assistants scale-especially when multiple CALM components also rely on LLMs-the expenses associated with API calls to proprietary models can quickly escalate. The latency of these models is also typically too slow for voice applications. For this reason, adopting such models could significantly enhance the cost-effectiveness and efficiency of this essential CALM component, paving the way for scalable, high-performing conversational AI systems.

Smaller LLMs for Contextual Response Rephrasing

Evaluating the performance nuances of Contextual Response Rephrasing component

Evaluating the Command Generator components of CALM typically relies on End-to-End testing. These tests measure how effectively the LLM predicts flow transitions in diverse conversational scenarios, from straightforward "happy paths" to more intricate context-switching situations. Ultimately, end-to-end testing evaluates if the assistant’s prediction matches the expected outcome.

When evaluating the performance of Contextual Response Rephrasing, things become a bit more complicated. Traditional end-to-end testing methods aren’t sufficient because response variability is an expected outcome of the Contextual Response Rephraser due to the nature of this component. One effective way to evaluate the component is by manually checking how well the assistant handles interactions in two key areas:

- Handling Repeated Inputs: A model that performs well would subtly rephrase the templated response to avoid repeated response messages without losing key details and the meaning of the response message.

- Managing Chit-Chat Interactions: A model that performs well would enable the assistant to playfully address the chitchat, at times mimicking the user’s tone and interactions. All without derailing the conversation from its goal.

Another quantitative approach enhances traditional end-to-end testing with LLM-as-a-judge techniques, which assess factual accuracy between the predicted rephrased response and the expected outcome. LLM-as-a-judge is a technique that uses an LLM with an evaluation prompt to rate generated text based on specific criteria.

Last but not least, evaluating the impact of different models on latency is just as crucial as assessing their overall performance. Latency-the time users wait for the assistant’s response-is a key metric for understanding its effect on user experience. High latency can negatively impact session success, duration, and ultimately, user retention.

Using smaller models for Contextual Rephrasing

While OpenAI models excel at rephrasing, using them for contextual rephrasing tasks can become expensive. To explore alternatives, we tested smaller (under 15B parameter) open-source models to determine if they could serve as viable replacements. Our experiments revealed that smaller Llama models-such as Llama-3.1-8B-Instruct, Llama-3.2-8B-Instruct, and Google’s Gemma model-performed remarkably well in handling contextual rephrasing tasks.

It’s important to note that these off-the-shelf models are not specifically trained for contextual rephrasing but rather on a broad range of data. To optimize their performance for your assistant, you can adjust the temperature parameter to minimize hallucinations and carefully design the prompt templates for better results.

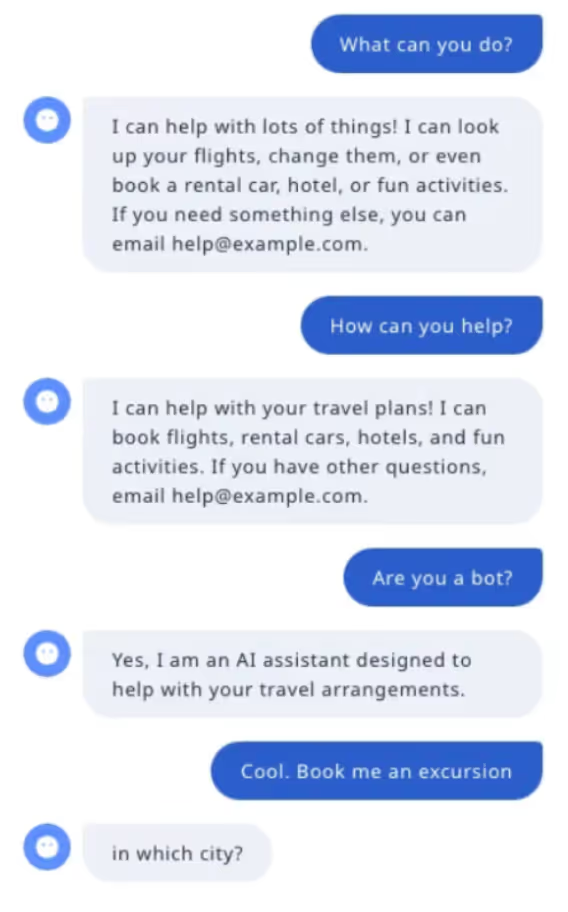

Below is an example of a user-assistant conversation using the Gemma 9B model for rephrasing. The flow and rephrasing quality are comparable to those observed with much larger OpenAI models, demonstrating the strong potential of these smaller, cost-effective alternatives.

The outcomes for Llama 3B, 8B, and 13B models follow a similar trend. To better quantify the performance of these smaller models, we used the LLM-as-a-judge test implementation via deepeval, an open-source LLM evaluation library. We aimed to measure how well the rephrased responses perform across different skills that the assistant was designed to handle:

- Cancellations: The user cancels a request.

- Context switch: The user moves from one request to a different one.

- Corrections: The user updates some provided details.

- Happy path: The user follows the assistant's lead.

- Multiskill: The user makes multiple requests during the conversation.

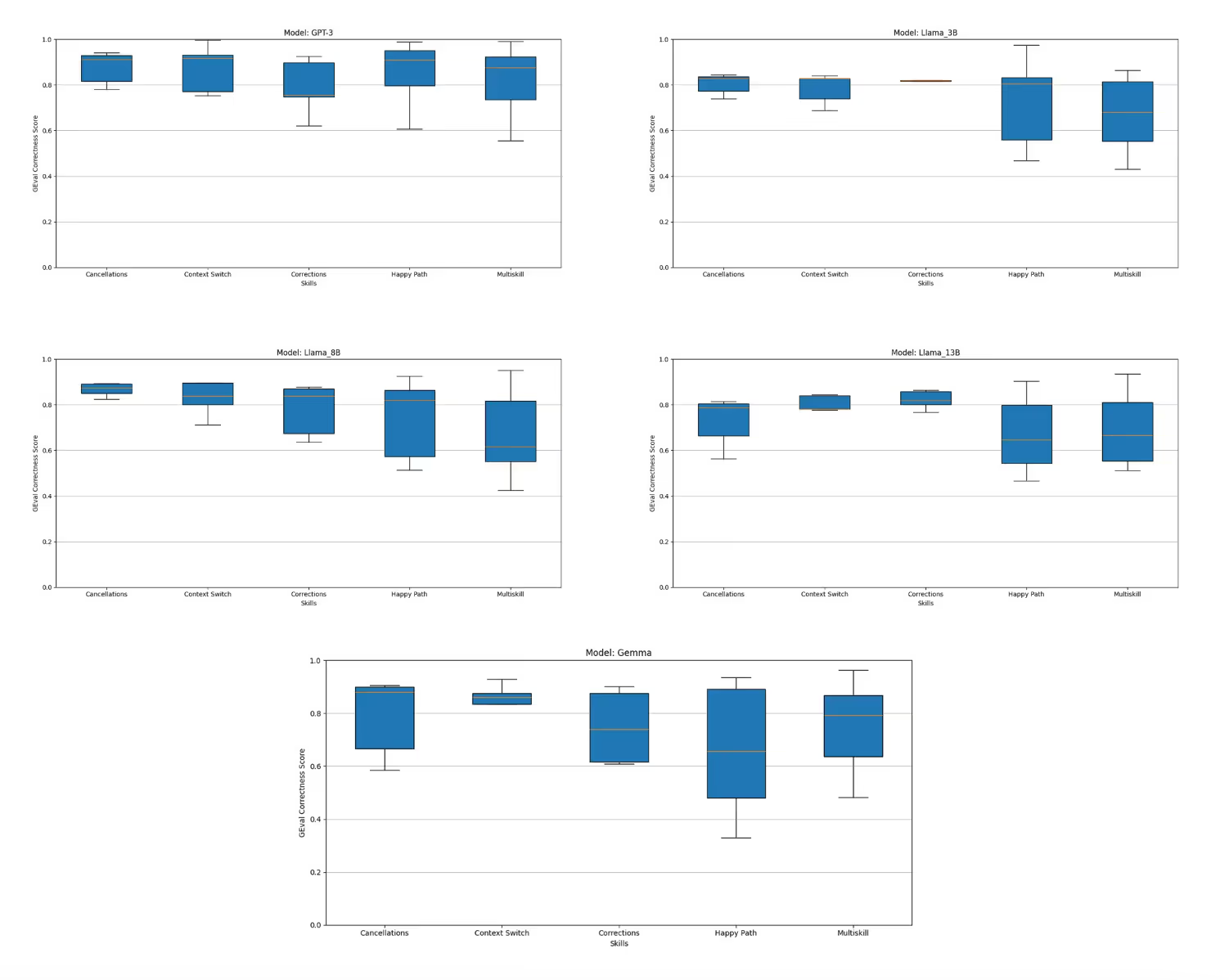

We measured the GEval Correctness score, which evaluates how accurate the factual information was in the predicted rephrased response compared to the actual expected response. A correctness score of 1 indicates that the responses are identical in terms of factual information. In contrast, a score of 0 indicates that the responses differ completely (e.g., dates, locations, or names are entirely different, along with input context). Below are a few actual test examples showing high and low scores, and an example of an outlier where the rephrasing steered the conversation details completely:

The charts below showcase the Correctness test results for each model we compared.

The GPT-3 model performs exceptionally well handling rephrasing tasks across various skills. Smaller models (especially Llama 3B, Llama 8B, and Llama 13B) showcase highly comparable results for specific skills like cancellations, context switching, and corrections. However, they tend to struggle more with generalization, often compensating by incorporating excessive reasoning into their rephrased responses or over-elaborating, which can sometimes shift the meaning of the original response. This behavior is more apparent in longer, multi-skill conversations that include multiple factual details.

We found that fine-tuning the temperature parameter and refining the prompt with clearer instructions-specifying exactly what information the assistant should include and what details to omit (e.g., unnecessary reasoning)-significantly improves the performance of these smaller models.

An additional and very important aspect that developers should keep in mind about Contextual Rephrasing is that it should be used in situations where rephrasing is truly necessary, such as handling chitchat or to make conversations more dynamic. Limiting where and when rephrasing is applied can also help prevent the LLM from engaging in excessive elaboration or hallucinations.

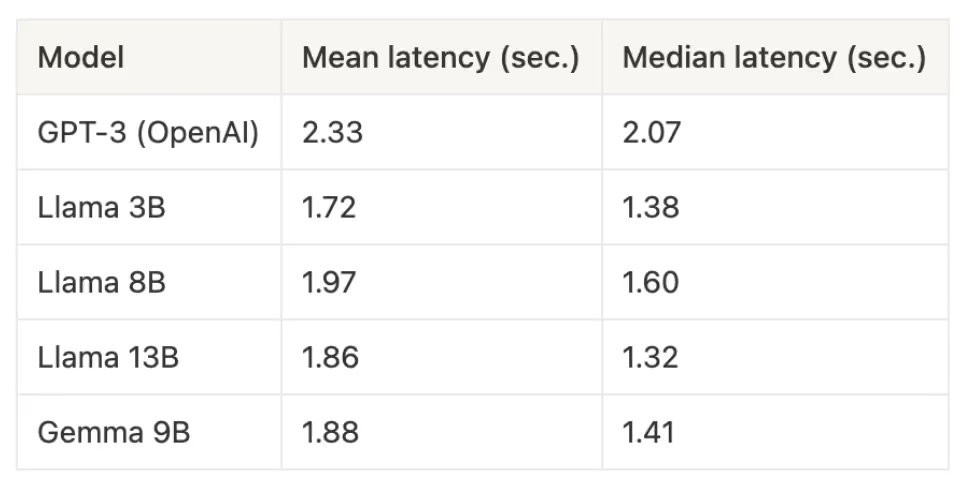

Cost and latency are critical factors when using smaller models. High latency can lead to user dissatisfaction and early drop-offs. Our comparison shows that while larger models like GPT-3 may struggle with latency, smaller models like Llama and Gemma (accessed through dedicated inference endpoints on HuggingFace) deliver significantly better performance for contextual rephrasing:

While off-the-shelf Llama and Gemma models provide pretty good results for general Contextual Rephrasing tasks, developers can get even better performance measures with more sophisticated fine-tuning techniques. The Rasa team has recently published fine-tuning recipes and fine-tuned models for Command Generator tasks. As we continue our work on experimenting with small LLMs for other tasks like Contextual Rephrasing, we will share the techniques with the community.

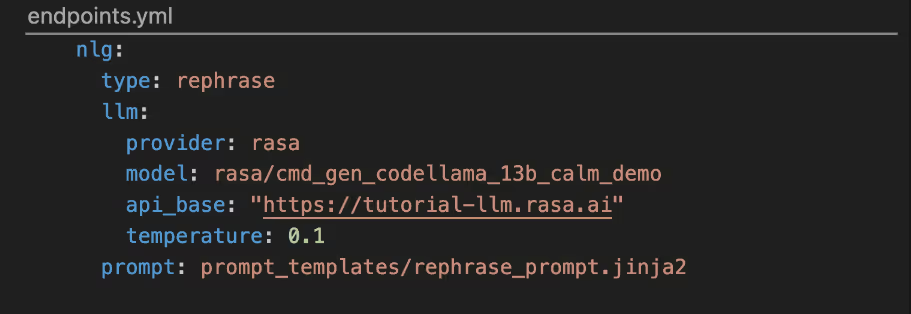

If you’d like to try out smaller models for your assistant’s Contextual Rephrasing tasks, you can use the configuration variation below. This configuration, inside the assistant’s endpoints.yml file, will rephrase the responses using Rasa’s fine-tuned Llama 13B model:

If you’d like to configure other models available through HuggingFace or other platforms, check out the documentation for instructions on configuring these components.

Share your experience with us!

Finding the models that strike the best balance between performance, efficiency, and cost-effectiveness often takes some experimentation. Have you tried using small language models for your CALM assistant? We’d love to hear about your experience on Rasa’s developer forum.