With this blog post, I want to illustrate the concept of “getting out of your bubble” in order to facilitate user-centric design, and share some tried and tested methods of bootstrapping Conversational AI projects that we recommend to the enterprise teams we work with. In the next few paragraphs, I’ll cover some key concepts that include team set-up, scope definition, prototyping, and preparation for the first launch of your AI Assistant.

The original CDD blog post ends with a provocative postscript:

Shipping without having had lots of testers has never worked and your project won't be the exception.

Bootstrapping -- going from nothing to the first version of your assistant - is probably the most contentious part of CDD. You need to iterate based on user feedback, but that doesn’t mean you should move fast and break things and put something half-baked in production.

On the contrary, practicing CDD from day one is essential if you want to avoid a disappointing initial launch. There are many techniques and strategies for getting feedback on early prototypes of your chatbot that don’t involve putting something in production. There are many ways to get out of your bubble before your initial go-live.

CDD asks that you acknowledge that you won’t know how users interact with your bot until you find out. It does not mean you should put a terrible bot in production and “just learn”.

The Conversational Team

CDD is a process for building mission-critical conversational AI, which means you should think of your assistant as a product, not a project. That starts with having the right team in place.

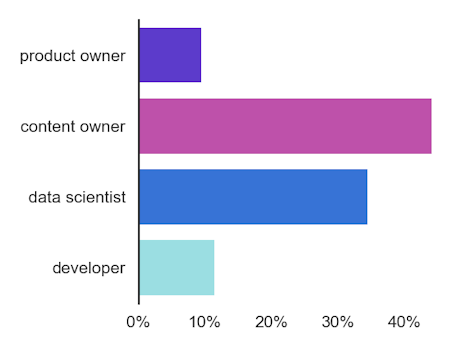

It’s a rapidly evolving field and the market has yet to consolidate on a consistent set of job titles. At Rasa we work with five essential roles/personas to describe the core functions of a conversational AI team - note that these don’t always map 1:1 to individuals and their job titles.

Essential Team Roles

At a bare minimum, you need one product/UX-minded person and someone focused on technology. The product person will drive discovery, prototyping, user testing, design, etc. The tech person will implement integrations with backend systems (like fetching user profile information, or taking actions on behalf of the user), and set up the NLU model and dialogue manager.

This picture deliberately doesn’t specify who, in a minimal team, acts as the annotation lead — driving your process for providing feedback on your model’s predictions to make your ML more accurate over time. Having polled a number of teams, there’s roughly a 50/50 split of whether product or engineering is ultimately responsible for training data quality & the annotation process. Your intent taxonomy is a key interface between these teams, so ideally both contribute to the necessary annotation work. But one person should be directly responsible. As a team scales, ensuring consistent use & understanding of intents & other primitives is a major factor. Scaling beyond a midsized team will be covered in the final post in this series.

Team collaboration

Shared assets are an effective tool to improve collaboration & reduce confusion across teams.

Intent taxonomy

Anyone on your team should be able to look up an intent defined in your assistant and find out:

- What are some typical user messages that correspond to this intent?

- Where is it used in conversations?

- Are there similar intents that exist? What’s the difference between this intent and those similar ones?

A documented intent taxonomy can make it much easier to onboard new members to your team. It’s also very helpful for annotating data in a large assistant. When you have hundreds of intents defined, it’s very difficult to keep these all in your head and know how to label incoming user messages. When two annotators disagree, the documentation should provide clarity.

Test conversations

Test conversations are another great asset that can play multiple roles. They describe, in a format anyone can understand, the conversations your assistant can have with users. When used as acceptance tests, they guard against regressions in your model - you can rest assured that when you make updates to your assistant, you haven’t broken existing conversations. They’re also an unambiguous way to specify exactly how your assistant should behave. While a detailed flow chart describing all of your business logic may make sense to you & your team, it will likely be very hard to parse for other stakeholders like compliance, legal, and marketing.

There are many other types of design documentation that teams use to create alignment; intent taxonomies and test conversations are just two examples. But more important than the assets themselves are the conversations they facilitate. If an asset is meant to make an annotator’s job easier, it needs to be available to them when annotating. If a test conversation is intended to communicate requirements to a developer, it needs to be part of the development lifecycle. Choose 1 or 2 assets that make sense for your team and incorporate them into your weekly team rituals to spur important conversations and keep your documentation from going stale.

Discovery

Discovery is a crucial step. It increases confidence across the team that the opportunity being pursued is a genuine one and that everyone is aligned on expectations.

At the end of the discovery phase, everyone on your team should be able to answer the questions below (hint: this means it should all be documented!). Even getting to an initial set of answers will take many conversations with, for example, the web and mobile product teams, the customer service org, marketing, legal, etc. It’s also a great opportunity to get out of your bubble - and don’t forget the most important stakeholders of all - your customers who will be interacting with the bot.

Where will users interact with our AI assistant?

- An in-app chat experience for logged in users

- A chat widget on our landing page for unauthenticated users

- Integrated into our IVR

What does the full journey look like for a user interacting with our brand? Are there actions the user will take in our app or website before or after this conversation? Will we follow up via an email/phone call/letter?

What KPIs will we track to determine this product’s success?

Which customer segments will be interacting with the assistant? For example, in-app traffic will usually have a very different demographic composition from your IVR system, but note that your existing customer segments may not translate to your conversational AI users. Generative UX research can help you discover these.

What are the first use cases we aim to tackle? Which user goals will the bot support? Take a dozen or so user messages pulled from your support logs as examples of what problems you’ll be helping customers resolve. This is a great exercise to focus everyone’s minds on actual customer problems over hypothetical ones.

What is the tone of voice our assistant will use? What is the personality we’re trying to create that reflects our brand?

What backend systems will our assistant need to interact with?

- Where does the bot need the ability to read and write data? Will that happen via APIs or using RPA?

- Which geographies and languages will we support?

(How) will our AI assistant pair with human agents to solve our customer’s problems?

- All conversations start with the bot, escalating to humans as needed.

- Users are given the choice to opt in to using the bot while waiting for a human to become available (not that common but very effective!)

- The bot will suggest answers to human agents

How will we be handling user data?

You may answer many of these questions with “we’ll start with X, then expand to Y”, which already sounds a lot like a high-level roadmap. While waterfall-style planning of what you’ll be building in 6-12 months’ time is unlikely to reflect reality, a “now, next, later” style roadmap provides the whole team with an outlook of what challenges are coming down the pike (and importantly, which ones aren’t). If you don’t make expectations explicit and documented, everyone will come up with their own.

Prototyping & Early Feedback

Getting feedback on early prototypes of your chatbot is vital. Fortunately, conversation design is much more established than it was a few years ago, and a few excellent books have been written on the subject. A welcome consequence of that shift is that more teams take prototyping seriously. It’s probably the most crucial “get out of your bubble” experience in the whole journey.

Here are some of the things you can expect to learn via prototypes:

- Your users’ linguistic style - use of slang & emojis, spelling, etc. - varies by device (computer & smartphone keyboards are very different) and by segment.

- Your customers do not use the “correct” names for your products and services.

- Your customers do not know the answers to some of the key questions in your business logic.

- Intents that you thought were well-defined actually aren’t. For many messages your bot receives, it’s not easy for the team to know (or agree) which intent it belongs to

- Your customers start conversations with long messages containing a great deal of background information.

- Many things you expected users to say, and paths you expected they would go down, simply don’t happen.

Another valuable outcome of your prototyping phase is an initial training data set. It’s often unavoidable that the NLU data and dialogue paths you use to build your prototypes are the product of your team’s intuition, and that’s a fine way to bootstrap. But now you have your first bit of real end-user data, and that should absolutely be used to build the next iteration of your assistant as you prepare for go-live. The next post in this series is about iterating on that initial assistant and discusses training data in more detail.

Techniques

Remember that the purpose of prototyping is to reduce uncertainty as much as possible. You’re not trying to prove the existence of the Higgs’ boson. You’re looking for big, obvious signals that will inform your design & implementation. Refining what you’ve built based on user interaction is also crucial and will be covered in the next post. For now, focus your energy on the big things.

Paper prototypes

It’s a very useful exercise to write down example conversations illustrating how you expect conversations with your bot to go. Showing these to some internal stakeholders is a great way to create alignment - reading a few example conversations is much easier than asking people to grok a flow chart representing your business logic. It also gives you a chance to get feedback on tone of voice, and inspiration for simplifying the experience. “I like”, “I wish”, “I wonder” is a great framework to ask for feedback on example conversations.

If you’re building an audio experience, paper alone isn’t enough. You’ll want to supplement this with recordings of the conversation or a reading the transcript aloud together.

Wizard-of-Oz testing

WOZ testing - having a human pretend to be the bot - can be a very effective tool for validating the feasibility of an idea. After all, if the user experience isn’t good when it’s powered by a human brain, it’s unlikely to be any good when powered by AI.

There are two common ways to set up a WOZ test. The first is to constrain the wizard to the use of a fixed set of templated bot messages that you’ve designed. The second approach is to allow the wizard to write their own free-form responses. These approaches are complementary: one evaluative, one generative. In either case, be sure to ask the user for feedback before ending the conversation. It’s a unique and valuable thing about conversational AI that you can natively slot feedback requests into the core product experience.

Lo-Fi implementations

A low-fidelity implementation of your bot is usually a 2nd or 3rd step in the prototyping process and is one where you start to get some higher levels of realism. A lo-fi bot usually mocks any API calls with hard-coded data, only supports a couple of user goals and a handful of intents. The purpose here, again, is to learn about user behaviour, not to prove that your bot is ready for prime-time. So you might configure things a little differently from how you would in production. For example, while it’s generally advisable to trigger a fallback response when your bot has low confidence about what to do next, it’s usually better to switch this off while doing user research. You gain more information by letting the bot make mistakes.

Audiences

Who do you recruit to interact with your prototypes? Your goal is to get out of your bubble, so testing within your team, where everybody already knows what the bot is supposed to do, is not especially helpful. Recruiting testers from other departments inside your organisation is far from perfect, but already better. These testers won’t know how the bot is supposed to work, but they are still fluent in the jargon of your organisation. Using a crowdsourcing platform to recruit testers can also be useful, so long as you remember that paying people to pretend they have a problem to resolve with a bot is very different from people who actually have a problem to solve. The context and the stakes are completely different and you will see that reflected in behaviour. Recruiting real customers to interact with prototypes will give you the most meaningful feedback. It’s worth fighting for the opportunity to do this!

Remember that the instructions you give to testers will impact their behavior. Describe the task you would like them to compete by interacting with the bot, but try not to use phrases that they are likely to use in the conversation.

Setting expectations

In large organizations, there is often a reluctance to recruit testers from outside the conversational team because of the reputational risk that comes with a potentially bad experience. It’s important to appropriately frame the interaction. Are you conducting user research to evaluate an idea, or are you doing usability testing of a product that’s on track to go live. Setting these expectations clearly is an appropriate way to elicit the type of feedback you are looking for, and it’s important to weigh up the risk of not doing testing. Avoiding user testing for fear of blowback is one way to guarantee that your initial launch won’t go well.

Building confidence for a launch

So, we’ve done discovery, user research, prototyping, and combined everything we’ve learned into a first implementation. How do we know when it’s ready to go live? There are a number of things you can do to build confidence in your go-live.

Set clear expectations with end users

- By now, you really should have recruited some test users from your target group to interact with the first implementation of your bot. Use the context around the bot, as well as the opening message, to set expectations about what the bot can and cannot handle.

Ensure you have the ability to turn the bot off (partially or completely)

- In case of any major surprises, it’s good practice to have some or all of your bot functionality behind a feature flag, so that you can turn it off without having to redeploy anything.

Ensure you have a CI/CD pipeline set up with automated test coverage of the most important flows.

- If you need to ship a hotfix, you want to be sure that this won’t introduce any regressions. Automated tests provide those guardrails.

Ensure your model evaluations are valid

- Your prototping phase produced some real end-user data which you can use to see how your model really performs. Evaluating your model purely on synthetic data will mislead you in to thinking your model is much better than it actually is. Ensure that you’re evaluating your NLU model against real messages that users have sent or spoken to your prototypes, even if that dataset is relatively small.

Ask users for feedback

- Ask users if you solved their problem and if appropriate ask them to elaborate. Only a small percentage of your users will actually provide this kind of feedback, but it’s still an important signal to tell you how you’re doing.

Have a process in place for continued iteration of your assistant, and connect the dots between your efforts and the KPIs you are tracking.

- That’s what the next post in this series is about!