This guide will briefly highlight how Rasa's NLU models can be hosted as an independent API. We'll also go the extra mile by showing you how you can wrap it all in a customisable docker container.

Rasa NLU as an API

When you run `rasa train` from the command line, two models will get trained. The first model is the NLU model, which predicts the intent of the message as well as entities in the user message. The second model is the dialogue model. This model predicts the following action in a conversation.

The Rasa assistant needs both models to function. But nothing is stopping you from re-using just the NLU. You could take just the trained NLU model and use Rasa to serve it.

You can also use Rasa to only train an NLU model. The command below starts up the Rasa starter project and trains just the NLU pipeline.

Shellcopy

rasa init

rasa train nlu --fixed-model-name specific-model-name

Once the training completed, you should see a file specific-model-name.tar.gz appear in the models folder. You can run this model, as a service, via the rasa run command.

Shellcopy

rasa run --enable-api --model models/specific-model-name.tar.gz --port 8080

You now have a server running that can predict user messages over HTTP. You can confirm by running this curl command.

Shellcopy

curl --request POST \

--url http://localhost:8080/model/parse \

--header 'Content-Type: application/json' \

--data '{"text": "i am doing great"}'

The response will look something like this;

jsoncopy

{

"text": "i am doing great",

"intent": {

"id": -4491517768022582880,

"name": "mood_great",

"confidence": 0.9999957084655762

},

"entities": [],

...

}

This is already pretty useful, but if you'd like to host this service, you'd probably want to dockerize it first.

Rasa from within Docker

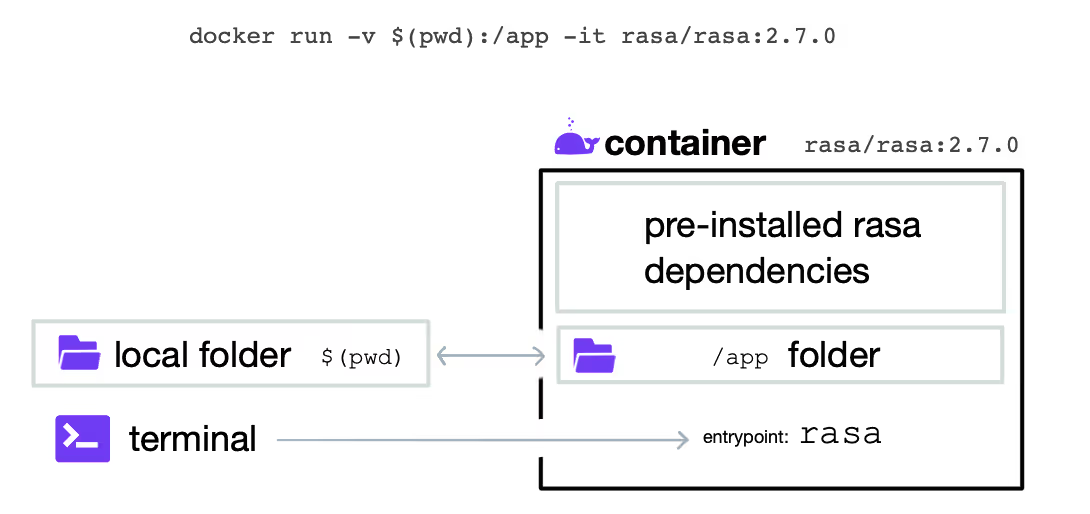

Rasa maintains a set of convenient Docker containers to help you get started. You could, for example, use Docker as a method to omit virtual environments. When you run rasa from the terminal, you're pointing to Rasa's version in your virtual environment. Instead, you can also have Rasa run from inside of a preconfigured Docker container.

Let's consider the following command.

Shellcopy

docker run -v $(pwd):/app -it rasa/rasa:2.7.0

This command does a few things. So let's go over everything.

- This command tells Docker to run the

rasa/rasa:2.7.0container locally. If the container isn't available locally, it will try to download it first. The name of the container follows a/:convention. You can find many more containers from Rasa hosted on dockerhub that use the same name convention. - The

rasa/rasa:2.7.0container is relatively light. It comes without any optional Rasa dependencies. We could choose to userasa/rasa:2.7.0-main-spacy-deinstead, which carries the extra spaCy dependency and a spaCy model for German. You might also chooserasa/rasa:2.7.0-main-fullif you want all the optional dependencies. You can learn more about the available pre-made containers on our docs. - The

-itflag tells docker to run in interactive terminal mode. That means that when the container runs, you'll be able to interact with what is running inside of the container as if it were your terminal. - We also attach a volume to the container via

-v $(pwd):/app. The$(pwd)command will evaluate to the current working directory outside of the docker container. This will be linked to the/appfolder inside of the container. This means that the container will have access to the trained models in themodelsfolder.

Let's now consider what happens when we actually run the command.

Shellcopy

> docker run -v $(pwd):/app -it rasa/rasa:2.7.0

usage: rasa [-h] [--version]

{init,run,shell,train,interactive,telemetry,test,visualize,data,export,x}

...

Rasa command line interface. Rasa allows you to build your own conversational assistants 🤖. The rasa command allows you to easily run most common commands like creating a new bot, training or evaluating models.

When you run the container, it looks like you're calling rasa from the command line directly! That's because the container is configured to have rasa as an entrypoint. You're not calling the version of Rasa that's connected to your virtual environment, you're connecting to the version of Rasa that's inside of the container.

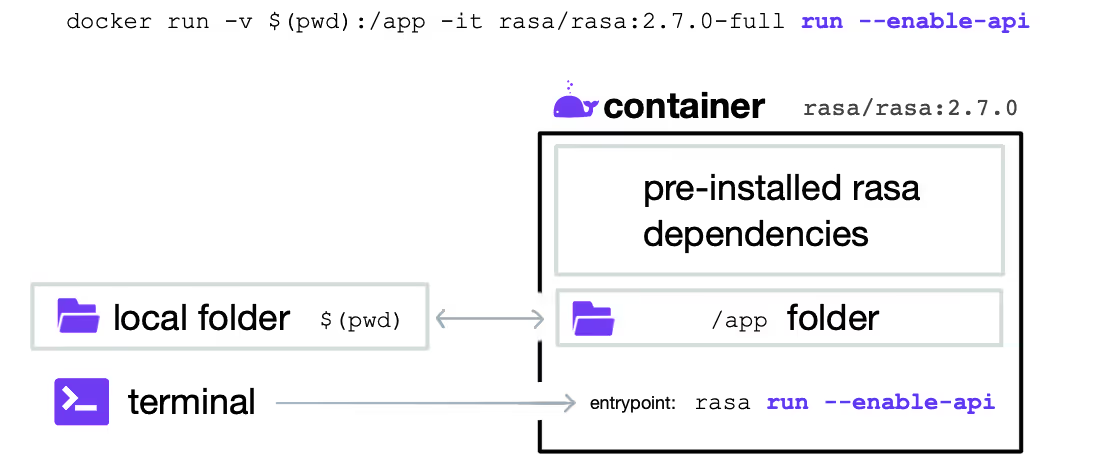

That also means that you can run the following command if you serve a NLU model.

Shellcopy

docker run -v $(pwd):/app -it rasa/rasa:2.7.0 run --enable-api

Inside the container, since rasa is the entrypoint, this command will run rasa run --enable-api-command. Since the docker container has access to your local /modelsfolder, this command will serve a model from within the container.

We're not there yet, though. The container will be running our service, but there's no way to communicate with the outside world yet.

Shellcopy

docker run -it -v $(pwd):/app -p 8080:8080 rasa/rasa:2.7.0 run --enable-api --port 8080

The -p 8080:8080 links port 8080 on your local machine to the port on the docker container. That means that you'll be able to query the model via curl again.

Shellcopy

curl --request POST \

--url http://localhost:8080/model/parse \

--header 'Content-Type: application/json' \

--data '{"text": "i am doing great"}'

Making a Custom Container

We just ran the container locally, which is great, but it depends on the files and folders that we have on our local machine. Without a trained model on disk, the container won't be able to serve a model. If you want to launch the container as an application, you'd prefer the container to be functioning independently of the disk state.

So let's make our container that has all the functionality we like. Let's customize the config.yml file a bit, and let's write our own Dockerfile to construct the container.

Let's start by changing the config.yml file. Let's say that we want to add the large spaCy model for English to our NLU pipeline.

YAMLcopy

language: en

pipeline:

- name: SpacyNLP

model: en_core_web_lg

- name: SpacyTokenizer

- name: SpacyFeaturizer

- name: LexicalSyntacticFeaturizer

- name: CountVectorsFeaturizer

- name: CountVectorsFeaturizer

analyzer: char_wb

min_ngram: 1

max_ngram: 4

- name: DIETClassifier

epochs: 100

constrain_similarities: true

Let's now build the Dockerfile that can train this pipeline. We will construct this pipeline from scratch, so we will first install Rasa with spaCy and download the en_core_web_lg model. After that, we can copy our local files into the container so that it can train. Once the training is done, we wrap up by telling the container how to run itself.

Shellcopy

# use a python container as a starting point

FROM python:3.7-slim

# install dependencies of interest

RUN python -m pip install rasa[spacy] && \

python -m spacy download en_core_web_lg

# set workdir and copy data files from disk

# note the latter command uses .dockerignore

WORKDIR /app

ENV HOME=/app

COPY . .

# train a new rasa model

RUN rasa train nlu

# set the user to run, don't run as root

USER 1001

# set entrypoint for interactive shells

ENTRYPOINT ["rasa"]

# command to run when container is called to run

CMD ["run", "--enable-api", "--port", "8080"]

Why not use the rasa/rasa-spacy container?

Technically, we could have also used the rasa/rasa:2.7.0-main-full or the rasa/rasa:2.7.0-main-spacy-encontainer as a starting point instead of python:3.7-slim. So why didn't we?

A minor reason is that this keeps the container more lightweight. The full container comes with optional dependencies that we don't need, like huggingface. The encontainer comes with a en_core_web_mdmodel while we're building one with en_core_web_lg.

The main reason for starting from scratch is that the goal of this blogpost is to show how you can fully customize your container for NLU prediction. After all, you may also want to add custom components that depend on external python libraries so a custom container might be an easier starting point for some use-cases.

Before building this container, it'd be good to add a .dockerignore file to our folder. This file is very similar to a .gitignore file because it defines files that should not be included. We don't want our virtualenv to be copied into the container. We also don't need any local models to be copied in. Here's what our .dockerignore looks like:

Shellcopy

tests/*

models/*

actions/*

**/*.md

venv

With our files set up, we can start building our Rasa NLU container.

Shellcopy

docker build -t koaning/rasa-spacy-example .

This command builds the container by running all the steps defined in the Dockerfile. We also add a tag (via -t) to our container to have a name, koaning/rasa-spacy-example, that we can refer to when we try to run the container.

Shellcopy

docker run -it -p 8080:8080 koaning/rasa-spacy-example

Once again, you can use curl to send web requests to your container. You could even go a step further now and run this container on a cloud service. The nice thing about our container is that it contains a trained model and can serve NLU predictions without any other dependencies. For example, you could send the container to Google Cloud so that services like Cloud Run can run it on your behalf.

Shellcopy

docker tag koaning/rasa-spacy-example gcr.io//rasa-nlu-demo

docker push gcr.io//rasa-nlu-demo

There are many options, though. Everycloudprovider has its way of hosting docker containers, and you could even consider running your containers on a VM or Kubernetes.

Conclusion

In this tutorial, we've seen how to make custom docker containers for Rasa NLU models. This is great because it allows us to re-use components from our virtual assistant for other use-cases.

If you're considering this approach, we recommend keeping the following things in mind.

- A trained Rasa NLU container can get quite heavy depending on the configuration that you've used. The

en_core_web_lgspaCy model is 700 MB, so pushing/pulling this container might take a while. You may also need to provision your containers with extra compute resources if you use heavy-weight models. - If you're using a Rasa NLU container in a serverless setting like Cloud Run, you should also be aware that it can take a while to spin up the container. Typically you don't pay for a serverless service if no requests come in because the service will go into a "cold state". Sending a single request will move the service into a "warm state," but the first request sent will receive a slow response because the container needs to be spun up. Again, if you're building a heavy container, this may result in the first request having a response time of seconds, not milliseconds.

- Running a Rasa NLU container is great, but it's not a complete service. You'll have a service that can independently make predictions, but you won't have a feedback mechanism. If you're going to re-use the NLU model, we recommend frequently syncing back with the labeled data that you receive via Rasa X.