TL;DR

As the hype around LLMs continues, everyone’s scrambling to figure out how to make LLM-powered chatbots actually useful and practical, especially for enterprise applications. Fully unstructured LLM agents promise flexibility, but many developers find them too unreliable for production applications.

While studying different approaches, we found that semi-structured approaches that separate conversational ability from business logic execution strike the necessary balance, providing reliable and consistent results without sacrificing flexibility. In this post, we’ll dig into the different methods and compare them, guided by examples of how the different types of assistants perform. As a starting point, we used the LangChain (LangGraph) example from their customer support assistant tutorial.

We ran experiments and automated tests to measure cost and latency per user message for two different semi-structured approaches:

- CALM¹ separates conversational ability from business logic execution

- LangGraph combines conversational ability with business logic execution

All experiments were powered by GPT-4. The CALM approach had a significant edge in terms of response time and operational costs. The code to reproduce everything in this post is here.

For instance, across our experiments, the LangGraph assistant incurs a mean cost of $0.10 per user message, more than 2 times the CALM Assistant’s mean cost of $0.04. Across experiments where the user changes their mind, the mean cost for LangGraph is $0.18, a 4.5-fold increase over CALM’s $0.04. Thus, separating conversational ability from business logic results in a cost reduction of up to 77.8%.

Similarly, LangGraph’s mean latency across experiments was 7.4 seconds per user message, a nearly 4-fold increase over CALM’s 2.08 seconds. When optimized with NLU², CALM’s mean latency was reduced to 1.58 seconds. Once again, across experiments where the user changes their mind, the mean latency for LangGraph was 10.1 seconds, a nearly 6-fold increase over CALM’s 1.72 seconds, and CALM + NLU got us down to 1.41 seconds.
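The headline ratios follow directly from the reported means. As a quick arithmetic check, this minimal sketch recomputes them using only the GPT-4 figures quoted above (the dictionary layout and function names are illustrative):

```python
# Mean per-user-message figures quoted in this post (GPT-4 in all runs).
COST_USD = {
    "all": {"langgraph": 0.10, "calm": 0.04},
    "user_changes_mind": {"langgraph": 0.18, "calm": 0.04},
}
LATENCY_S = {
    "all": {"langgraph": 7.4, "calm": 2.08},
    "user_changes_mind": {"langgraph": 10.1, "calm": 1.72},
}


def fold(metric: dict) -> float:
    """How many times larger LangGraph's mean is than CALM's."""
    return metric["langgraph"] / metric["calm"]


def reduction(metric: dict) -> float:
    """Relative saving (in percent) from moving LangGraph -> CALM."""
    return 100 * (1 - metric["calm"] / metric["langgraph"])


for scenario in COST_USD:
    print(f"{scenario}: cost {fold(COST_USD[scenario]):.2f}x "
          f"(-{reduction(COST_USD[scenario]):.1f}%), "
          f"latency {fold(LATENCY_S[scenario]):.2f}x")
```

For the change-of-mind scenario this prints a 4.50x cost ratio, which is where the "up to 77.8%" figure comes from (1 - 0.04/0.18), and a 5.87x latency ratio.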

We also saw that CALM is far more reliable and consistent in its responses. In contrast, the LangGraph approach lacked consistency and reliability in the conversations, interactions, and execution of required tasks (take a look at the screenshots in this post to get a sense of these mistakes).

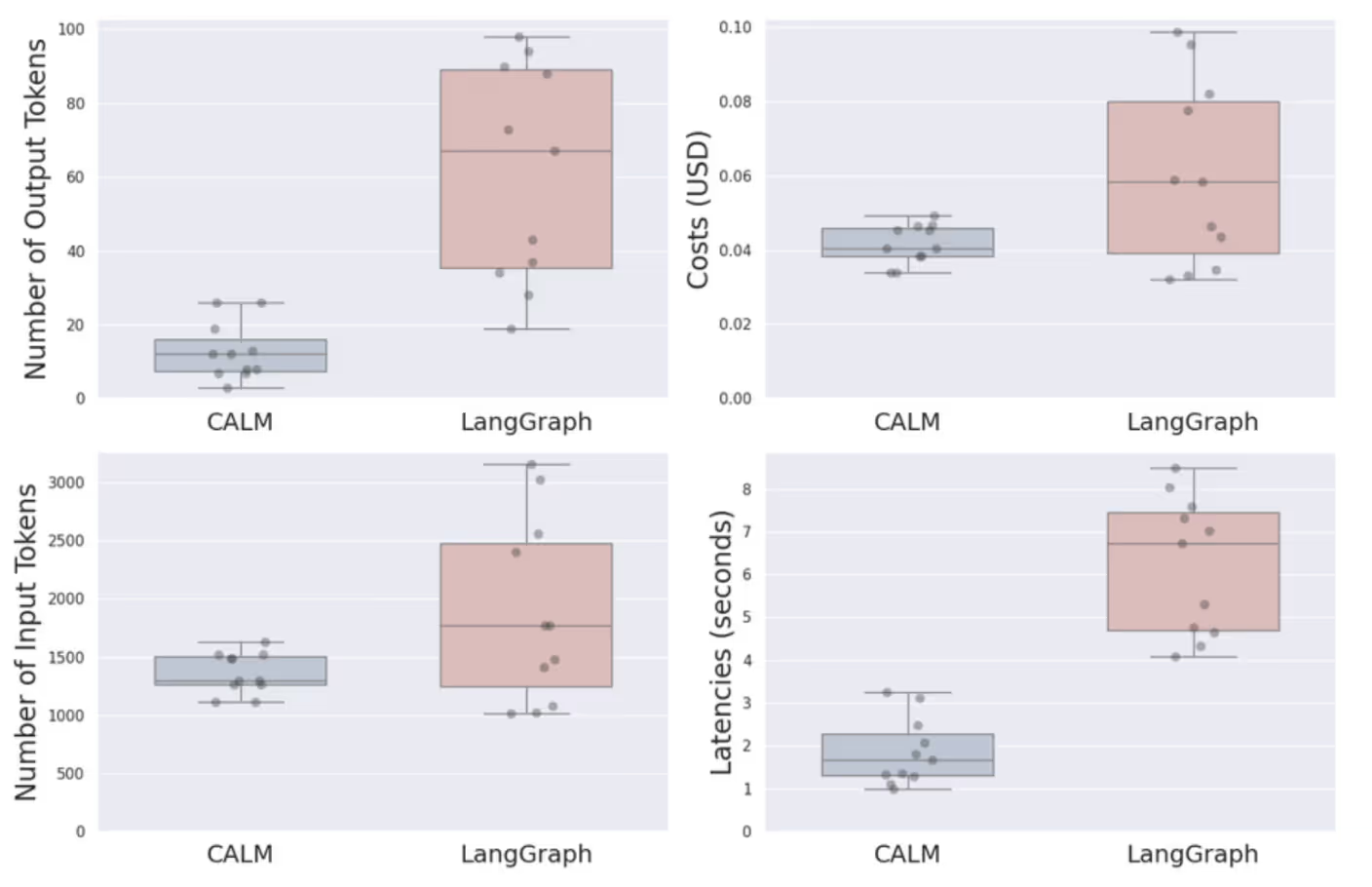

Caption: Box plots and beeswarm plots comparing "CALM" and "LangGraph" assistants across key metrics. The plots show completion tokens, costs ($/conversation), input tokens, and latencies (seconds). The line in the middle of each box represents the median. Beeswarm points show individual data points, revealing distribution density. Lower values indicate better performance for all metrics. If you're wondering what these comparisons are based on, please read the rest of the post for context and methodology. This visualization offers a comprehensive view of the two systems' performance, highlighting differences in efficiency and resource usage.

Mixing conversational ability and business logic is like trying to enjoy a 3-course meal by putting it all in a blender: once everything is blended, the entropy of the system increases, and we can no longer pick out our business logic (the main course, in our tasty metaphor). Let’s jump in!

Introduction

The conversational AI landscape is in a state of flux. We've moved beyond simple rule- and intent-based chatbots (for a rich history, see here, here, and here), but the industry is still grappling with how to harness the power of large language models (LLMs) in a way that's genuinely useful and reliable for businesses. The million-dollar question isn't just how to build AI assistants but how to build them to deliver consistent value in real-world applications while adhering to crucial business logic. Let's dive in and compare the options.

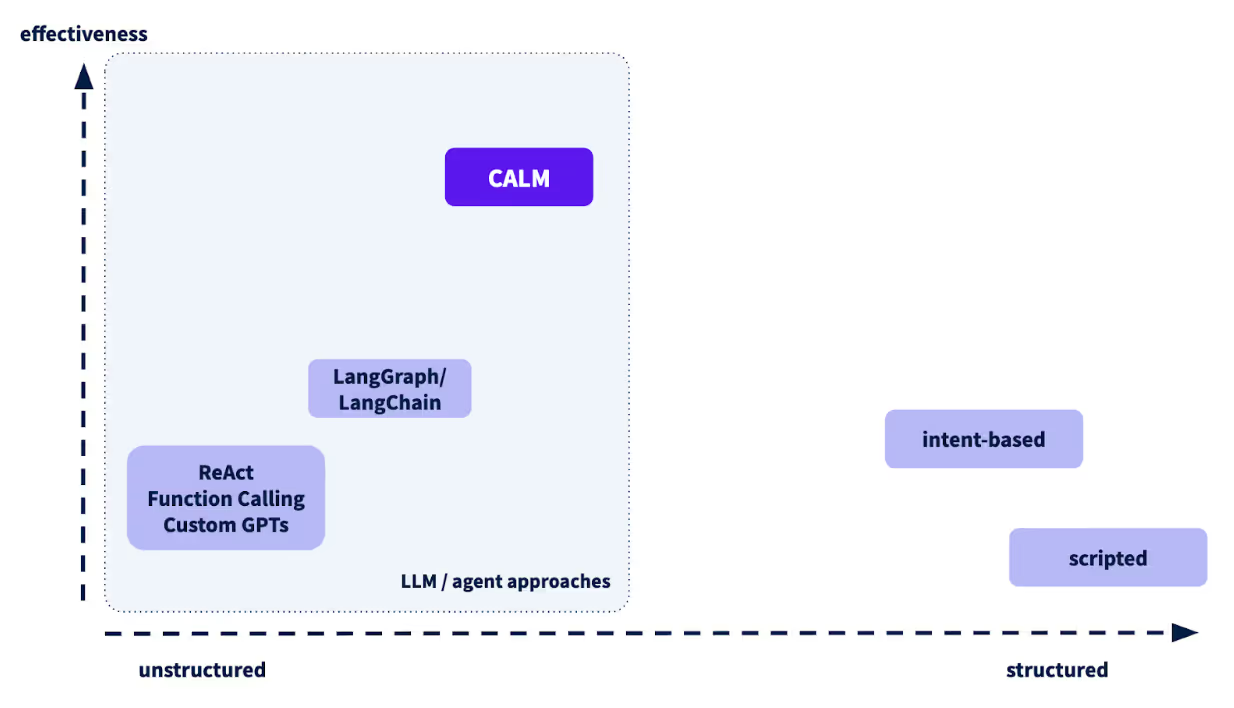

Two Dimensions of AI Assistants

When evaluating AI assistant frameworks for practical use, we need to consider two key dimensions:

- Structure (Unstructured to Highly Structured)

- Effectiveness (e.g., reliability, cost, performance, and ability to execute outcomes)

Caption: Comparative effectiveness of conversation management approaches. The figure shows CALM and CALM+NLU (Natural Language Understanding) positioned higher on the effectiveness scale compared to other methods like LangGraph. This visualization represents our analysis of how well each approach handles complex conversational scenarios and maintains contextual understanding. If you're wondering what this comparison is based on or how we arrived at these results, please read the rest of the post for full context and methodology.

This framework allows us to categorize different approaches and understand their strengths and weaknesses. Let's explore each category.

Fully Unstructured Approaches: Embedding Business Logic in System Prompts

Unstructured approaches, like those using raw large language models (LLMs), are the wild child of the AI world. They're incredibly flexible and can handle a wide range of queries. But here's the catch: they're about as reliable as a weather forecast. One minute, they're spot on; the next, they're telling you to pack sunscreen for a blizzard.

In a fully unstructured approach, such as ReAct or plain function calling, all business logic resides within a single system prompt (note: you can still have sub-agents, but all your true logic lives in a prompt). This approach leverages the flexibility of large language models, but often at the cost of consistency and reliability. Here's an example of what such a system prompt might look like for an AI assistant for an airline:
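A hypothetical sketch of such a prompt, written as a Python constant together with a helper that sends it on every turn. The rules and tool names (`lookup_booking`, `search_flights`, `change_flight`) are illustrative assumptions, not taken from the tutorial or any real deployment:

```python
# Hypothetical system prompt for a fully unstructured airline assistant.
# All business rules live in natural language; nothing outside the LLM enforces them.
SYSTEM_PROMPT = """\
You are a helpful customer support assistant for an airline.
Use the provided tools to look up bookings, flights, hotels, and car rentals.

When a user wants to change their flight:
1. Look up their current booking with the lookup_booking tool.
2. Search for alternatives with search_flights. Only offer flights that have
   not yet departed.
3. Ask the user to confirm the new flight before calling change_flight.

When booking a hotel, the check-out date must be after the check-in date.
Never book or change anything without explicit confirmation from the user.
"""


def build_messages(history: list[dict], user_message: str) -> list[dict]:
    """Assemble a chat request in the common role/content message format.

    The full rulebook is re-sent on every turn, and whether it is followed
    is left entirely to the model.
    """
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        *history,
        {"role": "user", "content": user_message},
    ]
```

Every rule here is a request, not a guarantee: the check-out constraint, for instance, holds only if the model happens to honor it on that turn. The prompt is also re-sent, and billed as input tokens, on every call.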

By following this prompt, the LLM will try to act as a helpful assistant, but due to the probabilistic nature of LLMs, its responses and behavior will vary from one conversation to the next, and it will often fail to follow the instructions correctly. This happens because you provide the instructions for each task (e.g., a flight booking) to the LLM in natural language. The LLM then acts as a smoothie maker, blending the instructions with its knowledge of the world, and much of the structure is lost.

Semi-Structured Approaches: Balancing Flexibility and Consistency

Semi-structured approaches aim to find a middle ground between the flexibility of LLMs and the need for more reliable, consistent responses. Frameworks like LangGraph fall into this category, offering tools to guide LLM interactions without fully constraining them.

One of the big challenges with these approaches is the lack of consistency and reliability in the conversations, interactions, and execution of required tasks. This shouldn’t surprise us, since we are still interacting with a single LLM assistant that is prone to hallucination, as the diagram in this LangGraph tutorial shows:

Caption: Figure from LangGraph’s Build a Customer Support Bot tutorial

To see how reliably such AI assistants can work, we tested the assistant from this tutorial (you can reproduce our work and have conversations with the assistant yourself by following the instructions in this GitHub repository).

We generated 5 conversations with the multi-skill LangGraph assistant using the same user prompts:

- Ask for our flight details

- Change the flight

- Book a car, hotel, and excursion

There was only 1 problem-free conversation out of 5, so you could say that this has a 20% accuracy at sticking to business logic. But our view is that the mistakes are so frequent and serious that the exact number doesn't really matter.

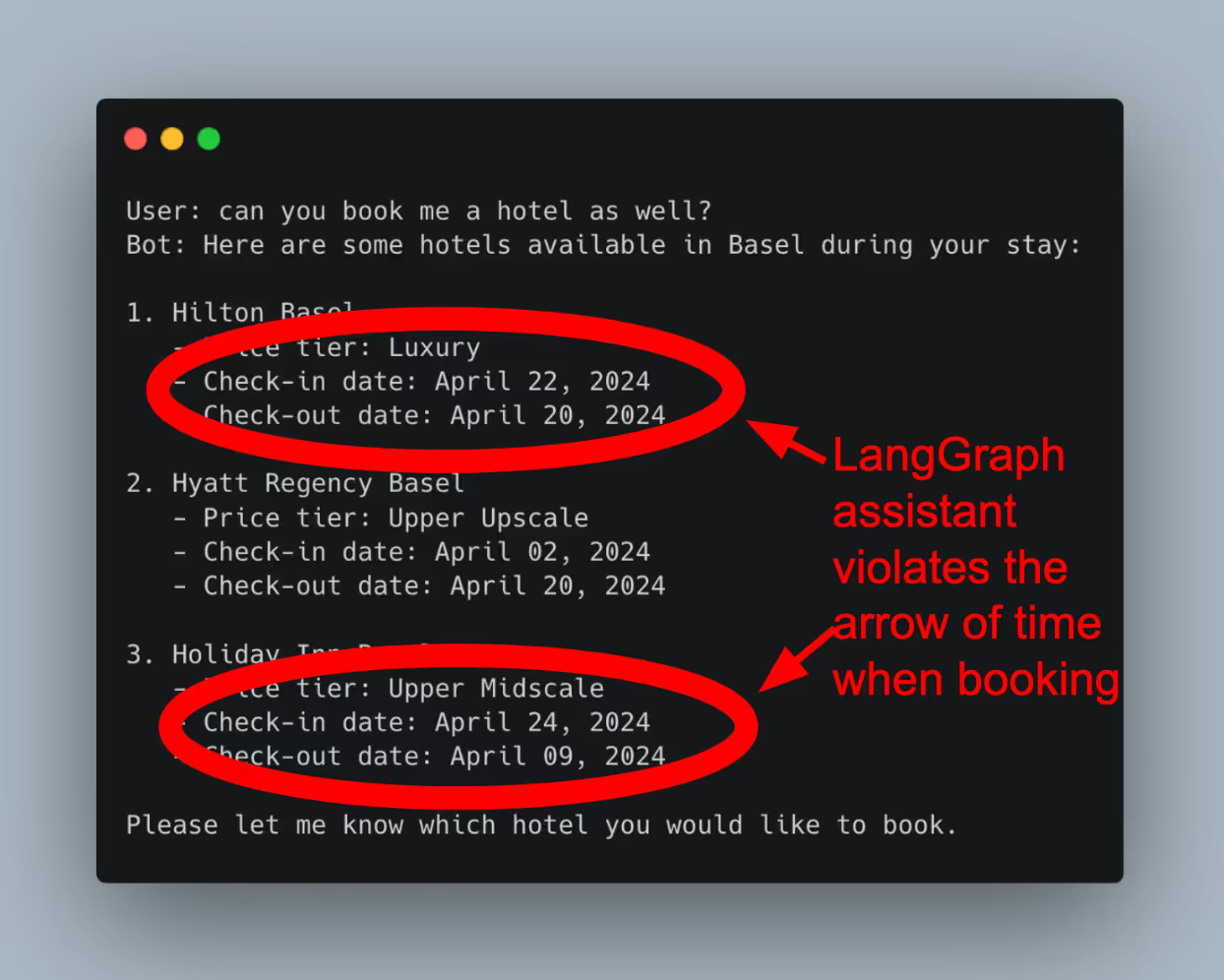

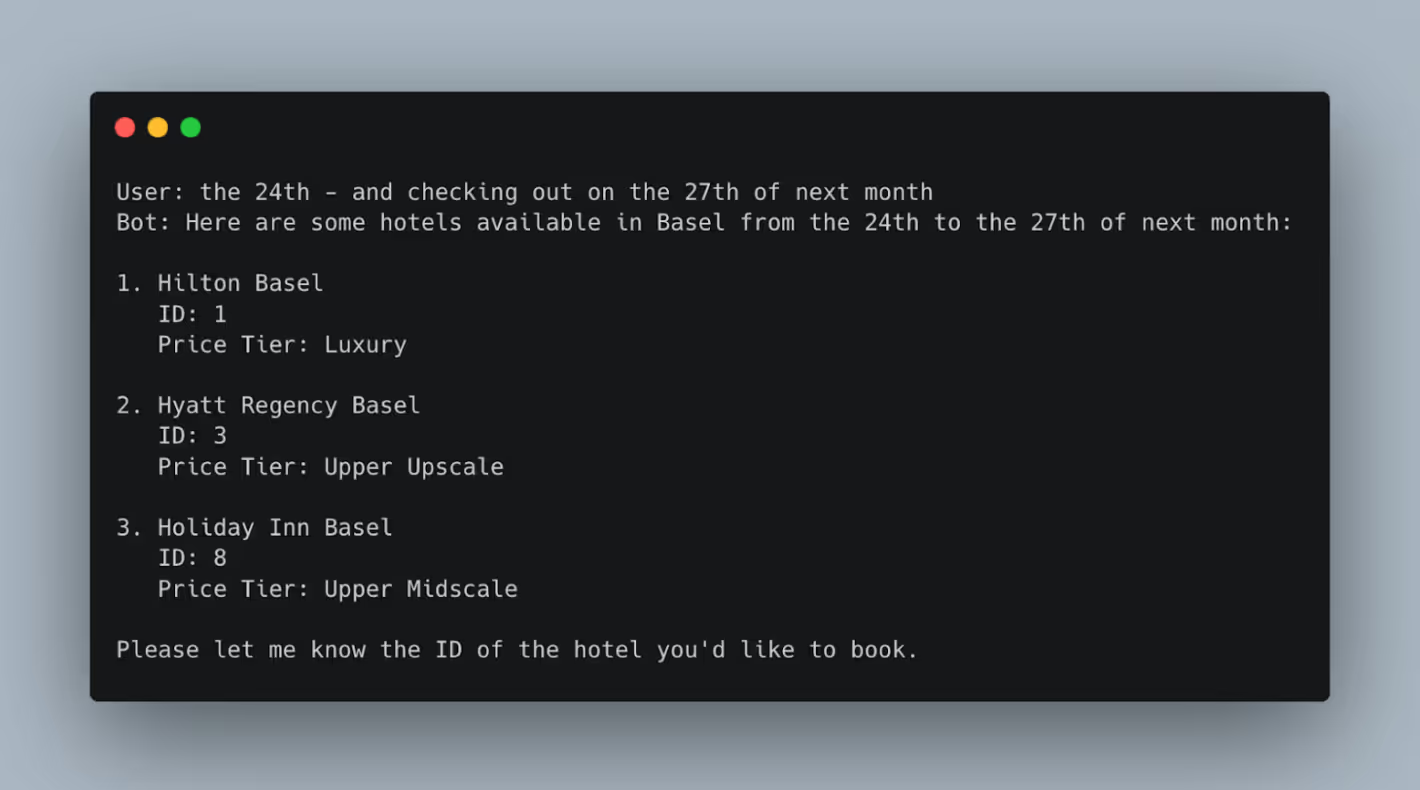

Even in a mere 5 conversations, there were major inconsistencies in the way information was presented and how the assistant wanted the user to respond (here, asking for the ID instead of the name):

This isn’t necessarily a dealbreaker, but it can hurt the user experience.

There is a total dealbreaker, however: the assistant offered hotel stays from April 22 to April 20 and from April 24 to April 9! It is unacceptable in an enterprise environment for AI assistants to offer trips that defy the arrow of time.

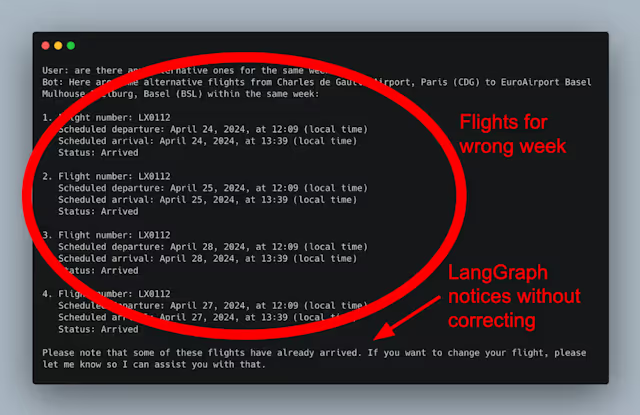

What’s worse is that when it books flights, it does so inconsistently. In the following, we’ve asked for our current flight details and requested alternatives for the same week:

Note that it gets the results out of order. Once again, it's not a dealbreaker. However, in another conversation (with exactly the same prompts), we get an additional flight (also note the different formats here):

In yet another conversation, the LangGraph assistant provides alternative flights for the wrong week and even identifies that some of these flights have already arrived:

Sadly, it gets worse. Sometimes, the Assistant will book the next flight correctly:

At other times, it will book the incorrect flight, even if the correct one was in the provided list. Once again, note inconsistencies in the format of reservation confirmation:

In a certain sense, this is by design: it follows from the probabilistic nature of LLMs! So we need a more reliable semi-structured approach that provides more guardrails than LangGraph while still leveraging the power of LLMs for what they are really good at. Businesses need consistency in their AI interactions but also want to handle all the different ways conversations can go.
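One simple kind of guardrail is a deterministic check that runs before any booking is executed, whatever the LLM proposed. The sketch below is hypothetical (`Flight` and `validate_flight_change` are illustrative names, not part of LangGraph or Rasa); it rejects two of the failure modes shown above: booking a flight that was never offered, and offering one that has already departed.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Flight:
    flight_id: int
    departure: datetime


def validate_flight_change(requested_id: int, offered: list[Flight],
                           now: datetime) -> Flight:
    """Deterministic guard to run before any booking tool call.

    Raises ValueError for flights the user was never offered or that have
    already departed, regardless of what the LLM asked for.
    """
    by_id = {f.flight_id: f for f in offered}
    if requested_id not in by_id:
        raise ValueError(f"flight {requested_id} was not among the offered options")
    flight = by_id[requested_id]
    if flight.departure <= now:
        raise ValueError(f"flight {requested_id} has already departed")
    return flight
```

Because the check is ordinary code, it gives the same answer on every run, which is exactly the property the transcripts above were missing.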

To do this, we use many of the rich capabilities of pre-LLM conversational AI assistants alongside all the wonderful aspects of LLMs. All our lessons from working with Fortune 500 companies here at Rasa still hold, although the technological landscape has shifted dramatically. One of the most important lessons is that we need our AI assistants to adhere to business logic.

Understanding Business Logic in Conversational AI

When we say “business logic,” we’re not talking about a rigid dialogue tree. We’re talking about the steps the assistant needs to take to complete a task. This includes:

- Information Gathering: Collecting necessary data from users or APIs.

- Decision Points: Making choices based on available information.

- Process Flow: Following a sequence of steps to complete tasks.

- Constraints and Validations: Ensuring the AI operates within set parameters.
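To make these four ingredients concrete, here is a minimal sketch of a hypothetical hotel-booking step function. The slot names and action strings are illustrative assumptions, not CALM syntax; the point is that each ingredient is plain code rather than prompt text.

```python
from datetime import date

# Hypothetical hotel-booking flow: each step is ordinary code, not prompt text.
REQUIRED_SLOTS = ("location", "check_in", "check_out")


def next_step(slots: dict) -> str:
    """Return the next action for the flow given the slots collected so far."""
    # Information gathering / process flow: ask for missing slots in a fixed order.
    for name in REQUIRED_SLOTS:
        if slots.get(name) is None:
            return f"ask_{name}"
    # Constraints and validations: a stay cannot end before it begins.
    if slots["check_out"] <= slots["check_in"]:
        return "reject_invalid_dates"
    # Decision point: only book after explicit confirmation.
    if not slots.get("confirmed"):
        return "ask_confirmation"
    return "book_hotel"


if __name__ == "__main__":
    # The impossible April 22 -> April 20 stay from the transcripts above.
    print(next_step({"location": "Basel",
                     "check_in": date(2024, 4, 22),
                     "check_out": date(2024, 4, 20)}))  # → reject_invalid_dates
```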

Implementing robust business logic ensures:

- Consistency: Predictable behavior across interactions.

- Accuracy: Correct task completion.

- Composability: Reliably re-using subtasks across use cases.

- Compliance: Adherence to regulations and policies.

- Efficiency: Streamlined processes and enhanced user experience.

Balancing Business Logic and Flexibility

Modern approaches like CALM balance structured processes with natural conversation flow (see our CALM tutorial, YouTube playlist, and research paper for further details). CALM does this by separating conversational ability from business logic execution: it offloads business logic, so the LLM doesn’t have to worry about it. This allows for flexible input handling, contextual understanding, and adaptive dialogue.
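The separation can be sketched in a few lines. This is a hypothetical illustration, not Rasa's API: it assumes the LLM's only job is to translate each user message into small commands (`SetSlot` and `CancelFlow` here), while a plain Python object owns the money-transfer logic and decides what happens next.

```python
from __future__ import annotations

from dataclasses import dataclass, field


# Hypothetical commands an LLM might emit after reading a user message.
@dataclass
class SetSlot:
    name: str
    value: object


@dataclass
class CancelFlow:
    pass


@dataclass
class TransferFlow:
    """Deterministic money-transfer logic: gather details, confirm, execute."""
    slots: dict = field(default_factory=dict)
    cancelled: bool = False

    def apply(self, command: SetSlot | CancelFlow) -> None:
        if isinstance(command, CancelFlow):
            self.cancelled = True
        elif isinstance(command, SetSlot):
            self.slots[command.name] = command.value
            if command.name != "confirmed":
                # Changing any detail invalidates an earlier "yes".
                self.slots.pop("confirmed", None)

    def next_action(self) -> str:
        if self.cancelled:
            return "utter_transfer_cancelled"
        for name in ("recipient", "amount"):
            if name not in self.slots:
                return f"ask_{name}"
        if self.slots.get("confirmed") is not True:
            return "ask_confirmation"
        return f"execute_transfer({self.slots['recipient']}, {self.slots['amount']})"


flow = TransferFlow()
# The user confirms a transfer of 100, then changes their mind to 50.
for cmd in [SetSlot("recipient", "Jane"), SetSlot("amount", 100),
            SetSlot("confirmed", True), SetSlot("amount", 50)]:
    flow.apply(cmd)
print(flow.next_action())  # → ask_confirmation
```

A change of mind is just another command; the LLM never has to remember that a changed amount needs re-confirmation, because the flow enforces it.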

For example, in a money transfer scenario, the AI gathers details, verifies them, and processes the transaction while accommodating user queries and changes. The assistant can respond appropriately when users change their minds, say ambiguous things, or have clarifying questions. This blend of structure and flexibility ensures that AI assistants deliver reliable and efficient service tailored to business needs. The CALM reimplementation of the LangGraph bot results in conversations like this:

All conversations with the CALM assistant will move through the business logic as above and will differ only in the type of chit-chat and rephrasing necessary to make the conversation more natural. To verify this across all conversations, we have built end-to-end tests that make sure the business logic is followed and the CALM Assistant passes all such tests. You can run the tests yourself by following the instructions in the README of our repository.
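The idea behind such end-to-end tests fits in a few lines. The harness below is a hypothetical sketch (`run_e2e_case` and the action names are illustrative; see the repository README for how the actual tests are written and run). Because the business logic is deterministic, the expected action sequence is fixed, so an exact comparison is meaningful even though the bot's wording may vary.

```python
from typing import Callable

# A "bot" here is any callable mapping a user message to the actions it took.
Bot = Callable[[str], list[str]]


def run_e2e_case(bot: Bot, turns: list[tuple[str, list[str]]]) -> list[str]:
    """Replay a scripted conversation and return descriptions of mismatches.

    An empty list means every turn produced exactly the expected actions.
    """
    failures = []
    for i, (user_message, expected_actions) in enumerate(turns):
        actual = bot(user_message)
        if actual != expected_actions:
            failures.append(f"turn {i}: expected {expected_actions}, got {actual}")
    return failures
```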

Semi-structured approaches, like CALM, aim to provide more consistent and reliable responses. However, it's important to note that structure doesn't mean rigidity. Modern structured approaches follow business processes faithfully without constraining users to a rigid dialogue tree. It's about finding the sweet spot between consistency and flexibility and using LLMs and deterministic systems for what they are great at.

Latency and Operational Costs

Let’s be very clear: reliability and consistency are table stakes for enterprise applications, and the LangGraph assistant lacks these. Other key concerns are cost and latency, so we explored these for several AI assistant approaches.

We looked at the following setups:

- Two semi-structured approaches: CALM and LangGraph

- An optimized semi-structured approach: CALM + NLU

We evaluated each approach based on:

- Speed and operational costs (crucial factors for real-world deployment)

Our testing process involved:

- Creating a set of common user scenarios and queries

- Implementing each scenario in all three approaches

- Running automated tests to measure cost and latency per user message
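Measuring cost and latency per user message (rather than per LLM call) matters because an agent loop may make several LLM calls for a single message. A minimal sketch, assuming a hypothetical adapter `send` that returns the reply plus token counts summed over all LLM calls for that turn; the prices are placeholder assumptions, so check your provider's current pricing:

```python
import time
from typing import Callable

# Placeholder prices in USD per 1K tokens (assumptions; substitute real pricing).
PRICE_PER_1K_INPUT = 0.03
PRICE_PER_1K_OUTPUT = 0.06


def measure_turn(send: Callable[[str], tuple[str, int, int]], message: str) -> dict:
    """Time one user message and price it from the token counts it reports.

    `send` is a hypothetical adapter returning (reply, input_tokens,
    output_tokens), with tokens summed over every LLM call made for the turn.
    """
    start = time.perf_counter()
    reply, input_tokens, output_tokens = send(message)
    latency_s = time.perf_counter() - start
    cost_usd = (input_tokens * PRICE_PER_1K_INPUT
                + output_tokens * PRICE_PER_1K_OUTPUT) / 1000
    return {"reply": reply, "latency_s": latency_s, "cost_usd": cost_usd,
            "input_tokens": input_tokens, "output_tokens": output_tokens}
```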

Key Findings

- Approaches that separate conversational ability from the execution of business logic, such as CALM, have a significant edge in terms of response time and operational costs.

- Such approaches showed higher consistency in following business rules and maintaining coherent conversations across interactions. They also balanced consistency and flexibility, offering more reliable responses than other methods while maintaining some adaptability.

- The enhanced semi-structured approach (CALM + NLU) maintained the same position as CALM in structure and reliability but showed improved speed and a slight advantage in cost due to fewer LLM calls.
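The routing idea behind CALM + NLU can be sketched as a confidence threshold: a lightweight NLU classifier handles inputs it is sure about, and everything else falls through to the LLM. This is a hypothetical illustration; the function names and the 0.9 threshold are assumptions, not CALM's configuration.

```python
from typing import Callable

# Assumed cut-off; in practice this would be tuned on real traffic.
CONFIDENCE_THRESHOLD = 0.9


def route(message: str,
          nlu: Callable[[str], tuple[str, float]],
          llm: Callable[[str], str]) -> tuple[str, str]:
    """Return (handler, result), where handler is "nlu" or "llm".

    The cheap NLU model is always tried first; the LLM is only called when
    the NLU prediction falls below the confidence threshold.
    """
    intent, confidence = nlu(message)
    if confidence >= CONFIDENCE_THRESHOLD:
        return "nlu", intent
    return "llm", llm(message)
```

Every message answered on the NLU branch skips an LLM call entirely, which is where the latency and cost savings come from.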

Speed and Cost

Our findings show that approaches separating conversational ability from business logic execution (CALM and CALM + NLU) have a significant edge in response time and operational costs, particularly for frequently performed tasks.

Across our experiments, we see that the costs and latencies of the LangGraph Assistant are higher and have significantly more variance. For example, in the multi-skill examples discussed above, we see the following:

In some cases, such as conversations in which the user wants to cancel something, we even see that the worst-case latency with CALM is still better than the best-case latency with LangGraph:

For plots of results for all our test cases, please see this notebook.
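The claim that CALM's worst case beats LangGraph's best case is a statement about the full distributions, not just their means. A minimal way to check it, and the spread, on your own per-message measurements (the function names are illustrative; the data would come from the notebook or your own runs):

```python
from statistics import mean, stdev


def summarize(latencies_s: list[float]) -> dict:
    """Mean, sample standard deviation, best and worst case for one assistant.

    Needs at least two measurements, since the sample stdev is undefined otherwise.
    """
    return {"mean": mean(latencies_s), "stdev": stdev(latencies_s),
            "best": min(latencies_s), "worst": max(latencies_s)}


def dominates(a: list[float], b: list[float]) -> bool:
    """True if a's worst case is strictly better than b's best case (lower is better)."""
    return max(a) < min(b)
```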

Across various use cases, we observe that the LangGraph assistant exhibits significantly higher costs and latencies than the CALM Assistant. For instance, across our experiments, the LangGraph assistant incurs a mean cost of $0.10, more than 2 times the CALM assistant’s mean cost of $0.04. Across experiments where the user changes their mind, the mean cost for LangGraph is $0.18, a 4.5-fold increase over CALM’s $0.04.

Similarly, LangGraph’s mean latency across experiments was 7.4 seconds, a nearly 4-fold increase over CALM’s 2.08 seconds. Once again, across experiments where the user changes their mind, the mean latency for LangGraph was 10.1 seconds, a nearly 6-fold increase over CALM’s 1.72 seconds. Overall, the LangGraph assistant’s metrics show greater variance, particularly in costs and latencies, across all use cases, highlighting the need for optimization to achieve more consistent and efficient performance. You can reproduce all of these results by following the instructions in this README.md.

Future Outlook

The first versions of Rasa were built when word2vec was the most hyped-up language model. The framework has evolved many times over to incorporate the state-of-the-art (SOTA) models built by the community at large, such as BERT, ConveRT, GPT-2, and now the next generation of models and LLMs, which can leverage in-context learning. We’re excited about CALM, which we believe provides the right balance between structure and flexibility, tailored to each specific use case.

We encourage you to explore different approaches and consider how they might apply to your needs and use cases. The conversational AI landscape is rich and varied, and there's no one-size-fits-all solution. When choosing an approach, consider your requirements for consistency, flexibility, and adherence to business logic.

What is your experience with different conversational AI approaches? Have you encountered challenges with consistency in AI assistants? We'd love to hear your thoughts and experiences!

Next Steps

We’d love for you to play around with CALM and let us know your thoughts. You can get started by:

- Spinning up the codespace associated with this blog post from the repository here

- Checking out the docs here

- Watching our YouTube playlist of videos to help you get started with Rasa and CALM

Notes

- ¹ CALM stands for “Conversational AI with Language Models”.

- ² CALM + NLU is like CALM, but instead of always using an LLM, it lets an NLU model pick up the easy parts. It's a way of handling simpler queries or user inputs with a more lightweight model, rather than relying on the more computationally intensive LLM for every interaction.