October 5th, 2021

Am I Allergic to This? Voice Assistant for Sight Impaired People

Angus Addlesee

This is a streamlined abridgement of a paper that I co-authored with the whole team detailed below, published (soon) at the International Conference on Multimodal Interaction 2021. If you build on this work, please cite our paper, titled “Am I Allergic to This? Assisting Sight Impaired People in the Kitchen”.

TL;DR

Blind and partially sighted people need assistance with food packaging that often contains safety-critical information. Therefore, Textual Visual Question Answering (VQA) could prove critical in increasing the independence of people affected by sight loss. For example, comprehending text within an image is necessary to determine: what type of soup is in a can, how long to cook a microwave meal, when a box of eggs will expire, and whether a meal contains an ingredient they are allergic to. These examples all relate to a kitchen setting - a particularly challenging area for visually impaired people.

We extended the existing Aye-saac voice assistant prototype with this task and setting in mind. We developed textual VQA components to accurately understand what a user is asking, extract relevant text from images in an intelligent manner, and to provide natural language answers that build upon the context of previous questions. As our system is created to be assistive, we designed it with a particular focus on privacy, transparency, and controllability. These are vital objectives that existing systems do not cover. We found that our system outperformed other VQA systems when asked real food packaging questions from visually impaired people in the VizWiz VQA dataset.

The Team

At the start of 2020, I co-supervised a group of seven MSc students doing Heriot-Watt University’s Conversational Agents and Spoken Language Processing course. We built the foundations of a voice assistant for blind and partially sighted people. You can read about that work and the students here.

This year’s team worked on this project over a 12-week period as part of the same course. They are:

Students:

Elisa Ramil Brick LinkedIn

Vanesa Caballero Alonso LinkedIn

Conor O’Brien LinkedIn / GitHub

Sheron Tong LinkedIn

Emilie Tavernier

Amit Parekh LinkedIn / GitHub

Supervisors:

Angus Addlesee LinkedIn / Twitter

Oliver Lemon LinkedIn / Twitter

Project Motivation

Cooking is a crucial skill for anyone wanting to lead a healthier and more independent life. Learning to cook is already challenging, but is significantly more complex for people affected by sight loss. Charities like Sight Scotland Veterans support this group by offering accessible kitchen appliances, kitchen layout advice, and wonderful social cooking events.

At CVPR 2020, a panel discussion (which you can watch here) with four blind technology experts revealed that textual information was a daily challenge - for example, finding the correct platform in a train station.

Textual information is everywhere in the kitchen and this information is often critical for safety. We use textual information to determine what type of soup is in a can, how long to cook a microwave meal, when a box of eggs will expire, and whether a meal contains an ingredient we are allergic to.

Technologies do exist to solve this problem, and they tend to be either human-in-the-loop, or end-to-end:

Human-in-the-loop systems allow visually impaired people to connect to sighted volunteers, or to pay trained staff, depending on the platform. They can then use their phone camera to send photos or video and ask questions about the images. The volunteers are very accurate and can answer questions requiring timely or cultural understanding (for example “What does this work by Banksy mean?”), but these systems are not private. Images may contain personal information, like addresses on letters or family photos, that the user did not intend to share and that an unknown volunteer can now see.

End-to-end systems are more secure as they do not involve humans; instead, they rely on powerful models that have been trained on large amounts of data. These models are not very transparent, however, making it very difficult to determine the reasoning behind a particular response. Transparency is incredibly important when developing assistive technologies, as incorrect answers can have very dangerous outcomes - for example, incorrectly answering questions about medication.

End-to-end systems tend to just read text aloud directly for this reason, and because textual visual question answering (VQA) is a challenging task. Although reading every word on a cereal box would ultimately provide an answer to most questions, this is simply not accessible: it is frustrating and takes a huge amount of time (as also discussed in this panel).

Our System

We decided to make some initial steps towards a voice assistant that can adequately perform textual VQA. We aimed to answer questions that required understanding of the text within an image, additional context from previous interactions, and some world context. For example, “Is this safe to eat?” requires the current date and a user’s allergens to answer adequately.

We also focused on privacy, transparency, and controllability to ensure the system is suitable for visually impaired people.

Introducing Aye-saac

As mentioned, a group of MSc students at Heriot-Watt University built a baseline voice assistant for visually impaired people from scratch. They called it Aye-saac and you can read about it here.

This year we built upon Aye-saac by building new components, improving existing ones, and adding pre-processing steps for textual VQA.

Thanks to Aye-saac’s microservice-based architecture, its behaviour is very explainable and components can be added or tweaked very easily. This transparent and controllable foundation was exactly what we needed.

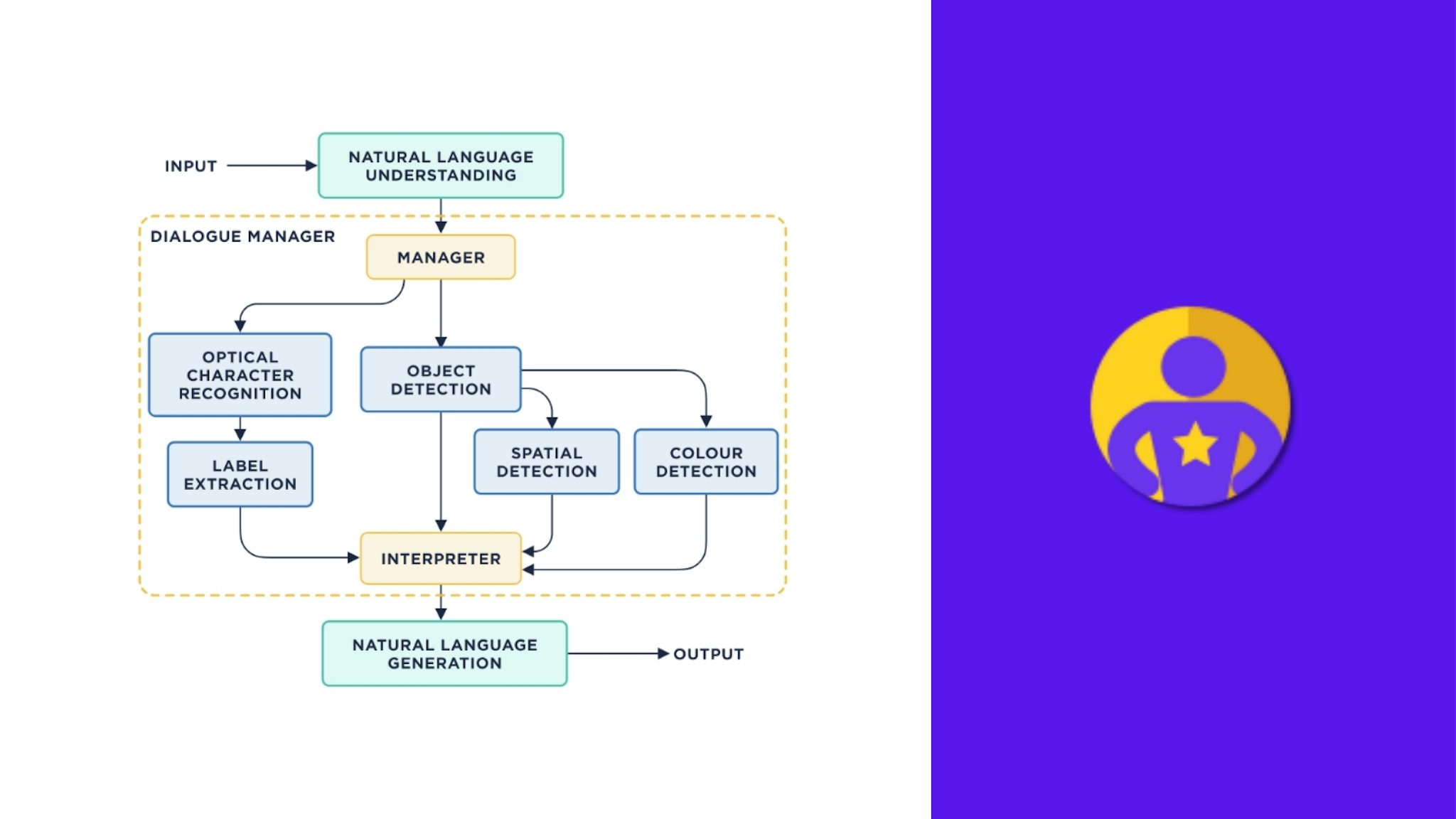

In a similar fashion, we created an updated architecture diagram and next we will cover each of our updates in more detail.

You will notice that there is a new “Spatial Detection” component that we do not discuss below. That was implemented by another group of students - keep an eye out for that work being published very soon!

Following the user’s question:

Natural Language Understanding (NLU)

Aye-saac originally used a Rasa NLU model to receive the user’s question and ‘understand’ it.

We continued to use Rasa as it is a brilliant open-source conversational AI framework that can transform the raw-text question into a structured form with key details extracted.

The previous team implemented a very simple Rasa model, however, so we needed to update it to handle all of our added functionality. We decided to use a Dual Intent Entity Transformer (DIET) model within Rasa, using spaCy’s pretrained language models. Instead of training separate intent classification and entity extraction models, DIET trains these together, which boosts overall performance.

In order to understand the new questions that we planned to answer, we needed more training data. We therefore crowdsourced data from fellow students. Examples include:

- Are there nuts in this? (intent:detect_ingredients)

- Is this still good to eat? (intent:detect_expiration)

- When does this go off? (intent:detect_expiration)

- I should stay away from eating dairy and molluscs, is this safe for me? (intent:detect_safety)

With our newly collected data, we trained the DIET model and our NLU was ready, understanding the intent of the user and extracting key entities (like dairy and molluscs in the last example) for use by downstream components.

We also implemented a ‘user-model’ that stored the user’s allergens detected by Rasa. This then allows us to answer vague questions like “Can I eat this?” with more specific justification.
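A minimal sketch of how such a user-model might look; this is not the actual Aye-saac code. The class name, the `allergen` entity label, and the shape of the parsed input are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class UserModel:
    """Hypothetical store for allergens the user has mentioned so far."""

    allergens: Set[str] = field(default_factory=set)

    def update(self, parsed: dict) -> None:
        # Keep every entity labelled "allergen" (the label name is assumed).
        for entity in parsed.get("entities", []):
            if entity.get("entity") == "allergen":
                self.allergens.add(entity["value"].lower())

    def unsafe_ingredients(self, ingredients: List[str]) -> List[str]:
        # Return the remembered allergens that appear in an ingredient list.
        found = {item.lower() for item in ingredients}
        return sorted(self.allergens & found)


user = UserModel()
# Assumed structured output for the detect_safety example above.
user.update({
    "intent": "detect_safety",
    "entities": [
        {"entity": "allergen", "value": "dairy"},
        {"entity": "allergen", "value": "molluscs"},
    ],
})
# A later, vaguer question ("Can I eat this?") can reuse the stored allergens.
flagged = user.unsafe_ingredients(["Wheat", "Dairy", "Salt"])
```

Because the allergens persist between turns, the answer to a vague follow-up can name the specific ingredient that makes an item unsafe.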

Using Rasa for our NLU made it very easy to update the existing model by training a new one from scratch and just directly replacing the old one. We were very impressed by the performance of the DIET model overall.

Manager within the Dialogue Manager

Once the user’s question has been ‘understood’ by our NLU component, the manager selects a path through the various components within Aye-saac. As we are focusing on textual VQA, the manager passes the processed question through the optical character recognition (OCR) and label extraction components for almost all of our new intents.

The manager component also controls the cameras, and sends the photo along the same path as the user’s question.
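The routing idea can be sketched roughly as below. This is an illustration only: the route table, the message dictionary, and the stand-in component functions are hypothetical, and the real system passes messages between microservices instead of calling functions directly.

```python
from typing import Callable, Dict, List

# Assumed mapping from NLU intent to the components the question visits.
ROUTES: Dict[str, List[str]] = {
    "detect_ingredients": ["ocr", "label_extraction"],
    "detect_expiration": ["ocr", "label_extraction"],
    "detect_safety": ["ocr", "label_extraction"],
}


def select_path(intent: str) -> List[str]:
    # Intents without a route visit no components in this sketch.
    return ROUTES.get(intent, [])


def run(intent: str, question: str, image: bytes,
        components: Dict[str, Callable[[dict], dict]]) -> dict:
    # The photo travels along the same path as the question.
    message = {"intent": intent, "question": question,
               "image": image, "visited": []}
    for name in select_path(intent):
        message = components[name](message)
    return message


def make_stub(name: str) -> Callable[[dict], dict]:
    # Stand-in component that only records that it was visited.
    def component(message: dict) -> dict:
        message["visited"].append(name)
        return message
    return component


components = {name: make_stub(name) for name in ("ocr", "label_extraction")}
result = run("detect_expiration", "When does this go off?", b"", components)
```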

Optical Character Recognition (OCR)

OCR extracts text from images and is therefore crucial for our project. One OCR challenge that we quickly identified was the fact that the text in images is not always straight. It would be extremely difficult to guide a visually impaired person to rotate objects on varying axes to straighten the text in relation to the camera. Aye-saac’s answers all rely on the OCR performance, however, so boosting its accuracy was a high priority.

We implemented a “skew correction” pre-processing step in the OCR component to improve the reading of text on items held at an angle. In short, we dilate the text until characters overlap to form rectangles. The skew angle is then computed with reference to the rectangle’s longest edge. Here is an example to illustrate this process:

This skew correction improved the word-level accuracy of Tesseract OCR by 23%, but we decided to opt for Keras OCR in the end as it had a slightly better overall performance (4%).
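The final step of the skew correction described above, computing the angle from the rectangle’s longest edge, can be sketched in plain Python. This sketch assumes the dilation and rectangle fitting have already been done by an image library and that the four corners are given in order around the rectangle; the function names are illustrative and not taken from the Aye-saac code.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def skew_angle(corners: List[Point]) -> float:
    """Angle in degrees between the rectangle's longest edge and the x-axis."""
    edges = [(corners[i], corners[(i + 1) % 4]) for i in range(4)]
    (x1, y1), (x2, y2) = max(
        edges, key=lambda e: math.hypot(e[1][0] - e[0][0], e[1][1] - e[0][1])
    )
    angle = math.degrees(math.atan2(y2 - y1, x2 - x1))
    # Fold into (-90, 90]: an edge and its reverse describe the same tilt.
    if angle > 90:
        angle -= 180
    elif angle <= -90:
        angle += 180
    return angle


def rotate(points: List[Point], degrees: float) -> List[Point]:
    # Helper for the example: rotate points about the origin.
    t = math.radians(degrees)
    return [(x * math.cos(t) - y * math.sin(t),
             x * math.sin(t) + y * math.cos(t)) for x, y in points]


# A 4x1 "line of text" tilted by 10 degrees.
box = rotate([(0, 0), (4, 0), (4, 1), (0, 1)], 10)
angle = skew_angle(box)
```

Rotating the image by the negative of this angle would then straighten the text before it is passed to the OCR model.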

Label Extraction

Once the NLU has interpreted the user’s question, and the OCR has extracted any text from the image, the label extraction component is then tasked with finding the correct information within the text to answer the user’s question.

As mentioned earlier, some answers provide safety-critical information and we therefore aimed to design a transparent extractor. This gives us the ability to easily explain and tweak the reasoning behind a particular answer. We opted for a rule-based approach that depends on food packaging labels typically found in the UK.

Keywords were of particular importance, with “ingredients”, “nutrition”, “storage”, etc. commonly appearing before the text that contains the answer. Similarly, expiry dates tended to be in certain formats: dd MMM, dd/mm/yyyy, dd.mm.yyyy, MMMyyyy, etc.
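To make the rule-based idea concrete, here is a small sketch under stated assumptions. It only handles the three keywords named above and the two numeric date formats (dd/mm/yyyy and dd.mm.yyyy); the function names and the sample label text are made up for illustration, and the real extractor covers more cases.

```python
import re
from datetime import date
from typing import Optional

KEYWORDS = ("ingredients", "nutrition", "storage")

# Matches dd/mm/yyyy or dd.mm.yyyy with a consistent separator.
NUMERIC_DATE = re.compile(
    r"\b(?P<d>\d{1,2})(?P<sep>[/.])(?P<m>\d{1,2})(?P=sep)(?P<y>\d{4})\b"
)


def section_after(text: str, keyword: str) -> Optional[str]:
    """Return the text between `keyword` and the next known keyword."""
    lower = text.lower()
    start = lower.find(keyword)
    if start == -1:
        return None
    start += len(keyword)
    # Cut at the nearest following keyword, or at the end of the text.
    ends = [lower.find(k, start) for k in KEYWORDS if k != keyword]
    ends = [e for e in ends if e != -1]
    end = min(ends) if ends else len(text)
    return text[start:end].strip(" :.\n")


def find_expiry(text: str) -> Optional[date]:
    match = NUMERIC_DATE.search(text)
    if not match:
        return None
    try:
        return date(int(match["y"]), int(match["m"]), int(match["d"]))
    except ValueError:
        # OCR noise can produce impossible dates such as 45/13/2021.
        return None


label = ("INGREDIENTS: wheat flour, milk, salt. "
         "STORAGE: keep refrigerated. Best before 05/10/2021")
ingredients = section_after(label, "ingredients")
expiry = find_expiry(label)
```

Each rule is explicit here, so it stays easy to trace why a given answer was produced, which is the transparency property the rule-based design aims for.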

We would like to implement a more complex extractor that uses label layouts and colours (like the traffic light systems) to better interpret product packaging. We deemed this out-of-scope for now as the lack of standardised labels makes this a huge challenge.

With no universal label standards, our version of Aye-saac has to expect a variety of label content and formats. A standard “Accessibility QR Code” would be incredibly easy for any system to detect, and would increase accuracy to ~100%.

Natural Language Generation (NLG)

All of the information gathered from each of the components is then collected in the interpreter and passed to the NLG to generate a natural language system response.

With all of our added functionality, Aye-saac’s controllability was welcomed as we needed to respond to a whole range of new user questions. To avoid hallucinating incorrect responses learned from data, Aye-saac uses a template-based NLG method. Thanks to this, we just had to create several positive and negative responses for each new NLU intent.
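A template-based generator of this kind can be sketched in a few lines. The template wording, the slot names, and the `generate` function below are assumptions for illustration and are not Aye-saac’s actual templates.

```python
import random
from typing import Dict, List, Optional, Tuple

# Hypothetical templates keyed by (intent, positive-or-negative answer).
TEMPLATES: Dict[Tuple[str, bool], List[str]] = {
    ("detect_ingredients", True): [
        "Yes, this contains {items}.",
        "I found {items} in the ingredients.",
    ],
    ("detect_ingredients", False): [
        "I could not find {items} in the ingredients.",
    ],
    ("detect_expiration", True): ["This is in date until {date}."],
    ("detect_expiration", False): ["This expired on {date}."],
}


def generate(intent: str, positive: bool,
             rng: Optional[random.Random] = None, **slots: str) -> str:
    # Pick one of the hand-written responses and fill in its slots.
    options = TEMPLATES[(intent, positive)]
    template = (rng or random).choice(options)
    return template.format(**slots)


reply = generate("detect_ingredients", True, items="nuts")
expired = generate("detect_expiration", False, date="5 October 2021")
```

Every possible response is written by hand, so the system can only say things that someone has reviewed; the trade-off is that each new intent needs its own templates.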

We could now respond to users’ questions:

In the above example, our system will remember that the user is vegetarian for future interactions. For example, if the user then asks whether another food item is ok to eat, our system will note that it does/doesn’t contain meat.

We also implemented a simple hierarchy of foods, noting that ‘sausages’ are ‘meat’ for example. We did not have the time to do this for all food types, however, so our system does not know whether a ‘lemon’ is a ‘fruit’. We could use knowledge graphs in the future for this purpose (e.g. lemon in Wikidata).
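A simple hierarchy like this can be represented as a child-to-parent mapping. In the sketch below only the ‘sausages’ to ‘meat’ link comes from the article; the other entries and the `is_a` helper are illustrative assumptions.

```python
from typing import Dict

# Child -> parent links. Only "sausages" -> "meat" is from the article.
PARENT: Dict[str, str] = {
    "sausages": "meat",
    "bacon": "meat",      # illustrative
    "cheddar": "cheese",  # illustrative
    "cheese": "dairy",    # illustrative
}


def is_a(food: str, category: str) -> bool:
    """Walk up the hierarchy from `food` looking for `category`."""
    current = food.lower()
    target = category.lower()
    seen = set()
    while current is not None and current not in seen:
        if current == target:
            return True
        seen.add(current)  # guards against accidental cycles
        current = PARENT.get(current)
    return False


contains_meat = is_a("sausages", "meat")
knows_lemon = is_a("lemon", "fruit")  # False: lemon is not in the hierarchy
```

The `lemon` case mirrors the gap described above: anything missing from the hand-built mapping is simply unknown, which is where a knowledge graph could help.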

Evaluation

We argue that while VQA is a huge area of research, textual VQA receives little attention - even though it can help visually impaired people become safer and more independent.

To test this, we put our new version of Aye-saac up against Pythia, a VQA challenge winner from Facebook AI Research.

We asked Aye-saac, Pythia, and humans some textual VQA questions from the VizWiz VQA dataset. This dataset contains image-question pairs from people who are affected by sight loss. Some examples include:

Using the food label examples within this dataset, we obtained the following results:

As you can see, Pythia was the quickest to respond but only answered correctly 23.6% of the time. Given that many of the questions are binary yes/no questions, Pythia was bound to get some right. For example, Pythia correctly replied “no” when asked whether a food item contained beef. We believe that Pythia looked for the object beef, but do not know for sure.

Pythia is a VQA challenge winner and we are not claiming that it is a bad system - just that its ability to perform textual VQA is lacking. This is likely due to an absence of controlled OCR, relying on the model to implicitly learn to perform its own OCR while training.

Our main aim is to highlight that textual VQA is a critical, yet overlooked area of research. Explicitly including an OCR component could greatly benefit people.

Aye-saac was the slowest to respond to questions (which we are working on), but did correctly answer 61.1% of the questions. This was obviously not as accurate as a human, but human-in-the-loop systems have their own drawbacks as discussed earlier (privacy, cost, and time to connect).

When Aye-saac was incorrect, the OCR was usually to blame. For example, our allergen detection component is always correct when the OCR is accurate. We did implement skew-correction to mitigate some of the OCR errors, but Aye-saac is completely reliant on existing OCR models.

We have plans to develop a more complex label extractor, use knowledge graphs for food group understanding, and further expand the range of questions our NLU can understand. This should improve performance with current OCR models, but an “accessibility QR code” on packaging would make many of these tasks trivial, and ensure safety-critical answers were accurate.

Conclusion

We have tried to highlight the importance of textual VQA throughout this article and we really hope that it becomes a fundamental part of future VQA systems and evaluation. In addition, we believe that privacy, transparency, and controllability are necessary goals when designing assistive technologies - especially when answering safety-critical questions.

We will link the final paper here and at the top of this article once released. It contains a lot more detail for those of you with questions. The code can also be found here: https://github.com/Aye-saac/aye-saac

This team of MSc students were a delight to work with and were very excited to publish this paper. Please do pop back up to the top of this article and check out their social media.

Excitingly there is another student team! Their paper has just been accepted at ReInAct 2021, so watch out for another article on a similar topic.