May 25th, 2020

How to Use BERT in Rasa NLU

Vincent Warmerdam

Since the release of DIET with Rasa Open Source 1.8.0, you can use pre-trained embeddings from language models like BERT inside of Rasa NLU pipelines. Pre-trained language models like BERT have generated a lot of excitement in recent years, and while they can achieve excellent results on NLP tasks, they also tend to be resource-intensive. The question for many is when the benefits of using large pre-trained models outweighs the increases in training time and compute resources.

To help answer this question, we set up an experiment to serve as an example on how to set up BERT for your own pipeline. We compared three different pipeline configurations: a light configuration, a configuration using ConveRT, and a heavy configuration that included BERT. In this post, you'll learn how you can use models like BERT and GPT-2 in your contextual AI assistant and get practical tips on how to get the most out of these models.

Setup

To demonstrate how to use BERT we will train three pipelines on Sara, the demo bot in the Rasa docs. In doing this we will also be able to measure the pros and cons of having BERT in your pipeline.

If you want to reproduce the results in this document you will need to first clone the repository found here:

git clone git@github.com:RasaHQ/rasa-demo.git

Once cloned, you can install the requirements. Be sure that you explicitly install the transformers and conVert dependencies.

pip install -r requirements.txt

pip install "rasa[transformers]"

You should now be all set to train an assistant that will use BERT. So let's write configuration files that will allow us to compare approaches. We'll make a seperate folder where we can place two new configuration files.

mkdir config

Create the pipeline configurations

For the next step we've created three configuration files in a folder named "config". They only contain the pipeline part that is relevant for NLU model training and hence don't declare any dialogue policies.

config/config-light.yml

language: en

pipeline:

- name: WhitespaceTokenizer

- name: CountVectorsFeaturizer

- name: CountVectorsFeaturizer

analyzer: char_wb

min_ngram: 1

max_ngram: 4

- name: DIETClassifier

epochs: 200

config/config-convert.yml

language: en

pipeline:

- name: ConveRTTokenizer

- name: ConveRTFeaturizer

- name: DIETClassifier

epochs: 200

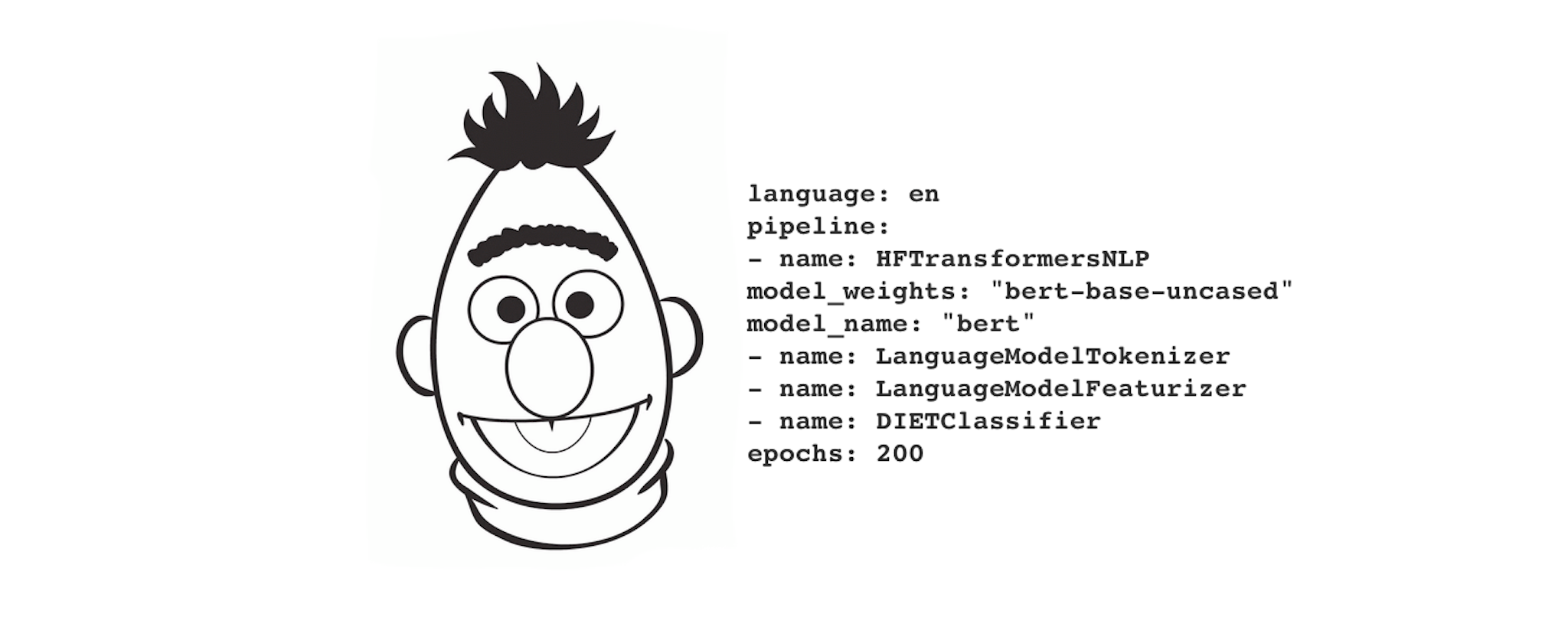

config/config-heavy.yml

language: en

pipeline:

- name: HFTransformersNLP

model_weights: "bert-base-uncased"

model_name: "bert"

- name: LanguageModelTokenizer

- name: LanguageModelFeaturizer

- name: DIETClassifier

epochs: 200

In each case we're training a DIETClassifier for combined intent classification and entity recognition for 200 epochs, but there are a few differences.

In the light configuration we have CountVectorsFeaturizer, which creates bag-of-word representations for each incoming message (at word and character levels).

The heavy configuration replaces it with a BERT model inside the pipeline. HFTransformersNLP is a utility component that does the heavy lifting work of loading the BERT model in memory. Under the hood it leverages HuggingFace's Transformers library to initialize the specified language model. Notice that we add two additional components LanguageModelTokenizer and LanguageModelFeaturizer which pick up the tokens and feature vectors respectively that are constructed by the utility component.

In the convert configuration, the ConveRT components work in the same way. They have their own tokenizer as well as their own featurizer.

Note that we strictly use these language models as featurizers, which means that their parameters are not fine-tuned during training of downstream models in your NLU pipeline. This saves a lot of compute time, and the machine learning models in the pipeline can typically compensate for the lack of fine-tuning.

Run the Pipelines

You can now run all of the configurations:

mkdir gridresults

rasa test nlu --config configs/config-light.yml \

--cross-validation --runs 1 --folds 2 \

--out gridresults/config-light

rasa test nlu --config configs/config-convert.yml \

--cross-validation --runs 1 --folds 2 \

--out gridresults/config-convert

rasa test nlu --config configs/config-heavy.yml \

--cross-validation --runs 1 --folds 2 \

--out gridresults/config-heavy

Results

As the processes run, you should see logs appear. We've highlighted a few lines from each pipeline configuration here.

# output from the light model

2020-03-30 16:21:54 INFO rasa.nlu.model - Starting to train component DIETClassifier

Epochs: 100%|███████████████████████████████| 200/200 [17:06<00:00, ...]

2020-03-30 16:23:53 INFO rasa.nlu.test - Running model for predictions:

100%|███████████████████████████████████████| 2396/2396 [01:23<00:00, 28.65it/s]

...

# output from the heavy model

2020-03-30 16:47:04 INFO rasa.nlu.model - Starting to train component DIETClassifier

Epochs: 100%|███████████████████████████████| 200/200 [17:24<00:00, ...]

2020-03-30 16:49:52 INFO rasa.nlu.test - Running model for predictions:

100%|███████████████████████████████████████| 2396/2396 [07:20<00:00, 5.69it/s]

# output from the convert model

2020-03-30 17:47:04 INFO rasa.nlu.model - Starting to train component DIETClassifier

Epochs: 100%|███████████████████████████████| 200/200 [17:14<00:00, ...]

2020-03-30 17:49:52 INFO rasa.nlu.test - Running model for predictions:

100%|███████████████████████████████████████| 2396/2396 [01:59<00:00, 19.99it/s]

From the logs we can gather an important observation. The heavy model consisting of BERT is a fair bit slower, not in training, but at inference time we see a ~6 fold increase. The ConveRT model is also slower but not as drastically. Depending on your use case this is something to seriously consider.

Intent Classification Results

Here, we compare scores for intent classification, side by side.

| config | precision | recall | f1-score |

|---|---|---|---|

| config-convert | 0.869469 | 0.86874 | 0.867352 |

| config-heavy | 0.81366 | 0.808639 | 0.80881 |

| config-light | 0.780407 | 0.785893 | 0.780462 |

Entity Recognition Results

These are the scores for entity recognition.

| config | precision | recall | f1-score |

|---|---|---|---|

| config-convert | 0.9007 | 0.825835 | 0.852458 |

| config-heavy | 0.88175 | 0.781925 | 0.824425 |

| config-light | 0.836182 | 0.762393 | 0.790296 |

Conclusion

On all fronts we see that the models with pre-trained embeddings perform better. We also see, at least in this instance, that BERT is not the best option. The more lightweight ConveRT features perform better on all the scores listed above, and it is a fair bit faster than BERT too.

As a next step, try running this experiment on your own data. Odds are that our dataset is not representative of yours, so be sure to try out different settings yourself. Lastly, we leave you with a few things to consider when deciding which configuration is right for you.

- Ask yourself, which task is more important - intent classification or entity recognition? If your assistant barely uses entities then you may care less about improved performance there.

- Similarly, is accuracy more important or do you care more about latency of bot predictions? If responses from the assistant become much slower as shown in the above example, you may also need to invest in more compute resources.

- Note that the pre-trained embeddings that we're using here as features can be extended with other featurizers as well. It may still be a good idea to add a CountVectorsFeaturizer to capture words specific to the vocabulary of your domain.

Have you experimented with using pre-trained language models like BERT in your pipeline? We're keen to hear your results when testing on your data set. Join the discussion on this forum thread and share your observations.