October 1st, 2021

New Livestream: Rasa Community Showcase

Emma Jade Wightman

We have another brand new regular stream on our YouTube channel - The Rasa Community Showcase!

During these livestreams, we will discuss various contextual bot projects with our talented community members and contributors, shedding light on their approaches and the challenges they overcame.

This is also a unique opportunity to share community news and other projects that may interest you or that you’d like to collaborate on.

In our first episode, we shared some community-related updates and highlighted some exceptional hobby chatbots and connectors. In addition, we interviewed Rasa Superhero Julian Gerhard about his contribution to the Natural Language Understanding (NLU) Examples GitHub repository: BytePair Embeddings. BytePair Embeddings can be seen as a lightweight variant of FastText. They need less memory because they are more selective about which subtokens they remember. They're also available in 275 languages!

Check out the first episode:

Coming up next!

On Thursday, the 7th of October, at 4pm CEST, we will talk to Angus Addlesee about his project, Aye-Saac, and about developing a voice assistant for sight-impaired people using Rasa Open Source.

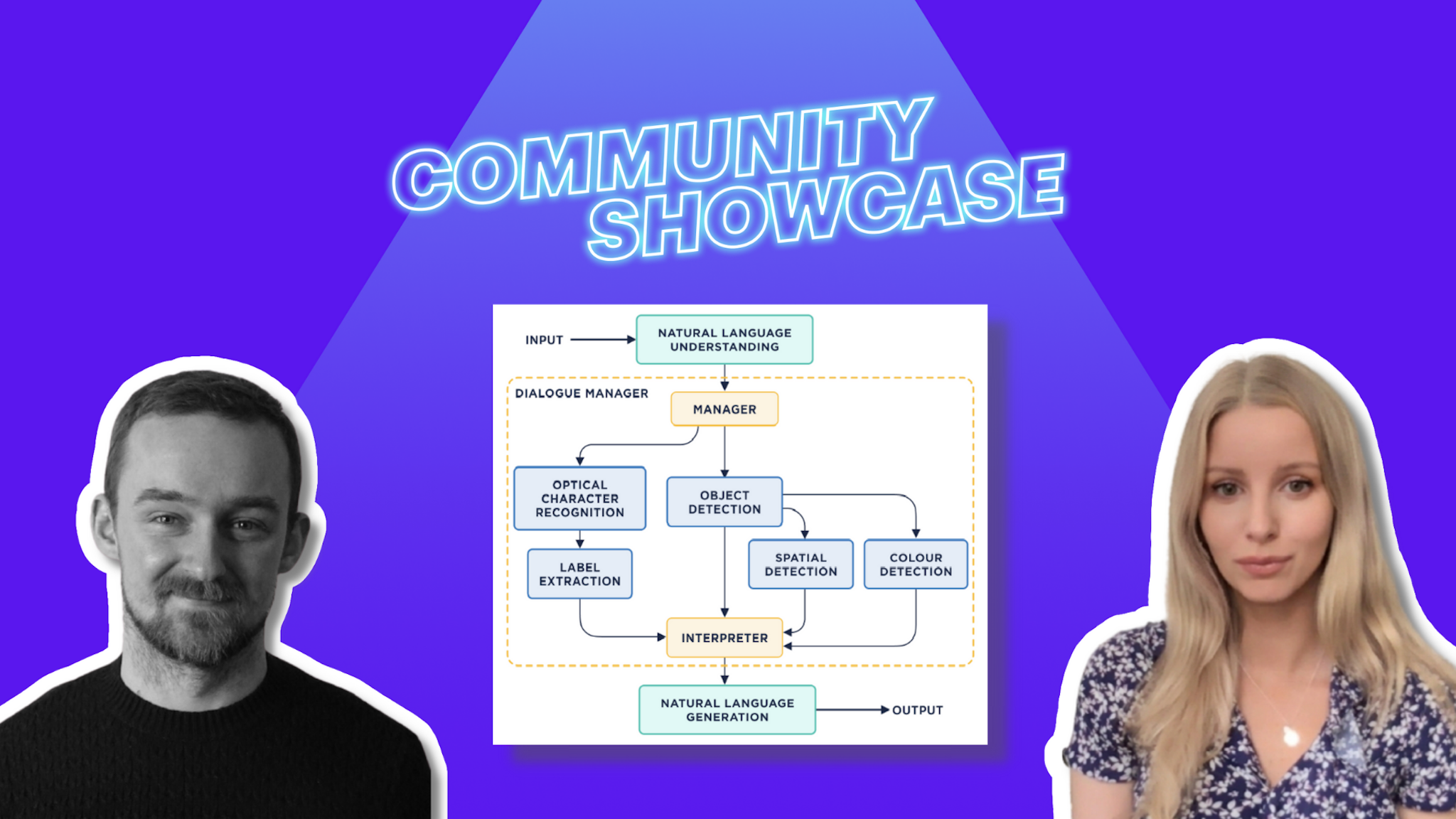

Angus and his team developed textual Visual Question Answering (VQA) components to accurately understand what a user is asking, intelligently extract relevant text from images, and provide natural language answers that build upon the context of previous questions. Because their system is meant to be assistive, they designed it with a particular focus on privacy, transparency, and controllability. These are vital objectives that existing systems do not cover. They found that their system outperformed other VQA systems when asked real food packaging questions from visually impaired people in the VizWiz VQA dataset.

If you’d like to hang out, discuss topics related to the open source community, and ask Angus about his project, don’t forget to set a reminder and tune in on our channel.