With Conversational AI technology quickly evolving, many teams are looking for quantitative results on how LLM-native approaches like CALM and ReAct agents compare. They also want to know the benefits these provide over classic NLU-based chatbots. Evaluating these tools objectively and ensuring your evaluations are ‘ecologically valid’ can be challenging. So, we wrote this post to share some best practices.

It's essential to apply consistent, transparent metrics across all systems being tested, especially since the various approaches have different strengths.

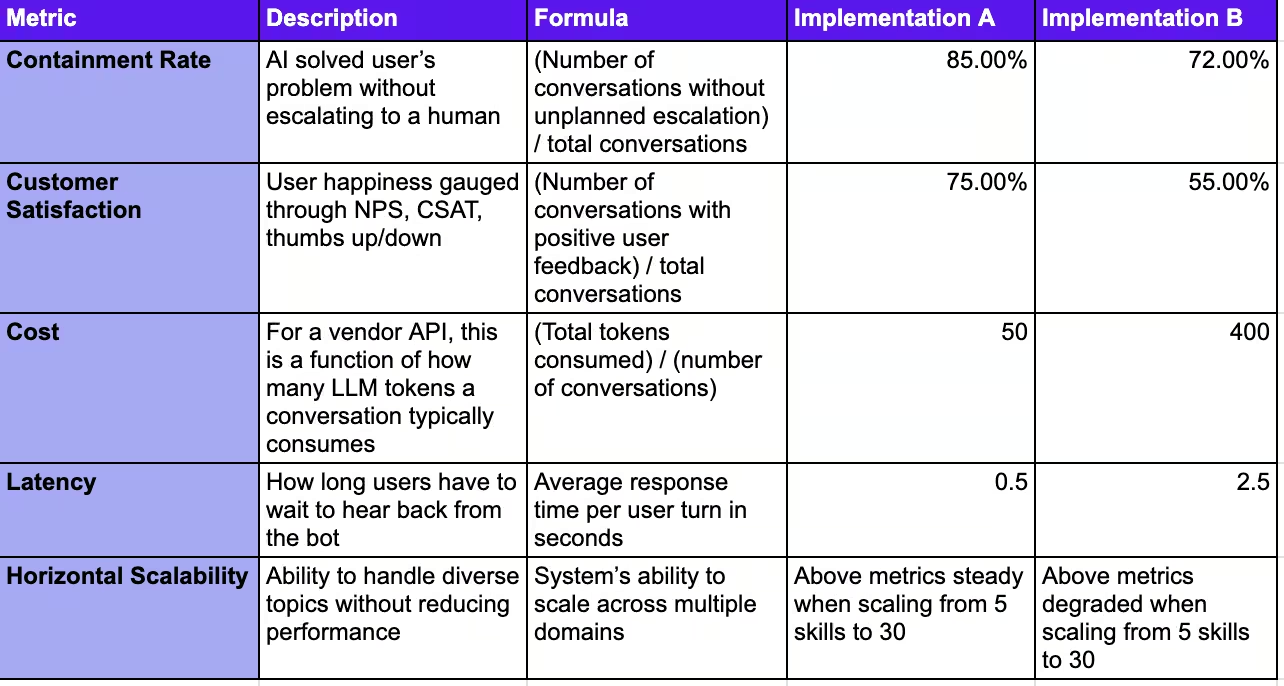

Key Metrics for Evaluation: What to Measure and Why

When evaluating conversational AI systems, it's crucial to start with foundational metrics-the non-negotiables that are essential for assessing performance. Once you’ve covered the basics, you can also consider some optional metrics to gain a deeper understanding of your system’s capabilities. These are metrics that should always be measured on actual production conversations, rather than any kind of synthetically generated conversations.

Online vs. Offline Evaluation: Why It Matters

While evaluating systems online (using real user interactions) provides the most accurate representation of performance, offline evaluation can still play a critical role, especially during development and iteration phases. Offline evaluations rely on pre-collected data or simulated conversations to measure performance without needing to deploy in production. They allow for faster experimentation but may not capture the full complexity of live user interactions.

For an in-depth look at how we’ve approached offline evaluation in the past, see this blog post. However, for the purposes of this post, we focus on online evaluation, where metrics reflect real-time interactions with users in production environments.

Foundational Metrics (The Must-Haves)

These are the core metrics that should always be included in evaluations:

It’s also important to do your best to implicitly measure customer satisfaction and two common methods are:

- Conversion / Activation: User events on web/mobile that indicate whether the problem was resolved or persists.

- Users NOT getting back in touch with support in the 24 hours after the conversation.

In toto, these metrics form the foundation of evaluating conversational AI systems. They allow us to measure whether the system solves the problem as well as how it performs in terms of user satisfaction, cost efficiency, and scalability.

Common Mistakes (Banana Peels): Pitfalls to Avoid in Evaluating Conversational AI

When evaluating conversational AI systems, it's easy to make mistakes that lead to skewed or incomplete results. These missteps, or "banana peels," can derail your ability to make objective, ecologically valid comparisons. Here are three common mistakes to avoid:

Mistake 1: Inconsistent Core Business Logic

It’s essential to maintain consistency in the process you’re automating, not just the user interface. If one implementation asks two questions and the other needs ten pieces of information, you’ve introduced inconsistencies. This skews the evaluation, making it impossible to compare the systems objectively.

Fix: Ensure the core business logic remains identical across systems. This will allow you to focus entirely on the AI’s performance.

Mistake 2: Misinterpreting Containment Rate

Containment rate is a valuable metric for assessing how well the AI handles conversations without human intervention. However, one of the most common mistakes is failing to distinguish between intended and unintended escalations, which leads to misleading conclusions about the AI's success rate.

- Intended escalations occur when the assistant is designed to escalate a conversation (e.g., the user requests something outside its capabilities).

- Unintended escalations happen when the user becomes frustrated and asks to speak with a human, indicating a failure of the system.

Also note thatif one of your implementations has more skills than the other, the less complete implementation will have to escalate more, so make sure your business logic is consistent across implementations (Mistake 1 above).

The Fix: When measuring containment rate, always separate intended from unintended escalations. This will provide a clearer picture of the system's actual performance and highlight areas where the AI needs improvement.

Mistake 3: Relying Solely on Manual Evaluation

Manual evaluations of conversational AI systems can introduce subjectivity, as different evaluators may have varying standards for success. This approach becomes problematic when assessing key metrics like solution rate and customer satisfaction, as it makes it difficult to obtain valid and consistent comparisons across different systems. Additionally, manual evaluation can be costly and inefficient, especially when it comes to measuring things like latency and cost-effectiveness over time.

However, while automated evaluation is critical for scalability and consistency, it’s equally important to not rely solely on automation. Automated tools can miss nuanced issues, especially when dealing with generative AI. For example, a major telecom company set the bar too low by having humans only flag glaring errors or hallucinations, overlooking subtler insights that could have improved the bot’s overall effectiveness.

The Fix: Automate your evaluations wherever possible. Use standardized scripts to track core metrics like containment rate and latency, ensuring your results are objective, consistent, and free from human bias. Automated evaluation can also help prevent costs from spiraling due to inefficient LLM usage, especially when it comes to mismanaged API calls.

However, supplement automation with manual review of a representative sample of conversations. Human oversight can help identify subtle issues that automation might miss-especially in areas like generative model outputs, where hallucinations and conversational flow can greatly affect user experience. This blend of automation for scale and manual review for depth ensures that your evaluation process is both comprehensive and precise.

What You Can Do Today

Use a dashboard or spreadsheet to track your metrics as you compare your AI Assistants’ performance. We’ve created a Google Sheet here that has key metrics for rows and implementations for columns. We encourage you to copy and use it!

Final Thoughts: Creating Objective and Ecologically Valid Conversational AI Evaluations

As we've explored, objectively evaluating chatbot implementations is not just a technical exercise-it's crucial for building AI assistants that deliver tangible value in real-world scenarios. To recap:

- Focus on foundational metrics: containment rate, customer satisfaction, cost, latency, and scalability. These form the backbone of any robust evaluation framework.

- Avoid common pitfalls: Be vigilant against inconsistent business logic, misinterpreted containment rates, and over-reliance on manual evaluation.

- Balance is key: Combine automated evaluation for consistency and scale with thoughtful manual review for nuanced insights.

The goal isn't just to have a chatbot-it's to deploy a solution that solves real problems efficiently, scales effectively, and enhances user experiences.

What should you do next?

- Implement our evaluation framework using our spreadsheet template. Start tracking these metrics consistently across all your chatbot implementations.

- Run a comparative test between your current system and at least one alternative approach. Pay special attention to how they perform in terms of scalability and latency under various loads.

- Don't forget security: While we've focused on performance metrics, ensure you're also conducting rigorous security assessments, especially if your chatbots handle sensitive data (Stay tuned for a future post that will dive into securing AI systems–until then, you can explore additional insights in this resource).

- Share your findings with your team and use the data to drive your next optimization efforts. Consider setting up regular review cycles to continuously refine your chatbot's performance.

By embracing these objective evaluation methods, you're not just improving a chatbot-you're paving the way for AI that truly enhances customer experiences, drives business value, and scales with your growing needs. Track your Assistants’ metrics in this spreadsheet today 🚀