Rasa Core is an open source machine learning-based dialogue system for building level 3 contextual AI assistants. In version 0.11, we shipped our new embedding policy (REDP), which is much better at dealing with uncooperative users than our standard LSTM (Rasa users will know this as the KerasPolicy). We're presenting a paper on it at NeurIPS, and this post explains the problem that REDP solves and how it works.

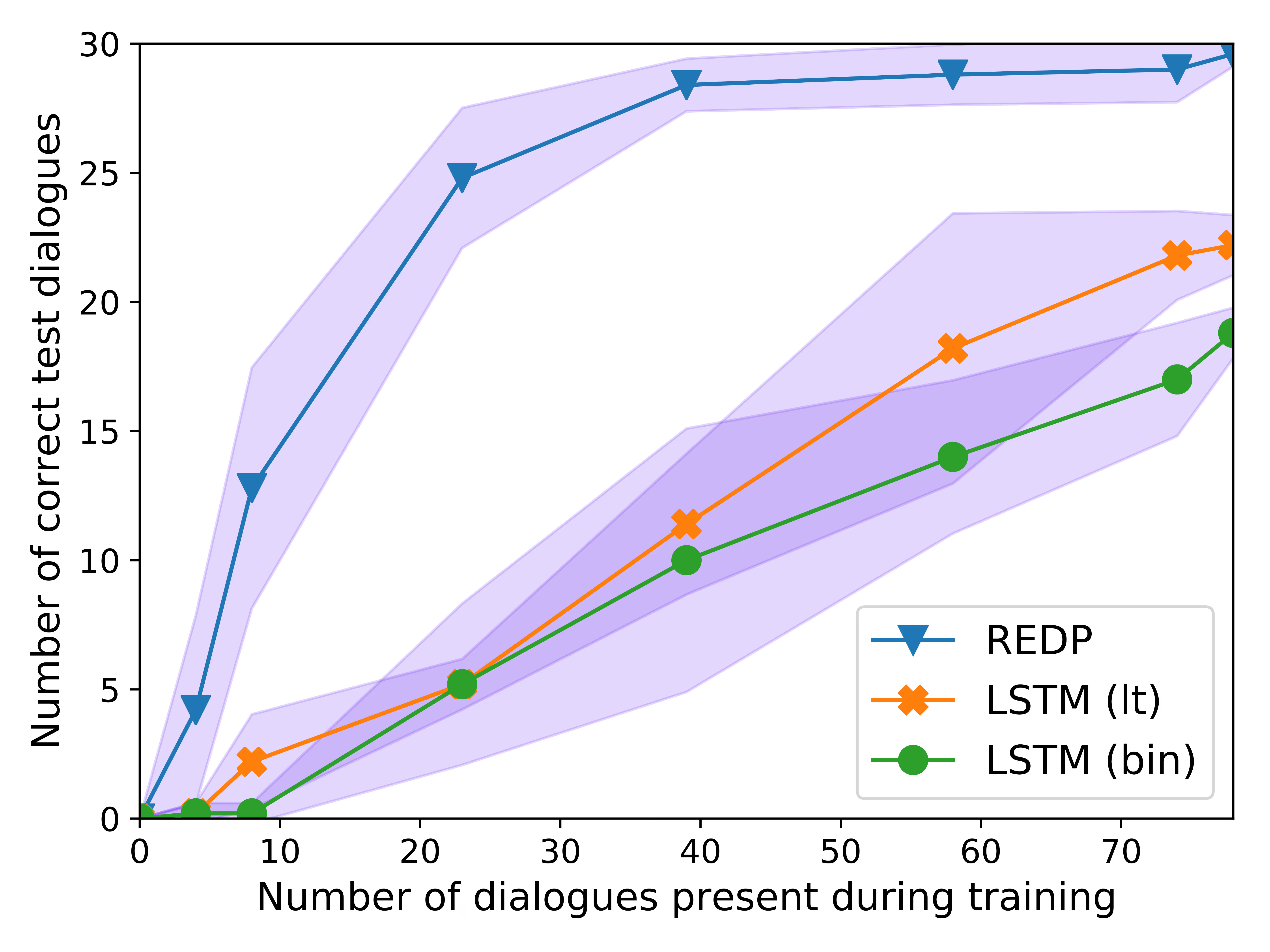

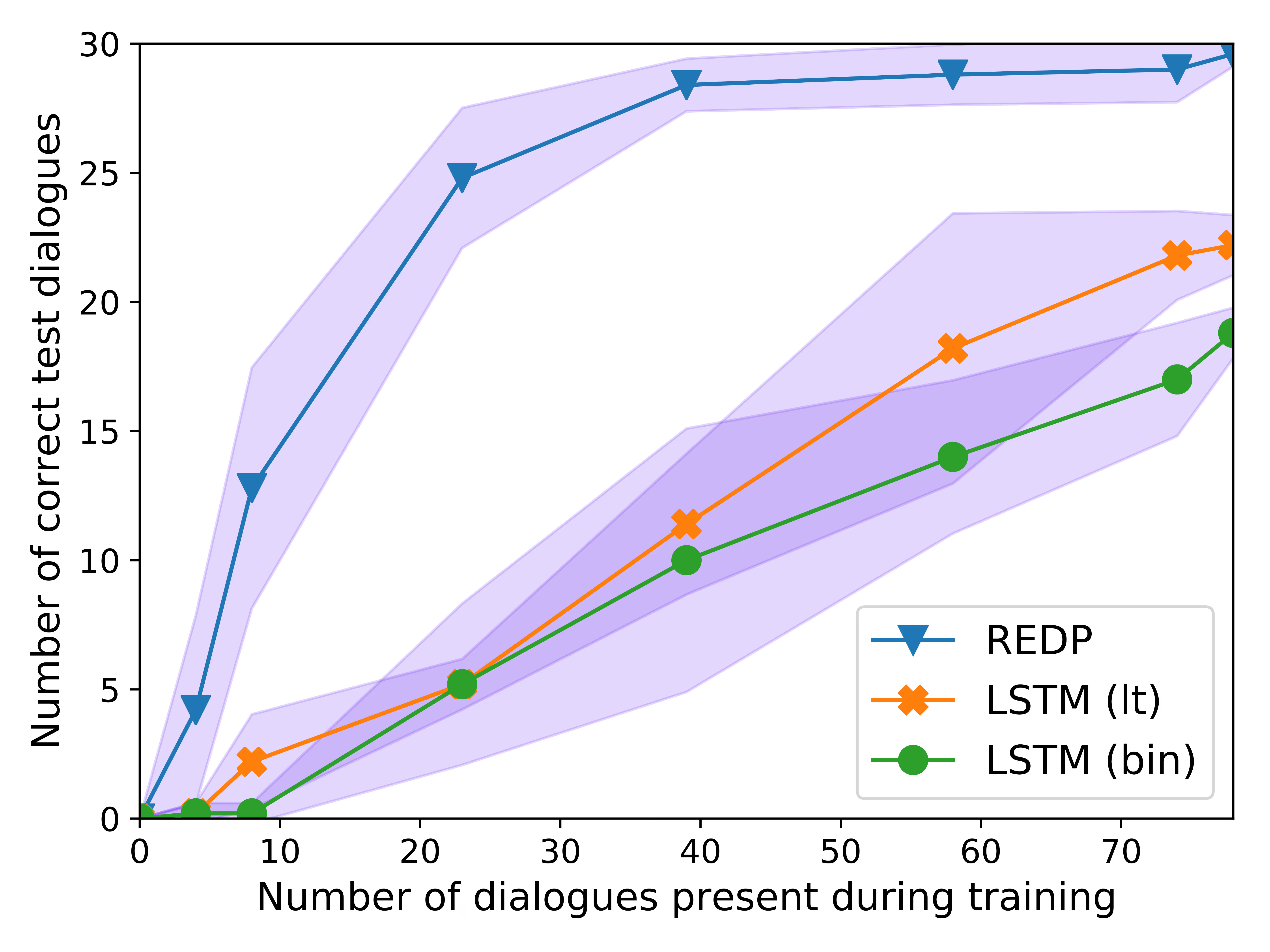

Our new dialogue model (REDP) outperforms our standard LSTM policies at dealing with uncooperative users. These plots are explained in more detail below.

Uncooperative Users Make a Developer's Life Hard

The hard thing about building a good AI assistant is dealing with the infinite ways that your users stray from the happy path. REDP, our new dialogue policy, has two benefits: (1) it's much better at learning how to deal with uncooperative behavior, and (2) it can re-use this information when learning a new task.

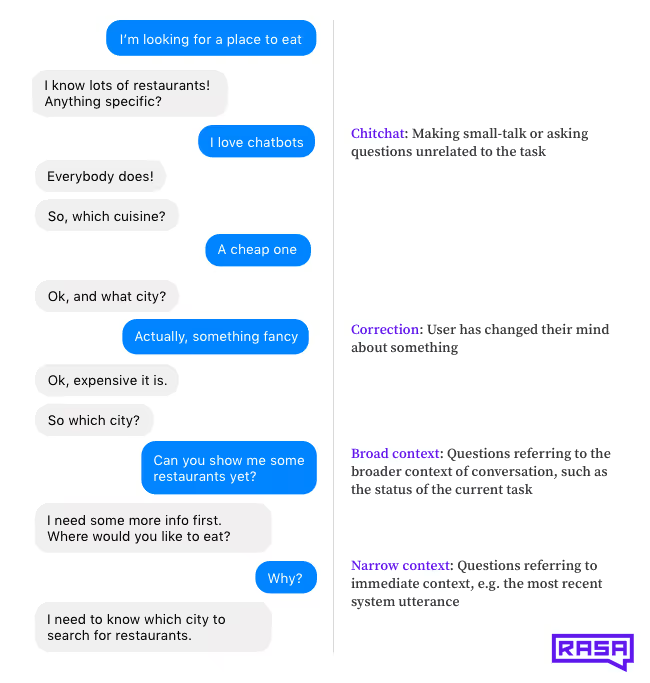

What do we mean by uncooperative behavior? To show the point, we'll take the always-popular restaurant recommendation example, but the same applies to building an assistant for IT troubleshooting, customer support, or anything else. Say you need 3 pieces of information from a user to recommend a place to eat. The obvious approach is to write a while loop to ask for these 3 things. Unfortunately, real dialogue isn't that simple and users won't always give you the information you asked for (at least not right away).

When we ask a user "what price range are you looking for?", they might respond with:

- "Why do you need to know that?" (

narrow context) - "Can you show me some restaurants yet?" (

broad context) - "Actually no I want Chinese food" (

correction) - "I should probably cook for myself more" (

chitchat)

We call all of this uncooperative behavior. There are many other ways a user might respond, but in our paper we study these four different types. Here's an example conversation:

In each case, the assistant has to respond in a helpful way to the user's (uncooperative) message, and then steer the conversation back in the right direction. To do this correctly you have to take different types of context into account. Your dialogue has to account for the long-term state of the conversation, what the user just said, what the assistant just said, what the results of API calls were, and more. We describe this in more detail in this post.

Using Rules to handle uncooperative behavior gets messy fast

If you've already built a couple of AI assistants or chatbots, you probably realize what a headache this is and can skip to the next section. But let's try and come up with some rules for one of the simplest and most common uncooperative responses: I don't know. To help a user find a restaurant case we might ask about the cuisine, location, number of people, and price range. The API we're querying demands a cuisine, location, and number of people, but the price range is optional.

We want our assistant to behave like this: if the user doesn't know the answer to an optional question, proceed to the next question. If the question is not optional, send a message to help them figure it out, and then give them another chance to answer. So far, so simple.

But if the user says I don't know twice in a row, you should escalate (for example handing off to a human agent, or at least acknowledging that this conversation isn't going very well). Except of course if one of the "I don't know"s was in response to an optional question.

You can handle this much logic pretty well with a couple of nested if statements. But to deal with real users, you'll need to handle many types of uncooperative behavior, and in every single user goal that your assistant supports. To a human, it's obvious what the right thing to do is, but it's not so easy to write and maintain a consistent set of rules that make it so. What if we could build a model that could figure out these dialogue patterns, and re-use them in new contexts?

REDP Uses Attention to Handle Uncooperative Dialogue

Attention is one of the most important ideas in deep learning from the last few years. The key idea is that, in addition to learning how to interpret input data, a neural network can also learn which parts of the input data to interpret. For example, an image classifier that can detect different animals can learn to ignore the blue sky in the background (which is not very informative) and pay attention mostly to the shape of the animal, whether it has legs, and the shape of the head.

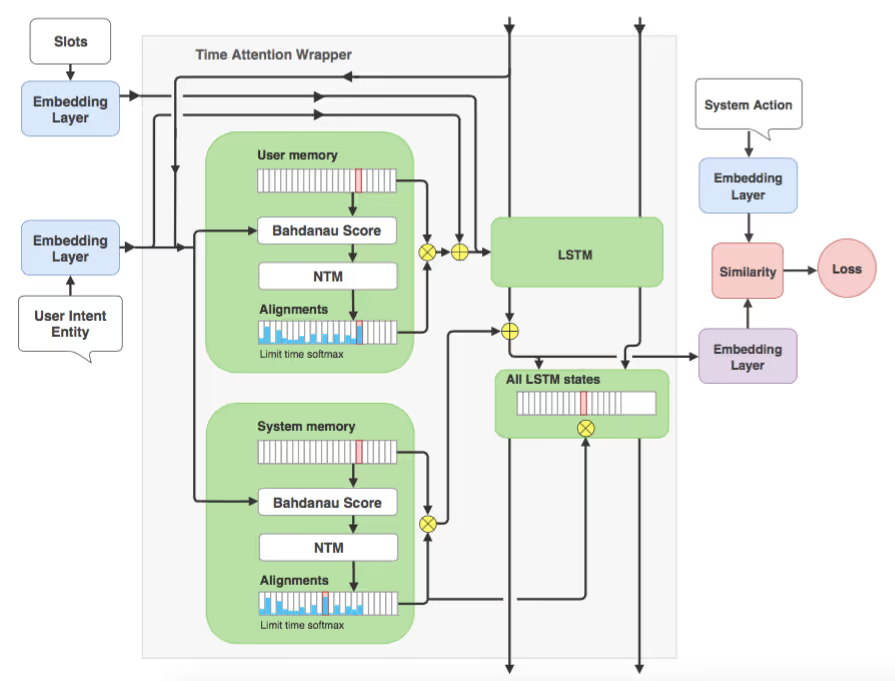

We used the same idea to deal with uncooperative users. After responding correctly to a user's uncooperative message, the assistant should return to the original task and be able to continue as though the deviation never happened. REDP achieves this by adding an attention mechanism to the neural network, allowing it to ignore the irrelevant parts of the dialogue history. The image below is an illustration of the REDP architecture (a full description is in the paper). The attention mechanism is based on a modified version of the Neural Turing Machine, and instead of a classifier we use an embed-and-rank approach just like in Rasa NLU's embedding pipeline.

Attention has been used in dialogue research before, but the embedding policy is the first model which uses attention specifically for dealing with uncooperative behavior, and also to reuse that knowledge in a different task.

REDP Learns when NOT to Pay Attention

In the figure below, we show a Rasa Core story in the middle, and a corresponding conversation on the right. On the left is a bar chart showing how much attention our model is paying to different parts of the conversation history when it picks the last action (utter_ask_price). Notice that the model completely ignores the previous uncooperative user messages (there are no bars next to chitchat, correct, explain, etc). The embedding policy is better at this problem because after responding to a user's question, it can continue with the task at hand and ignore that the deviation ever happened. The hatched and solid bars show the attention weights over the user messages and system actions respectively.

REDP is Much Better than an LSTM Classifier at Handling Uncooperative Users

This plot compares the performance of REDP and the standard Rasa Core LSTM (Rasa users will know this as the KerasPolicy). We are plotting the number of dialogues in the test set where every single action is predicted correctly, as we add more and more of the training data. We run two slightly different versions of the LSTM (for details, read the paper).

Reusing Patterns Across Tasks

We didn't just want to see how well REDP could deal with uncooperative users, but also to see if it could reuse that information in a new context. For example, say your Rasa assistant already has a bunch of training data from real users (being uncooperative, as they always are 🙂). Now you want to add support for a new user goal. How well can your assistant handle deviations from the happy path, even when it's never seen uncooperative behavior in this task before?

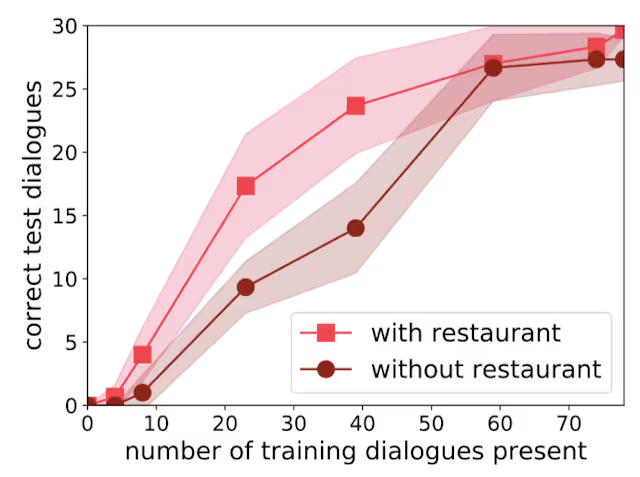

To test this, we created a train-test split of dialogues for a hotel booking task (containing a bunch of uncooperative behavior), and compared the performance with and without including training data from another task (restaurant booking).

V1 of REDP Showed a Big Benefit from Transfer learning

The plot above shows some results for an early version of REDP (not the final one, we'll show that next). The test set is in the hotel domain. The squares show how the performance improves when we also include training data from the restaurant domain. There's a big jump in performance, which shows that REDP can re-use knowledge from a different task! This is also called transfer learning.

Here is the same plot for the LSTM. There is some evidence of transfer learning, but it's much smaller than for REDP:

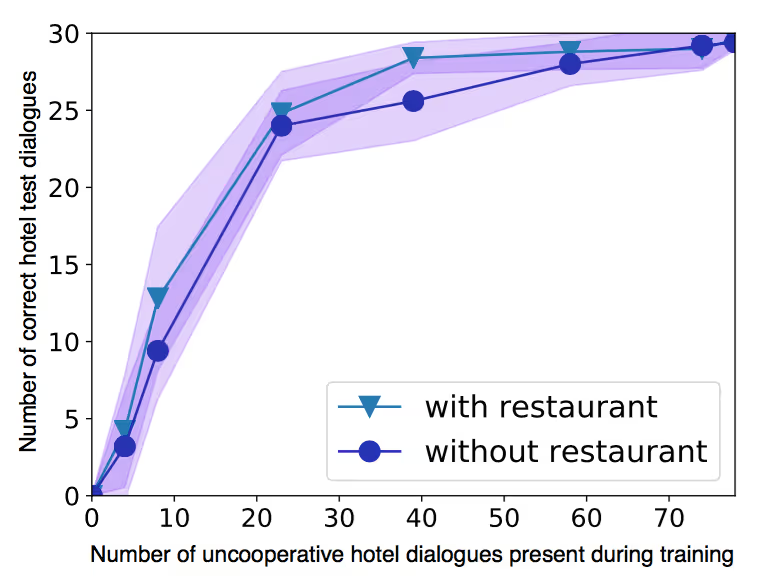

V2 of REDP Solves the Task Very Quickly

This plot shows the results for the 'final' version of REDP, which is described in the paper and implemented in Rasa Core. The performance is much better than V1. With only half the data, REDP reaches almost 100% test accuracy. We still see a benefit from transfer learning, but there isn't much room for improvement when adding the restaurant training data.

Next Steps

We're really excited about the embedding policy, and have a bunch more experiments running to show off what it can do. It made mincemeat of the first task we gave it, so we're throwing it at some even harder problems to study transfer learning in the wild.

Go and try REDP on your dataset! The code and data for the paper is available here. Please share your results in this thread on the Rasa forum.

There's a lot of hard work ahead to make the 5 levels of AI assistants a reality, so if you want to work on these problems, and ship solutions into a codebase used by thousands of developers worldwide, join us! We're hiring.