In 2019 I wrote that it’s about time to get rid of intents. One year later, we shipped the first intentless (or “end-to-end”) dialogue model in Rasa. Now, we’re announcing a big step forward, bringing the power of LLMs into Rasa’s dialogue management.

- Prefer to watch? Check out the webinar replay

- Want access to our beta program? Upgrade to Rasa Pro

Intents provide structure

First, a word in defense of intents. Intents are extremely useful. A remarkable feature of language is that humans can understand sentences that have never been said before, like this headline from a couple of years ago:

Iceland’s president admits he went ‘too far’ with threat to ban pineapple pizza

When we define intents, we create buckets that represent, at a high level, the kinds of things people will say to our bot. We simplify the problem of understanding the infinite possibilities of language down to assigning user messages to the correct bucket.

Helpful as they are, intents are also a bottleneck

Let’s be real: intents are made up and don’t really exist. People don’t think in intents; they say what’s on their mind. As we take an AI assistant from a prototype to something really good, many of the challenges we encounter stem from the rigid assumption that every user message has to fit into an intent.

Intents present different challenges as a conversational AI product matures:

- When bootstrapping a new assistant, defining intents upfront is time-consuming and error-prone

- When scaling your team, knowing what message belongs to what intent becomes a huge overhead

- When improving your bot, crowbarring in new intents becomes ever harder

Luckily, recent advancements in large language models (LLMs) have transformed the way we approach conversational interfaces. ChatGPT and its competitors are immensely powerful - and don’t use intents.

I’m excited about tapping into the power of LLMs to enhance enterprise-grade conversational AI. Rasa users have been able to use LLMs like BERT and GPT-2 in their NLU pipeline since we introduced the DIET architecture in early 2020. While we can just use these models to predict intents, we can do much more if we reimagine our approach to conversational AI.

Using LLMs to power an intent-based bot is like driving a Ferrari on 10 mph roads

Intentless dialogue is now a beta feature in Rasa Pro

We’ve built an LLM-powered, intentless dialogue model and are excited to share the beta release with the world today. It’s a new component called the IntentlessPolicy (to use the technical term). Here's a quick tour.

We can bootstrap a new assistant without defining intents

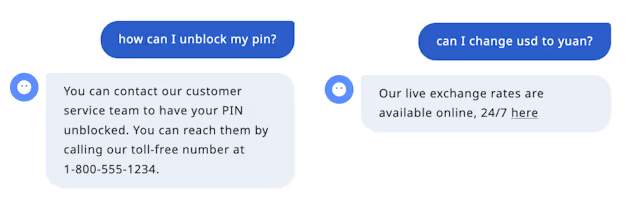

A common starting point for creating a new bot is a set of FAQs. These may have come from another team or department, or simply been scraped from a website. Normally, your first step would be to define intents for each of the questions. What if we could skip that?

All we’ve done at this point is feed a list of question-answer pairs into the bot. We haven’t defined any intents, rules, or stories.

Using the IntentlessPolicy, our bot already provides a good question-answering experience, understanding many different ways that users could phrase their questions.

But the intentless dialogue model can do much more than that.

We can understand how meaning compounds over multiple turns

Let’s explore some conversations that are difficult to model using intents, but can be flawlessly handled by our intentless dialogue model. The conversation below presents two challenges. First, you need to look at both of the user’s messages to understand what they want. Second, I’d struggle to come up with an intent to describe “not zelle. a normal transfer”. In an intent-based world, we would have to map each of the user messages to an intent, and then explicitly describe how these two intents, in this order, lead to the final answer.

But we haven’t done any of that here. The IntentlessPolicy - like all Rasa policies - decides what to do next based on the history of the conversation, not just the last user message. But unlike other Rasa policies, it works directly with the text that users sent, rather than relying on an NLU model to predict an intent.

In a zero-shot fashion, it understands how the series of user messages combines to tell us what to do (remember, all we’ve given the bot so far is a set of FAQs!).
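To make the difference concrete, here is a minimal sketch of the two views of a conversation: the last user message on its own, and the recent history as plain text. This is not how the IntentlessPolicy is implemented. `Turn`, `last_message_view`, and `history_view` are hypothetical names, and the first two turns of the sample conversation are invented for illustration (only the final user message is quoted from the example above).

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # "user" or "bot"
    text: str


def last_message_view(history):
    """Return only the most recent user message (empty string if there is none)."""
    user_turns = [t for t in history if t.speaker == "user"]
    return user_turns[-1].text if user_turns else ""


def history_view(history, max_turns=6):
    """Return the most recent turns as a plain-text transcript, one turn per line."""
    recent = history[-max_turns:]
    return "\n".join(f"{t.speaker.upper()}: {t.text}" for t in recent)


history = [
    Turn("user", "how do I send money to a friend?"),  # invented for illustration
    Turn("bot", "You can send money with Zelle."),      # invented for illustration
    Turn("user", "not zelle. a normal transfer"),       # quoted in the article
]

print(last_message_view(history))
print(history_view(history))
```

On its own, the last message says what the user does not want but not what they were asking about. The transcript view keeps the earlier question, which is the information a history-based policy can use.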

We can contextually understand messages which would otherwise be out of scope

When you or I read the conversation below, it’s perfectly obvious that “I have a broken leg” means - in this context - that the user is not going to come to the branch. This is another example of where intents let us down. The pragmatics of the message matter.

What would your NLU model have to say about the message “I have a broken leg”? It sounds like a medical issue, and would almost certainly trigger an out-of-scope or fallback message. Yet, in this context, it is absolutely relevant to the conversation, and the intentless dialogue model correctly offers an alternative to visiting the branch.

The true measure of understanding is whether we can respond appropriately, not whether we predicted the right intent.

Our intentless model can generalize from very little data

So far, we’ve seen what the intentless policy can do out of the box, without any training data beyond FAQs. But of course, we don’t want to rely solely on what the model already knows. We have to be able to practice conversation-driven development if we want to build a great user experience.

Fortunately, when we released the end-to-end TED policy back in 2020, we already introduced the ability to train Rasa using end-to-end (read: intentless) stories. They look just like regular Rasa stories, but the user turns are represented directly by the text the user sent, rather than by intents and entities.
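As a rough illustration of that difference, the sketch below writes the same story two ways as Python dictionaries. Rasa stories are normally written in YAML; the `intent`, `user`, and `action` step keys are meant to mirror that format, while the intent name `deny`, the response name `utter_offer_alternative`, and the helper `is_end_to_end` are assumptions made up for this example.

```python
# Intent-based story: the user turn is a label predicted by an NLU model.
intent_story = {
    "story": "cannot visit branch (intent-based)",
    "steps": [
        {"intent": "deny"},                     # hypothetical intent name
        {"action": "utter_offer_alternative"},  # hypothetical response name
    ],
}

# End-to-end story: the user turn is the literal text the user sent.
e2e_story = {
    "story": "cannot visit branch (end-to-end)",
    "steps": [
        {"user": "I have a broken leg"},
        {"action": "utter_offer_alternative"},  # hypothetical response name
    ],
}


def is_end_to_end(story):
    """True if the story has at least one raw-text user turn and no intent-labelled turn."""
    steps = story["steps"]
    has_user_text = any("user" in step for step in steps)
    has_intent = any("intent" in step for step in steps)
    return has_user_text and not has_intent


print(is_end_to_end(intent_story))
print(is_end_to_end(e2e_story))
```

The only structural change is in the user step: the bot's side of the story stays the same, which is why end-to-end stories can sit alongside regular ones.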

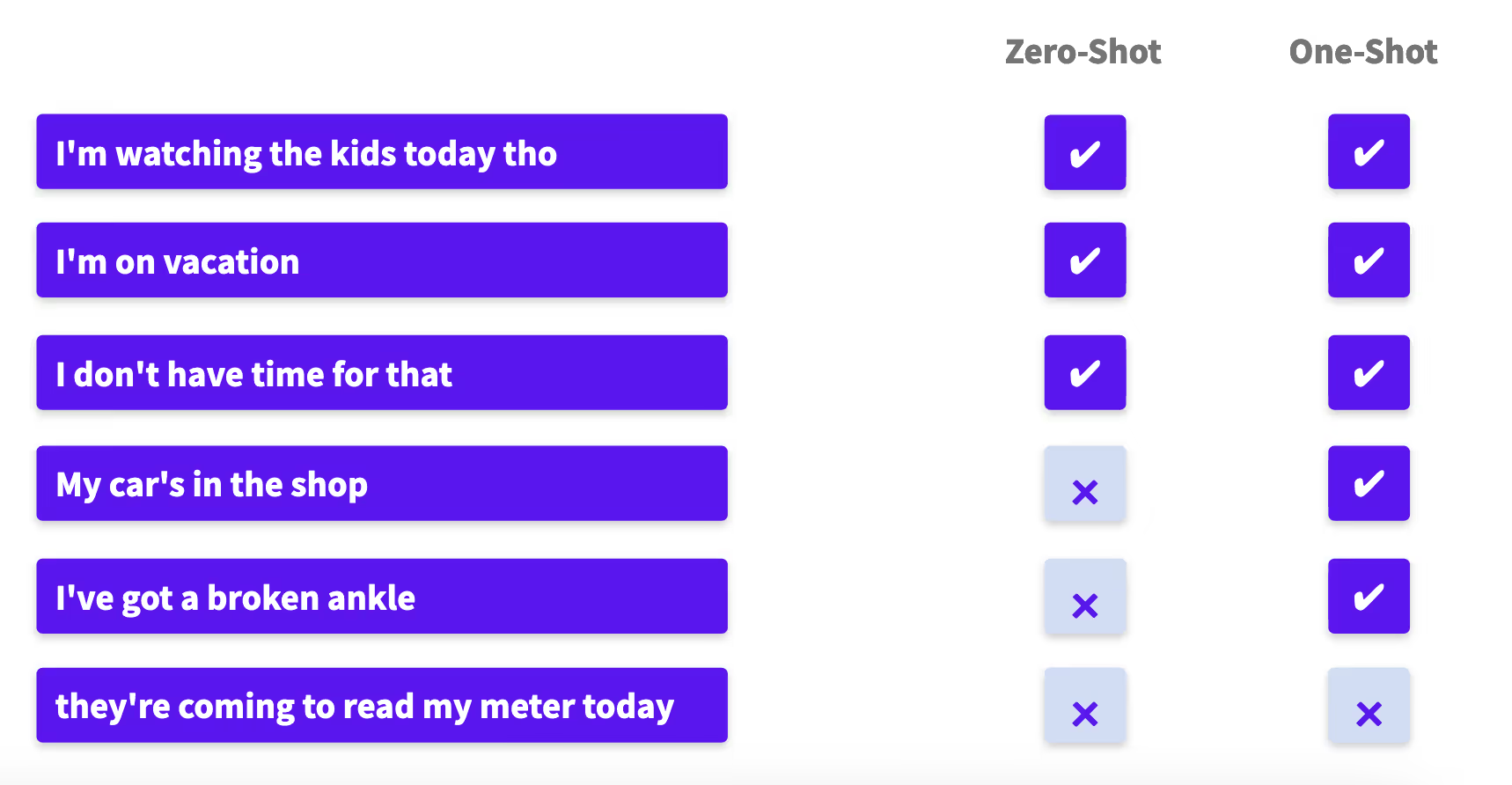

To evaluate what the intentless model already knows, and what it can learn, let's take a look at some test cases. Each test case is a copy of the conversation above, with the second user message “I have a broken leg” swapped out for a different reason why someone might not be able to come into the branch.

In the zero-shot column, we see that the intentless model, out of the box, already gets 3 of the 6 cases right. In the one-shot column, we see that with just 1 training example, all but one of the cases (5 of 6) are handled correctly.

The power of LLMs makes the intentless model incredibly data-efficient.

Generative AI, safe for enterprises to use

Because intentless dialogue is implemented as a Rasa policy, it comes with two major benefits:

- It does not hallucinate. There is no risk of sending factually incorrect, made-up nonsense to users

- It integrates seamlessly with hard-coded business logic

Here’s how that integration works:

Forms are a convenient way in Rasa to capture some business logic. For example, when a user says that they’d like to open a savings account, we can trigger a form that collects the necessary information from the user and guides them through the process.

A form can be activated by the intentless model, or by detecting an intent and providing a rule or a story. Once the form is active, it runs the show and drives the conversation forward according to the business logic.

That business logic is known upfront and isn’t something you need a model to learn. The benefit of using forms is that your logic is externalized and easily editable.

Adding the IntentlessPolicy to your bot doesn’t interfere with your existing forms. It goes further than that: it can handle contextual interjections and digressions while a form is active.

When we talk about getting rid of intents, what we’re actually talking about is making intents optional. Use them when they make sense, but don’t be limited by them.

For example, in the conversation below, the intentless policy kicked in to answer the user’s question, before handing control back to the form. All we had to do for this to work was provide an explanation of the address requirement as one of the possible responses the bot can send.

In an intent-based world, we would have to define a generic explain intent to capture a question like “why do you need to know that?”, and then explicitly define how to handle that question at this specific point in the flow. With our intentless model, this just works automatically.

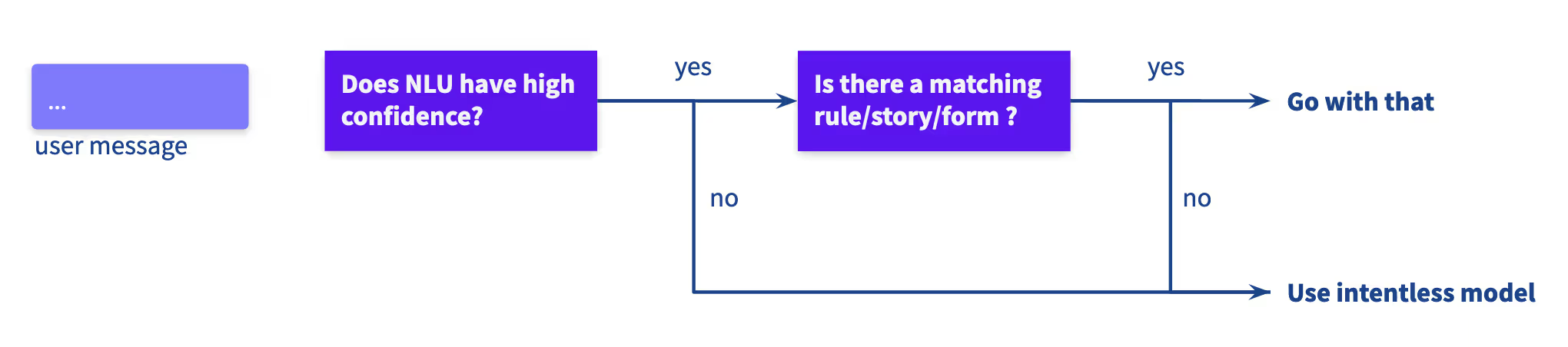

The IntentlessPolicy works perfectly in conjunction with intents, rules, stories, and forms. You can reason about the intentless policy the same way you think about a fallback - it kicks in when the NLU model has low confidence, or when an intent has been matched but there is no rule or story that tells your bot what to do. But this model is much more than a fallback - it’s set up to give the right answer.

By adjusting the thresholds where this policy kicks in, you can introduce it in a completely non-disruptive way to an existing project. By gradually introducing the intentless model, you can start to replace more and more “Sorry, I didn’t get that” messages with actually helpful responses and see your KPIs go up as a result.
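The fallback-style behaviour described above can be sketched as a small decision function. This is a toy model of the idea, not Rasa's implementation or configuration: `NluResult`, `pick_handler`, the handler labels, and the default threshold of 0.7 are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class NluResult:
    intent: str
    confidence: float


def pick_handler(nlu, intents_with_rules, threshold=0.7):
    """Decide which component should choose the bot's next action.

    The intentless model takes over when the NLU model is unsure, or when the
    predicted intent has no rule or story attached to it.
    """
    if nlu.confidence < threshold:
        return "intentless"
    if nlu.intent not in intents_with_rules:
        return "intentless"
    return "rules_and_stories"


covered = {"open_account"}  # hypothetical intent that already has a rule or story

print(pick_handler(NluResult("open_account", 0.95), covered))
print(pick_handler(NluResult("open_account", 0.40), covered))
print(pick_handler(NluResult("check_balance", 0.95), covered))
```

In this sketch, raising `threshold` hands more messages to the intentless model and lowering it keeps more of them on the existing intent-based paths, which is what makes a gradual rollout possible.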

Our intentless future

As we move towards level 5 conversational AI, it's becoming increasingly clear that we need to move away from the rigidity of intent-based models. At Rasa, we believe that an intentless approach to building chatbots is the way forward, and we're excited about the possibilities it presents.

I’ve only shown a couple of examples here of what the intentless policy can do, but there is much more to come.

We've seen that an intentless model can deliver significant benefits in terms of ease of development and improved user experience. By eliminating the need for manually defining intents, we can bootstrap new chatbots in seconds, while also reducing the overhead of scaling our teams and adding new functionality to existing bots.

But the benefits go beyond just ease of use. With an intentless approach, chatbots are better equipped to understand messages in context and handle contextual interjections with ease. This means that users are less likely to encounter frustrating "I didn't get that" responses, resulting in a more satisfying and engaging experience.

Rasa’s vision is to transform how people interact with organizations through AI. To do that, we have to help the teams building conversational AI get out of their bubble so that bots can understand users when they express their needs in their own words.