I think it's time we started creating a common language for talking about the structure of conversations with AI assistants.

Web app UX has a common language

We have a weekly meeting at Rasa called "UX Problem Solving" where product designers and frontend and backend engineers propose and debate how to solve a problem in our UX. For example, in Rasa X we recently made the domain file editable in the UI. The challenge was that your domain file also contains the templates your assistant uses to send messages, and the UI already has a separate tab for editing those, so showing them in the domain editor as well would duplicate information.

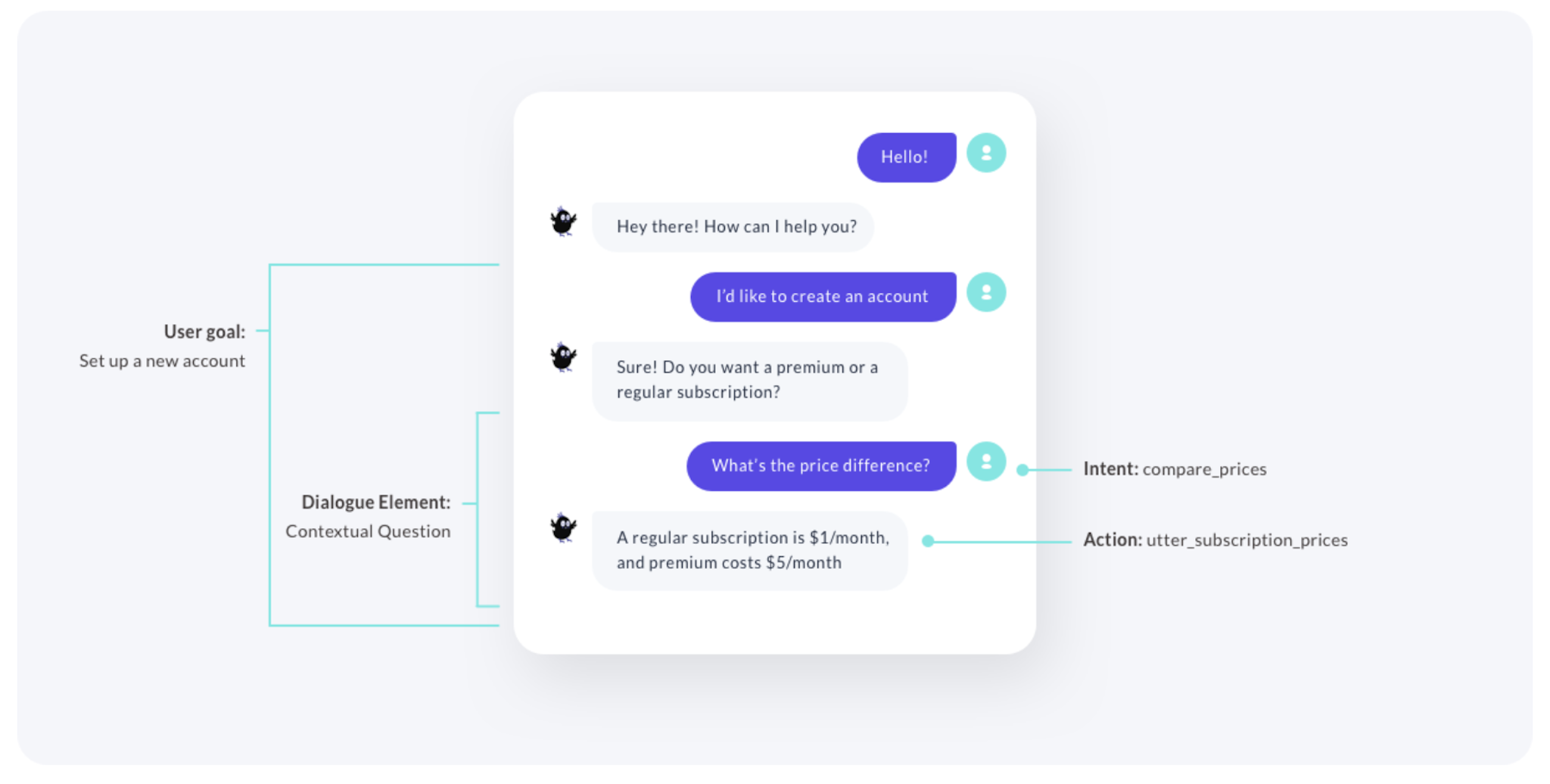

We debated possible solutions using words like modal, popup, and hover state. But as a community, we don't yet have the vocabulary for a similar discussion about the elements of dialogue. We talk about intents, entities, and actions, but those concepts are too low-level for this kind of discussion. We don't discuss margin vs. padding in our UX meeting, and in the same way we shouldn't have to discuss conversations in terms of intents and actions.

Conversational AI needs a common language

We think it's helpful to split conversations up into user goals, where each user goal can mix and match reusable dialogue elements.

In this first version, we've defined 13 dialogue elements and split them into three categories:

- small talk
- completing tasks
- guiding users
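For developers on the team, the idea of user goals built from reusable elements can be pictured as a small data model. The Python sketch below is purely illustrative and is not part of Rasa: the `Category`, `DialogueElement`, and `UserGoal` classes are hypothetical, and apart from "explicit confirmation" the element and goal names are placeholders, not the actual 13 elements from the docs.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Category(Enum):
    """The three categories of dialogue elements."""
    SMALL_TALK = "small talk"
    COMPLETING_TASKS = "completing tasks"
    GUIDING_USERS = "guiding users"


@dataclass(frozen=True)
class DialogueElement:
    """A reusable building block of a conversation (hashable, so it can be shared)."""
    name: str
    category: Category


@dataclass
class UserGoal:
    """Something the user wants to achieve, composed from reusable elements."""
    name: str
    elements: list[DialogueElement] = field(default_factory=list)

    def categories(self) -> set[Category]:
        # Which categories of element this goal draws on.
        return {element.category for element in self.elements}


# "explicit confirmation" comes from the post; the other elements are hypothetical placeholders.
explicit_confirmation = DialogueElement("explicit confirmation", Category.GUIDING_USERS)
greeting = DialogueElement("greeting", Category.SMALL_TALK)  # hypothetical
fill_form = DialogueElement("fill in a form", Category.COMPLETING_TASKS)  # hypothetical

# Two hypothetical user goals that mix and match, reusing the same confirmation element.
book_table = UserGoal("book a table", [greeting, fill_form, explicit_confirmation])
cancel_booking = UserGoal("cancel a booking", [explicit_confirmation])

shared = set(book_table.elements) & set(cancel_booking.elements)
print(sorted(e.name for e in shared))  # ['explicit confirmation']
```

Because each element is defined once and only referenced by goals, improving how "explicit confirmation" behaves would improve every goal that uses it.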

One example from the guiding-users category is the "explicit confirmation" dialogue element, which is useful when your assistant isn't sure how to help the user.

The docs for this element include a code snippet that uses the `TwoStageFallbackPolicy`. But a name like "Two Stage Fallback" only makes sense to a developer, so for the dialogue elements we looked for names that would be intuitive to every member of a product team.
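To show what explicit confirmation means in code, here is a minimal, framework-independent Python sketch: when the assistant's confidence in its best guess is below a threshold, it asks the user to confirm that guess instead of acting on it, and asks them to rephrase if they say no. This is only loosely modeled on the idea behind two-stage fallback; it is not Rasa's `TwoStageFallbackPolicy` API, and the `Prediction` class, the `respond` and `after_confirmation` functions, and the 0.7 threshold are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical threshold; a real assistant would tune this value.
CONFIDENCE_THRESHOLD = 0.7


@dataclass
class Prediction:
    """The assistant's best guess at what the user wants, with a confidence score."""
    intent: str
    confidence: float


def respond(prediction: Prediction) -> str:
    """Act directly when confident; otherwise explicitly confirm the best guess."""
    if prediction.confidence >= CONFIDENCE_THRESHOLD:
        return f"handle:{prediction.intent}"
    # Not sure how to help, so ask the user to confirm before acting.
    return f"Did you mean '{prediction.intent}'?"


def after_confirmation(prediction: Prediction, user_affirmed: bool) -> str:
    """Next turn: proceed if the user said yes, otherwise ask them to rephrase."""
    if user_affirmed:
        return f"handle:{prediction.intent}"
    return "Sorry, could you rephrase that?"


guess = Prediction("check_balance", 0.42)
print(respond(guess))  # Did you mean 'check_balance'?
print(after_confirmation(guess, user_affirmed=False))  # Sorry, could you rephrase that?
```

A product designer can discuss this as "explicit confirmation" without needing to know which policy or threshold implements it.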

We hope that product teams can discuss conversations more effectively if they can talk at the right level of abstraction, and introducing dialogue elements is a step in that direction. You can find them in the Rasa docs, and if you have a suggestion, please join this forum thread or open a pull request!