At Rasa, we constantly innovate to challenge the status quo of conversational AI. With that goal, we’re proud to introduce a new, LLM-native paradigm for building reliable conversational AI: CALM (Conversational AI with Language Models).

CALM delivers a massive leap in two dimensions: it makes assistants far more intelligent, and it makes conversational AI much easier to build. It leverages the power, fluency, and time-to-value of LLMs while retaining the controls and guarantees of NLU-based systems.

You can build a sophisticated, LLM-native assistant with CALM while guaranteeing zero hallucination and faithful execution of your business logic. CALM is how enterprises can put next-generation assistants in production today.

Adding to our suite of offerings, we’re thrilled to launch the new Rasa Platform, an LLM-native solution providing pro-code and no-code development of CALM assistants.

This post is a high-level overview of CALM and the reasons we’re so excited about it. And for those with questions, don’t miss the FAQ section at the end.

Towards Level 5 Assistants

Rasa’s vision is to transform how people interact with organizations through AI. That means that individuals can express themselves in their own words, while AI assistants figure out how the organization can help them. We describe this in our 5 levels of conversational AI. With each level, the task of translating personal requests into the organization’s language becomes less burdensome. CALM is a leap in that direction.

CALM has three key components: business logic, dialogue understanding, and conversation repair. I will introduce each of them and show how they work together.

Business Logic

At Rasa, we are used to building assistants that get things done for users, and CALM lets us natively combine LLMs with your business logic. In CALM, we describe business logic using flows. A flow contains steps to collect information from the user, call APIs, and follow any branching logic.

For example, consider this flow for transferring money. It says we need to:

- Ask the user for the recipient and the amount to transfer

- Call an API to check if the user has enough funds to complete the transaction

- Either complete the transaction or inform the user this isn’t possible
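
The three steps above can be sketched in a few lines of Python. This is only an illustration of the idea, not how flows are actually written in Rasa: the function `next_step`, the slot names, and the injected `has_sufficient_funds` check are all hypothetical.

```python
from typing import Callable, Dict

# Information the flow has to collect, in order (hypothetical slot names).
REQUIRED_SLOTS = ("recipient", "amount")


def next_step(
    slots: Dict[str, object],
    has_sufficient_funds: Callable[[float], bool],
) -> str:
    """Return the next thing the assistant should do in the transfer flow."""
    # Step 1: ask for any information that is still missing.
    for name in REQUIRED_SLOTS:
        if slots.get(name) is None:
            return f"ask:{name}"
    # Step 2: call the (injected, hypothetical) funds-check API.
    if has_sufficient_funds(float(slots["amount"])):
        # Step 3a: enough funds, so complete the transaction.
        return "action:execute_transfer"
    # Step 3b: otherwise tell the user the transfer is not possible.
    return "say:insufficient_funds"
```

Note that nothing in this sketch describes what the user might say. It only states what the business needs to know and what happens next.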

The most important thing to notice about this business logic is that it doesn’t include any description of the user’s side of the conversation. There’s no box labeled ‘intent’, or lines describing “if the user says this and then says this”.

When I talk to enterprise teams about their business logic, they usually show me a flow chart detailing every possible conversation a user might have on a subject. I find these awfully hard to read, because they are trying to cram too much information into one diagram. They’re representing both the business logic and all the ways the user can navigate it. This usually looks like a plate of spaghetti.

In CALM, your flows describe your actual business logic. The user’s side of the conversation is handled through Dialogue Understanding and Automatic Conversation Repair.

A new way to understand

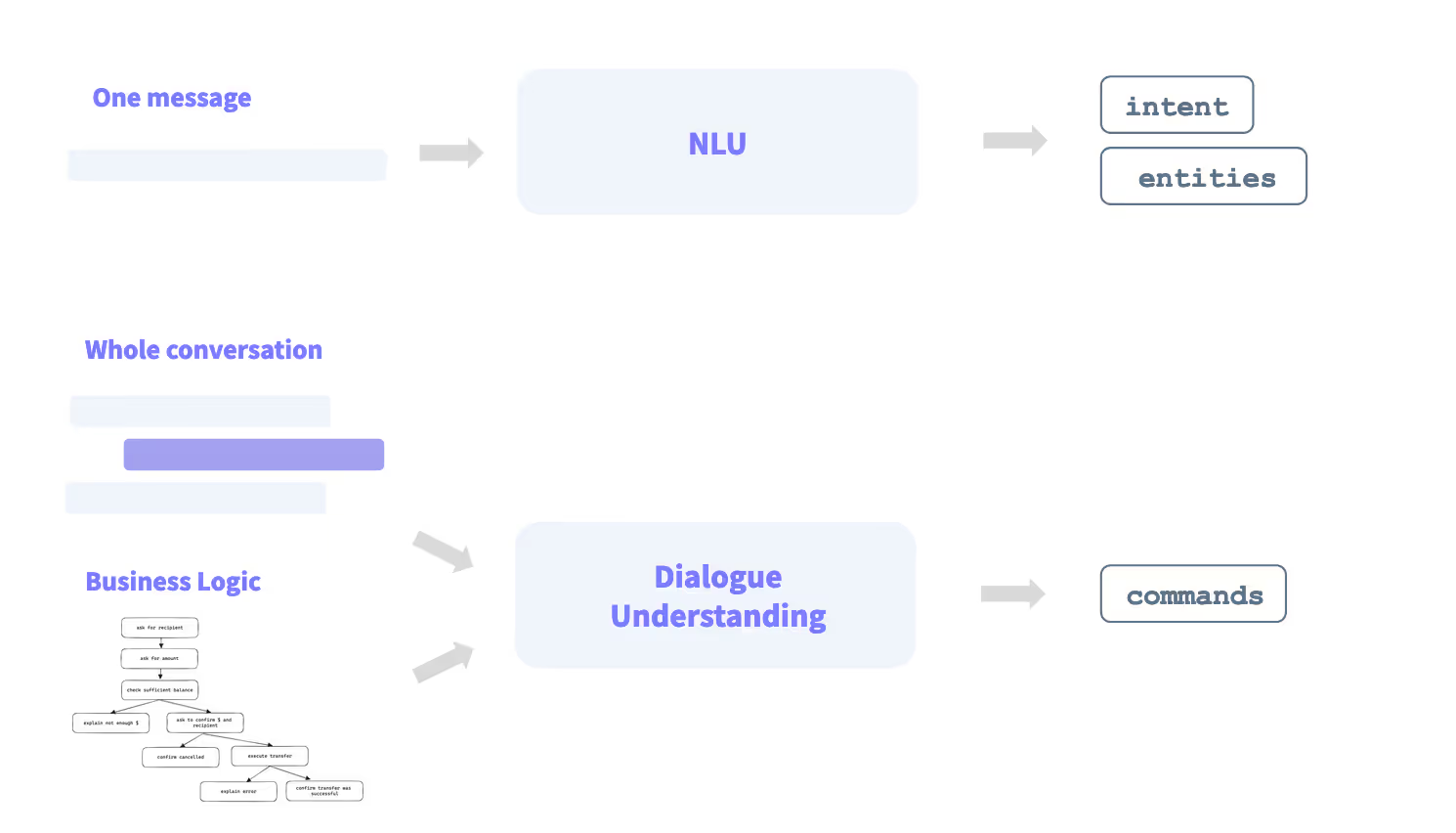

A big shift in CALM is that we no longer rely on “NLU” models. Within conversational AI, NLU (Natural Language Understanding) describes processing user messages and predicting intents and entities to represent what those messages mean.

CALM uses a new approach called Dialogue Understanding (DU), which translates what users are saying into what that means for your business logic. It differs from a traditional NLU approach in key ways:

- While NLU interprets one message in isolation, DU considers the greater context: the whole back-and-forth of the conversation as well as the assistant’s business logic.

- NLU systems output intents and entities representing the information in a message, but DU outputs a sequence of commands representing how the user wants to progress the conversation.

- NLU systems match an isolated input to a fixed list of intents. DU instead is generative, and produces a sequence of commands according to an internal grammar. This gives us a far richer language to represent what users want.
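
To make the contrast concrete, here is a minimal sketch of what a sequence of commands could look like and how it might update the conversation state. The command classes `StartFlow` and `SetSlot`, the `DialogueState` type, and the hand-written command list are assumptions made for illustration. In CALM the commands would be generated by an LLM, which this sketch does not attempt to show.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Union


@dataclass(frozen=True)
class StartFlow:
    """Hypothetical command: the user wants to begin a flow."""
    flow: str


@dataclass(frozen=True)
class SetSlot:
    """Hypothetical command: the user provided a piece of information."""
    name: str
    value: str


Command = Union[StartFlow, SetSlot]


@dataclass
class DialogueState:
    active_flow: Optional[str] = None
    slots: Dict[str, str] = field(default_factory=dict)


def apply_commands(state: DialogueState, commands: List[Command]) -> DialogueState:
    """Apply each command in order to progress the conversation."""
    for command in commands:
        if isinstance(command, StartFlow):
            state.active_flow = command.flow
        elif isinstance(command, SetSlot):
            state.slots[command.name] = command.value
    return state


# What a DU component might output for "I need to send Jo 50 dollars".
commands = [
    StartFlow("transfer_money"),
    SetSlot("recipient", "Jo"),
    SetSlot("amount", "50"),
]
state = apply_commands(DialogueState(), commands)
```

A single message produces several commands here, which a single intent label could not express.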

By far the biggest benefit of Dialogue Understanding is that it frees us from intents. Until now, we’ve used NLU models to translate things users are saying into things our assistant can do, and intents have served as the “language” we used to do that translation.

Intents reduce the problem of understanding human language to categorizing messages into predefined buckets. That’s a powerful simplification, but also a bottleneck to scaling. If you’ve built an AI assistant, you probably felt your intents becoming a house of cards. A team member wants to introduce a new intent, but it’s too similar to an existing one. Or users run into an edge case you want to handle. So you try to figure out the puzzle of where you can introduce a new intent that doesn’t break everything else.

For years, we’ve advocated moving away from intents; with CALM, that’s now a reality. CALM translates complex utterances into simple grammar driving your business logic.

Conversation Repair

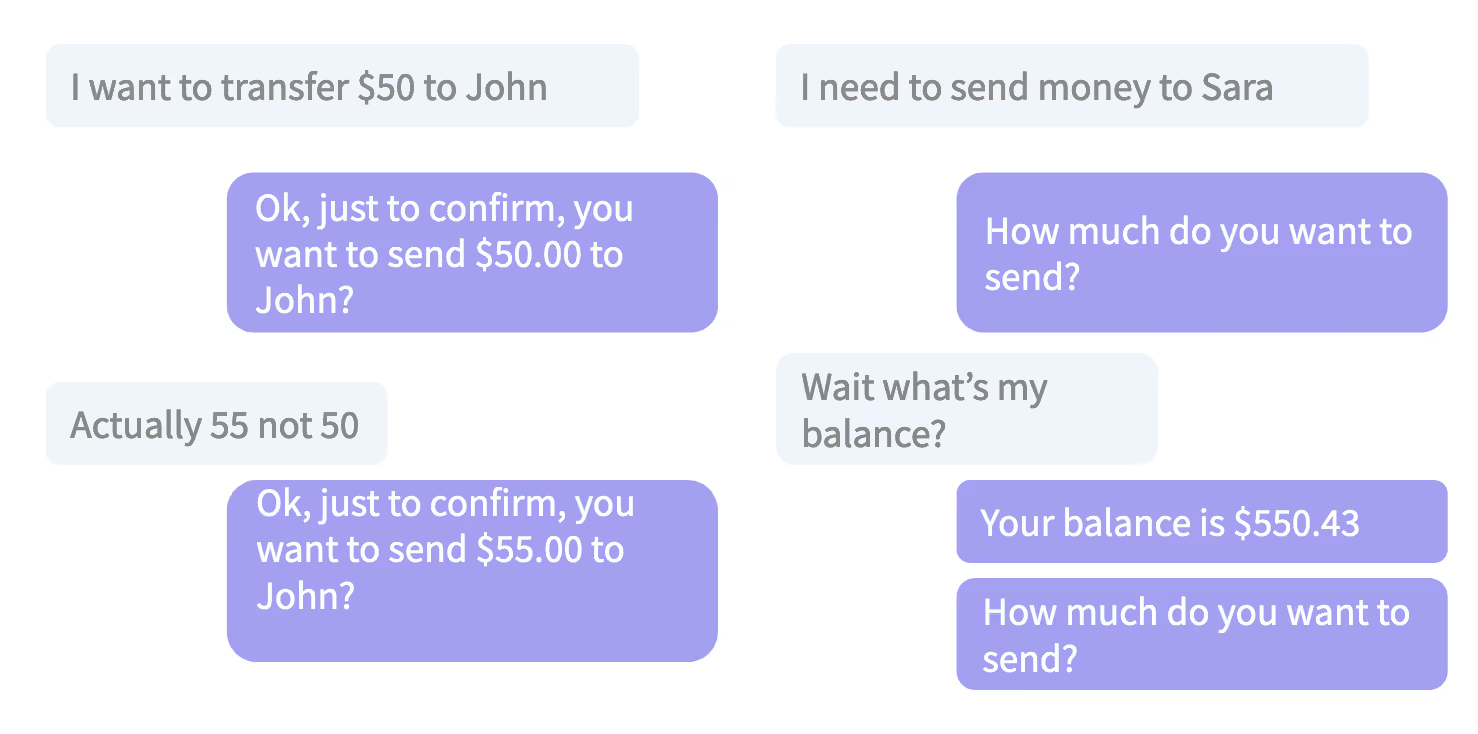

Dialogue Understanding translates what users say into what that means for your business logic. But real user behavior is more complex than just following the happy path. When your assistant asks a user for some information, conversation repair kicks in if the user does something other than provide the information you requested. CALM handles these cases out of the box using fully customizable patterns.

You can build a simple flow like the money transfer example above, and your assistant will automatically handle conversations where users change their mind, interject mid-flow, switch context, or ask clarifying questions.
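
One way to picture conversation repair is as a small set of generic rules applied on top of a stack of active flows. The sketch below is a simplified illustration under that assumption. The tuple-based command format, the function `handle_turn`, and the reply wording are hypothetical and are not Rasa's built-in patterns.

```python
from typing import Dict, List, Tuple


def handle_turn(
    stack: List[str],
    slots: Dict[str, str],
    commands: List[Tuple[str, ...]],
) -> List[str]:
    """Apply one turn of commands and return the repair messages to send."""
    messages: List[str] = []
    for command in commands:
        kind = command[0]
        if kind == "set_slot":
            _, name, value = command
            # Correction: the user changed a value they gave earlier.
            if name in slots and slots[name] != value:
                messages.append(f"Okay, I updated {name} to {value}.")
            slots[name] = value
        elif kind == "start_flow":
            # Interruption: pause the current flow and put the new one on top.
            if stack:
                messages.append(f"Pausing {stack[-1]}; we can continue it afterwards.")
            stack.append(command[1])
        elif kind == "cancel_flow":
            # Change of mind: drop the flow currently on top of the stack.
            if stack:
                messages.append(f"Okay, stopping {stack.pop()}.")
    return messages
```

Because these rules live outside any individual flow, the money transfer logic itself stays unchanged no matter how the user deviates from the happy path.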

Putting it all together

CALM is greater than the sum of its parts. The dialogue understanding and conversation repair components in CALM keep your business logic pure.

As a result, building assistants with CALM is very fast. We no longer have to start by mapping out dozens of possible user journeys and bootstrapping training data for intents and entities. You build the essence of your business logic, and conversations just work.

CALM is the biggest leap forward since we first started Rasa, and the feedback from our Beta is unlike anything I’ve seen before. It’s a new paradigm giving us the best of both worlds: LLMs and NLU.

Interested in what CALM can do? Ready to try the next big thing in conversational AI? Contact Rasa today and let’s get started!

Frequently Asked Questions

How can you guarantee zero hallucination?

By default, assistants built with CALM only send predefined, human-authored responses to users. There is no scope for hallucination because we don’t send generated text to end users. You can allow some generation by choosing to use contextual response rephrasing or by integrating RAG / enterprise search into your assistant, but it’s not required.

How is this actually different from predicting intents?

If I have a flow for transferring money, can’t I just train an intent to trigger that flow? Why is CALM better?

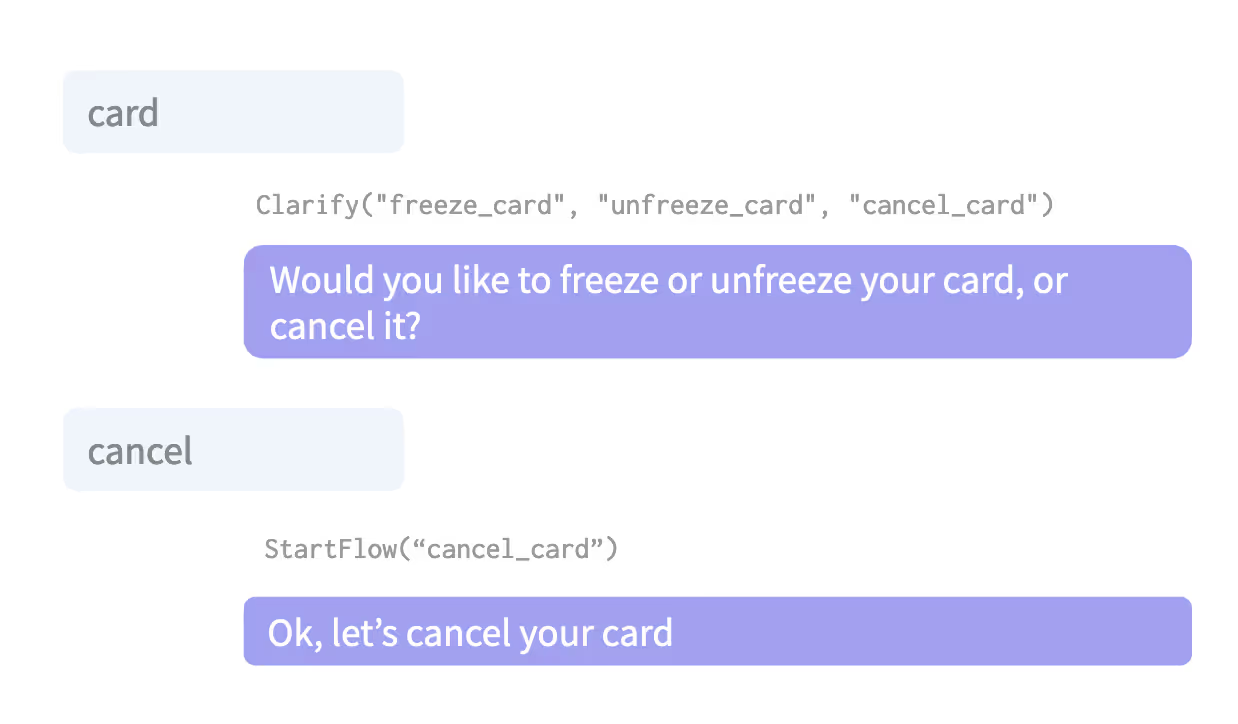

Intents work perfectly well for straightforward cases like this. That’s also why the whole industry has used them for so long. CALM is especially helpful for cases that are very hard to model with an NLU system. Take this conversation as an example:

If I wanted this to work with NLU, I would first think about what the NLU would output for these one-word messages, and then I would tell my dialogue manager how that sequence of intent-entity pairs would lead to starting this flow.

How does this compare with LLM Agents?

The “LLM Agents” idea has recently gained popularity in hacker circles. If you’re building an open-ended, “do anything” style personal assistant, then LLM Agents are a cool idea. LLM Agents give the end user full autonomy over the experience, generating and executing dynamic logic by calling APIs on-the-fly.

But enterprise AI assistants aren’t like that at all. We’re not giving end users free rein to interact with internal APIs. We want to reliably help users do a known list of things, like upgrading their account, changing billing information, ordering a new card, buying an extra bundle, etc. We need to faithfully and consistently follow the business logic of those processes.

CALM uses LLMs to understand what users want and translates that in the context of your business logic.

In CALM, even complex business logic is explicit, easily editable, and always followed faithfully. That’s faster, more robust, and more secure than LLM Agents, which recursively call an LLM to guess what should happen next.

CALM is also easy to debug because you can see exactly what commands were generated and if these deviate from what you expected. You can pinpoint exactly where something went wrong, unlike a chain of LLM calls. And because your assistant follows discrete steps, analytics and understanding user journeys are straightforward.

If you don’t have intents, how do you know what’s going on in your assistant / how do you do analytics?

Many teams use intents to keep track of their assistant’s most-used and best-performing features. In CALM, we do the same by tracking which flows are being triggered and how users progress along the flow steps.

Because CALM takes discrete steps, there is no need to use intents to approximate and track the topics customers have asked about. In fact, Rasa Pro’s analytics pipeline already supports flows and provides the ability to analyze user behavior.
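
As a rough illustration of flow-based analytics, the sketch below counts how many distinct conversations reached each step of each flow. It is not Rasa Pro's analytics pipeline: the event format `(conversation_id, flow, step)` and the function `step_reach` are assumptions made for this example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# Hypothetical event format: (conversation_id, flow, step).
Event = Tuple[str, str, str]


def step_reach(events: Iterable[Event]) -> Dict[Tuple[str, str], int]:
    """Count the distinct conversations that reached each (flow, step)."""
    conversations: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
    for conversation_id, flow, step in events:
        # A set ignores repeats, e.g. when a question is asked twice.
        conversations[(flow, step)].add(conversation_id)
    return {key: len(ids) for key, ids in conversations.items()}
```

Comparing the counts for consecutive steps of a flow shows where users drop off, which is the kind of question intents were previously used to approximate.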

How does CALM integrate with RAG?

Retrieval-Augmented Generation (RAG) is maturing as an enterprise search solution, allowing assistants to answer questions from documents without first translating them into Q&A pairs. CALM integrates natively with RAG-based enterprise search. When a user asks a question best answered by a supporting document, the dialogue understanding component outputs a command indicating this question should be answered using enterprise search. This works anywhere in a conversation, including in the middle of a flow. The user’s question is answered using the enterprise search component, and the assistant returns to the interrupted flow.
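
The interrupt-and-resume behavior described above can be sketched as follows. Everything here is a toy stand-in: the `DOCUMENTS` dictionary contains made-up example text, `search` uses simple word overlap rather than a real retrieval component, and `handle_search_command` is a hypothetical name.

```python
from typing import Dict, List

# Made-up example documents, for illustration only.
DOCUMENTS: Dict[str, str] = {
    "fees": "Transfers between domestic accounts are free of charge.",
    "limits": "The daily transfer limit is set in your account settings.",
}


def search(question: str) -> str:
    """Toy retrieval: return the document sharing the most words with the question."""
    words = set(question.lower().replace("?", "").split())

    def overlap(text: str) -> int:
        return len(words & set(text.lower().rstrip(".").split()))

    return max(DOCUMENTS.values(), key=overlap)


def handle_search_command(flow_stack: List[str], question: str) -> List[str]:
    """Answer a knowledge question, then return to the interrupted flow, if any."""
    replies = [search(question)]
    if flow_stack:
        # The stack is left untouched, so the paused flow resumes where it was.
        replies.append(f"(resuming flow: {flow_stack[-1]})")
    return replies
```

The key design point is that answering the question never modifies the flow stack, so the interrupted flow continues from the step where the user left it.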

How do I migrate to CALM?

We introduced CALM in a minor release of Rasa, which means it is backwards compatible. If you have an existing assistant built with Rasa, you can implement a single flow in CALM while maintaining your existing NLU model, stories, rules, forms, etc.