Function calling, or tool use, is all the rage in the world of LLM agents. And for good reason – it gives LLMs a way to interact with the world, reading and writing data. But for customer-facing AI, I believe "function calling" is the wrong abstraction. We need something robust and stateful. We need Process Calling.

The Current State: Function Calling / Tool Use

I’m using the terms “function calling” and “tool use” interchangeably. Both refer to an LLM deciding to invoke an API call when it needs to read or write some data.

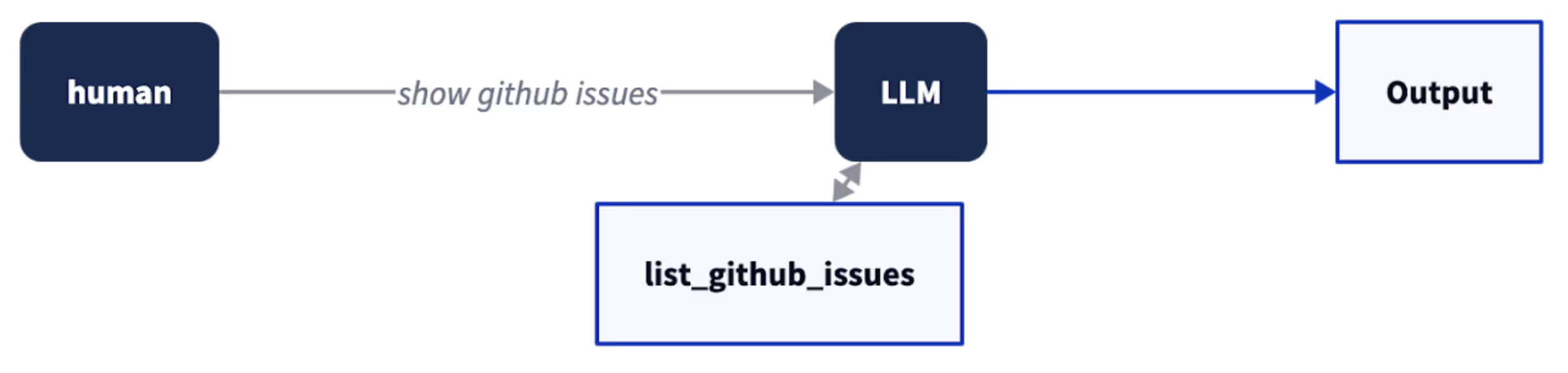

It typically looks like this:

The Model Context Protocol (MCP) is gaining popularity as a way to standardize this interaction pattern. The key point from the MCP docs is this: “tool operations should be focused and atomic”. One tool, one job. Fetch data. Update a record. Simple.
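To make the atomic pattern concrete, here is a minimal sketch of a tool registry and dispatcher. The tool names echo the ones used later in this post. The JSON shape of the model's tool call, the `TOOLS` registry and the order data are hypothetical stand-ins. This is not the MCP wire format or any vendor's API.

```python
import json
from typing import Any, Callable

# Hypothetical data standing in for real backend systems.
ORDERS = {"user-42": ["order-1001", "order-0987"]}

def list_user_order_history(user_id: str) -> list[str]:
    """Atomic tool: fetch a user's order IDs, nothing more."""
    return ORDERS.get(user_id, [])

def check_delivery_status(shipment_id: str) -> str:
    """Atomic tool: look up one shipment's status (stubbed)."""
    return "in_transit" if shipment_id.endswith("a") else "delivered"

# Registry mapping the tool names exposed to the model to Python callables.
TOOLS: dict[str, Callable[..., Any]] = {
    "list_user_order_history": list_user_order_history,
    "check_delivery_status": check_delivery_status,
}

def dispatch(tool_call_json: str) -> str:
    """Run one tool call emitted by the model (hypothetical JSON shape:
    {"name": ..., "arguments": {...}}) and return a JSON result to feed back."""
    call = json.loads(tool_call_json)
    result = TOOLS[call["name"]](**call["arguments"])
    return json.dumps({"name": call["name"], "result": result})
```

For example, `dispatch('{"name": "list_user_order_history", "arguments": {"user_id": "user-42"}}')` returns the JSON string `{"name": "list_user_order_history", "result": ["order-1001", "order-0987"]}`. Each call does exactly one job. Which call comes next is left entirely to the model.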

Real-world Use Cases Require More Than Atomic Tools

For customer-facing AI, what we need isn’t a tool but a process. Companies have established business processes, and a successful AI assistant guides users within these structures. Its role is to understand the user's need, identify the relevant company process, communicate how it can help, and then facilitate the steps needed to reach the user's goal, all while navigating the conversation fluently.

Think about customer service for an e-commerce site. A user doesn't usually come in with a neat, API-ready request. They say things like:

“You messed up my order.”

Okay, what does that mean?

- Not delivered yet?

- Delivered late?

- Only some of the items came?

- Wrong items?

- Extra items?

- Damaged items?

- Just unhappy with their purchase?

Each of these scenarios triggers a specific business process, often involving multiple API calls, clarifying questions, and conditional logic. For instance, if the issue is missing items, the internal business logic might look like this:

- Check Order History API: If one recent order, assume it. Otherwise, clarify with the user.

- Check Fulfillment API: Was the order split into multiple shipments?

- If split, check the Delivery API for each shipment: Are any still in transit?

- If deliveries complete: Were they left with a neighbor? Were any items out of stock?

- If out of stock: Ask the user: refund or wait 1 week for restock?
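Written as ordinary code, the missing-items logic is a short, deterministic function. The sketch below makes some assumptions:

- `FakeAPIs` is a hypothetical in-memory stand-in for the Order History, Fulfillment and Delivery APIs.
- The `("ask" | "inform" | "resolve", text)` return convention is also hypothetical.

Whenever the process needs something from the user, it returns a question instead of guessing.

```python
from dataclasses import dataclass, field

@dataclass
class FakeAPIs:
    """Hypothetical stand-ins for the Order History, Fulfillment and Delivery APIs."""
    orders: list[str]                                   # recent order IDs
    shipments: dict[str, list[str]]                     # order ID -> shipment IDs
    status: dict[str, str]                              # shipment ID -> "in_transit" | "delivered"
    left_with_neighbor: set[str] = field(default_factory=set)         # order IDs
    out_of_stock: dict[str, list[str]] = field(default_factory=dict)  # order ID -> items

def missing_items(apis: FakeAPIs, answers: dict[str, str]) -> tuple[str, str]:
    """Return the next step as (kind, text); `answers` holds what the user has told us."""
    # Order History API: assume the only recent order, otherwise clarify with the user.
    if len(apis.orders) == 1:
        order = apis.orders[0]
    elif "order_id" in answers:
        order = answers["order_id"]
    else:
        return ("ask", "Which of your recent orders is this about?")

    # Fulfillment API; if the order was split, Delivery API for each shipment.
    shipments = apis.shipments.get(order, [])
    if len(shipments) > 1 and any(apis.status[s] == "in_transit" for s in shipments):
        return ("inform", "Part of your order is still in transit.")

    # Deliveries complete: left with a neighbor, or items out of stock?
    if order in apis.left_with_neighbor:
        return ("inform", "Your package was left with a neighbor.")
    missing = apis.out_of_stock.get(order, [])
    if missing:
        choice = answers.get("restock_choice")
        if choice is None:
            return ("ask", f"Out of stock: {', '.join(missing)}. Refund, or wait 1 week for restock?")
        return ("resolve", "refund issued" if choice == "refund" else "restock order placed")
    # Hypothetical fallback; the process described above doesn't specify one.
    return ("resolve", "escalate to a human agent")
```

Every branch is an ordinary `if`, so there is no prompt for the model to misread. The LLM's job shrinks to asking the returned questions naturally and filling `answers` from the user's replies.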

Notice that the business logic represented by this process does not specify pre-defined conversation paths. For a given task, it just describes:

- What information is needed from the user

- What information is needed from APIs

- Any branching logic based on the information that’s collected
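Because the process only says what it needs, it can also be written as data. Below is a minimal sketch using a hypothetical three-step vocabulary (`collect`, `call`, `branch`) and a tiny interpreter. It is not Rasa's flow syntax, just an illustration of the three ingredients above.

```python
from typing import Any

# Hypothetical step types:
#   ("collect", slot)            -> information needed from the user
#   ("call", slot, fn)           -> information needed from an API: state[slot] = fn(state)
#   ("branch", predicate, a, b)  -> go to step index a if predicate(state) else b
Step = tuple

def run(steps: list[Step], state: dict[str, Any]) -> tuple[str, Any]:
    """Advance until a slot must be collected from the user or the steps run out.
    Returns ("ask", slot) or ("done", state); calling again with a fuller state resumes."""
    i = 0
    while i < len(steps):
        kind = steps[i][0]
        if kind == "collect":
            if steps[i][1] not in state:
                return ("ask", steps[i][1])
            i += 1
        elif kind == "call":
            if steps[i][1] not in state:  # don't repeat API calls on resume
                state[steps[i][1]] = steps[i][2](state)
            i += 1
        elif kind == "branch":
            i = steps[i][2] if steps[i][1](state) else steps[i][3]
        else:
            raise ValueError(f"unknown step kind: {kind}")
    return ("done", state)

# The out-of-stock branch of the missing-items process, expressed as data.
restock = [
    ("call", "out_of_stock", lambda s: ["lamp"]),        # stubbed, hypothetical inventory API
    ("branch", lambda s: bool(s["out_of_stock"]), 2, 4),
    ("collect", "restock_choice"),                        # refund, or wait 1 week?
    ("call", "resolution", lambda s: s["restock_choice"]),
]
```

Nothing in `restock` says what the assistant should literally say. `run(restock, {})` returns `("ask", "restock_choice")`, and calling `run` again once the user has answered picks up from there.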

Tool Use for Multi-Step Processes: “Prompt and Pray”

In a pure "tool use" paradigm, the LLM gets access to atomic tools: list_user_order_history, show_fulfillment_details_for_order, check_delivery_status. So how do these compose together in a larger task?

I've seen two common, problematic approaches:

- ReAct: Wrap the LLM call in a `while` loop and use a ReAct-style prompt to let the LLM guess the correct sequence of tool calls.

- Stuffing the prompt: Same as above, but now you also cram a lengthy natural language description of your process into the LLM's prompt, hoping it follows instructions.
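For contrast, a ReAct-style loop looks roughly like the sketch below. `fake_llm` is a hypothetical, scripted stand-in for a real model call that returns either a tool call or a final answer. The dictionary shapes are assumptions for illustration.

```python
from typing import Any, Callable

def react_loop(llm: Callable[[list[dict]], dict],
               tools: dict[str, Callable[..., Any]],
               user_message: str,
               max_steps: int = 10) -> str:
    """Generic ReAct-style loop: the model picks the next action on every iteration."""
    transcript: list[dict] = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):  # a bounded version of `while True`
        decision = llm(transcript)  # one more LLM call, in series
        if decision["type"] == "final":
            return decision["content"]
        # The model chose a tool; run it and feed the observation back.
        result = tools[decision["tool"]](**decision["args"])
        transcript.append({"role": "tool", "name": decision["tool"], "content": result})
    return "Sorry, I couldn't complete that."

def fake_llm(transcript: list[dict]) -> dict:
    """Scripted stand-in for a model: calls one tool, then answers."""
    if transcript[-1]["role"] == "user":
        return {"type": "tool", "tool": "check_delivery_status", "args": {"shipment_id": "s1"}}
    return {"type": "final", "content": f"Shipment status: {transcript[-1]['content']}"}
```

The code contains no business logic at all. The order of tool calls lives entirely in the model's output, and every step costs another sequential model call.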

Both of these amount to a prompt and pray strategy, producing incredibly flaky assistants that drive developers to pull their hair out.

This is a terrible way to build software. It reminds me of the argument behind Dijkstra's famous letter, “Go To Statement Considered Harmful.” GOTO statements made it a nightmare for anyone debugging a program to follow execution paths: jumps could go anywhere! That made it impossible to write modular code.

Relying on an LLM to orchestrate a multi-step process has similar problems (just a lot worse):

- Opacity: Why did it call function A instead of B this time? We can only guess by looking at intermediate LLM outputs, and can only fix issues via trial and error.

- Broken Modularity: We can't reliably establish preconditions and postconditions for code blocks; everything relies on the LLM’s interpretation.

- Inconsistent Control Flow: The program's structure isn't determined by inspectable rules but by the LLM's interpretation of context and non-deterministic sampling.

Companies like Anthropic and LlamaIndex recognize the need to combine LLMs with deterministic code, but their “workflows” only describe what happens in response to a single user message. Process calling, on the other hand, can handle tasks that require back-and-forth with the user.

Process Calling: Stateful Collaboration

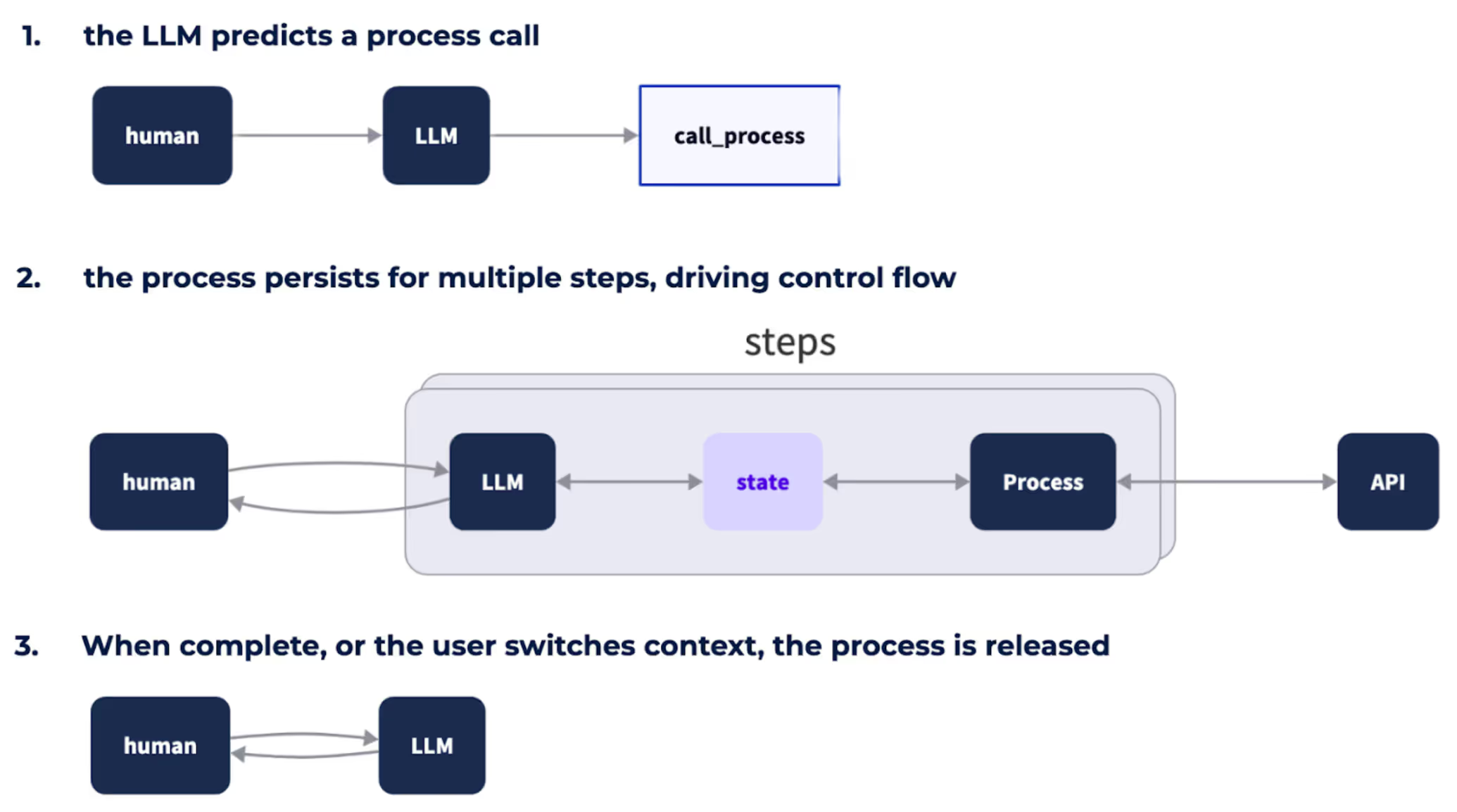

This is where Process Calling comes in. Definition: With Process Calling, the LLM invokes and then collaborates with a long-running, stateful process.

Mechanism:

- The user interacts, and the LLM identifies the need for a specific, defined business process and invokes it.

- Crucially, the Process then persists. It doesn't just execute one step and disappear.

- It reads and writes to a shared state accessible by both the Process and the LLM.

- It executes defined business logic (like checking those order, fulfillment, and delivery APIs in sequence).

- It handles branching based on the information collected.

- When it needs information from the user, it prompts for user input via the LLM, allowing the LLM to handle the natural language interaction for that specific step.

- The Process dictates the sequence of business operations, and the LLM handles the conversational turns around those operations.

- When the process completes, or the user switches context, the process is released.
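The mechanism above can be sketched using Python generators as the process runtime. Several names here are hypothetical and chosen for illustration:

- `ActiveProcess` wraps a running process.
- The shared `state` dict is visible to both the process and the LLM.
- `restock_process` holds the business logic.
- `turn` handles one conversational turn.

In a real assistant, the keyword matching in `turn` would be an LLM call that extracts the user's answer and phrases the next question.

```python
from typing import Any, Callable, Generator, Optional

# A process is a generator: it yields the name of a slot it needs from the user
# and finally returns its result. `state` is shared with the LLM side.
ProcessFn = Callable[[dict], Generator[str, None, str]]

def restock_process(state: dict[str, Any]) -> Generator[str, None, str]:
    """Deterministic business logic for the out-of-stock branch."""
    state["out_of_stock"] = ["lamp"]  # stand-in for a hypothetical inventory API call
    while state.get("restock_choice") not in ("refund", "wait"):
        yield "restock_choice"  # pause here and ask the user via the LLM
    return "refund issued" if state["restock_choice"] == "refund" else "restock order placed"

class ActiveProcess:
    """A running process plus the state it shares with the LLM."""

    def __init__(self, process_fn: ProcessFn) -> None:
        self.state: dict[str, Any] = {}
        self.gen = process_fn(self.state)
        self.waiting_for: Optional[str] = None
        self.result: Optional[str] = None
        self._advance()  # run until the first request for user input

    def _advance(self) -> None:
        try:
            self.waiting_for = next(self.gen)
        except StopIteration as stop:  # the process has completed
            self.waiting_for, self.result = None, stop.value

    def fill(self, slot: str, value: Any) -> None:
        """Write a value extracted from the user's message, then resume the process."""
        self.state[slot] = value
        self._advance()

    @property
    def done(self) -> bool:
        return self.waiting_for is None

def turn(proc: ActiveProcess, user_message: str) -> str:
    """One conversational turn; keyword matching stands in for the LLM here."""
    text = user_message.lower()
    if proc.waiting_for == "restock_choice":
        value = "refund" if "refund" in text else "wait" if "wait" in text else None
        proc.fill("restock_choice", value)
    if proc.done:
        return f"Done: {proc.result}."  # the caller can now release the process
    items = ", ".join(proc.state["out_of_stock"])
    return f"Sorry, {items} is out of stock. Would you like a refund, or to wait 1 week?"
```

An assistant would create `ActiveProcess(restock_process)` once the LLM decides this process applies. It would pass each later user message through `turn` and drop the object when `done` is true or the user changes topic. If the answer is unclear, the question is asked again, and that loop lives in the process, not in a prompt.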

Tool calling is a special case of process calling where the process has a single step.
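In a generator-based model like the one sketched here (an assumption, not a prescribed runtime), an atomic tool becomes a process that never pauses to ask the user anything. `as_process` and `run_to_completion` are hypothetical helper names.

```python
from typing import Any, Callable, Generator

ProcessFn = Callable[[dict], Generator[str, None, Any]]

def as_process(tool: Callable[..., Any], **kwargs: Any) -> ProcessFn:
    """Wrap an atomic tool as a one-step process that never yields (never asks the user)."""
    def process(state: dict) -> Generator[str, None, Any]:
        state["result"] = tool(**kwargs)
        return state["result"]
        yield  # unreachable; its presence makes this function a generator
    return process

def run_to_completion(process: ProcessFn) -> Any:
    """Start a process; a single-step process finishes on its first advance."""
    gen = process({})
    try:
        request = next(gen)
    except StopIteration as stop:
        return stop.value
    raise RuntimeError(f"process unexpectedly asked the user for {request!r}")
```

For instance, `run_to_completion(as_process(lambda order_id: f"{order_id}: delivered", order_id="o1"))` returns `"o1: delivered"` without any conversational turns.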

The benefits of process calling are:

- Reliable, deterministic execution of business logic: Your core processes run as designed, every time. No more LLM guessing games for critical steps.

- True modularity: Business processes become self-contained, reusable modules with clear responsibilities. You can actually reason about their pre- and post-conditions.

- Straightforward debugging: When something goes wrong in the business logic, you're looking at a defined process state and execution path, not poring over LLM traces trying to divine a model’s reasoning.

- Up to 80% lower token usage and up to 8x lower latency: You avoid making multiple LLM calls in series.

The cost of process calling is that you have to invest some time up front to encode your business process in a formal language. But code generation makes this fast and easy, and this initial investment pays dividends almost immediately by drastically reducing the time you'd otherwise spend debugging flaky LLM-driven flows and wrestling with prompt engineering.

CALM

In 2023, we introduced a new approach to conversational AI called CALM. Process Calling (which we often refer to as "business logic" in the Rasa docs) is one of its three core pillars.

At the time, ReAct agents were all the rage and the idea of combining LLM agents with deterministic processes was heretical. But as I’m seeing more libraries adopt a similar approach, I believe Process Calling deserves to be recognized and discussed as a fundamental pattern in agentic design, right alongside concepts like RAG, tool use (in its atomic sense), and reflection.

There’s more to CALM than just Process Calling, of course. But this stateful collaboration is key because it also enables the LLM to perform more robustly by providing explicit, structured context about the state of the overarching process at every conversational turn. The LLM isn't left to guess; it's empowered.
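One way to give the LLM that explicit context is to render the active process's state into the prompt on every turn. The `ProcessSnapshot` fields and the prompt layout below are hypothetical. This is a sketch of the idea, not how CALM actually formats its prompts.

```python
from dataclasses import dataclass, field
from typing import Any, Optional

@dataclass
class ProcessSnapshot:
    """Hypothetical view of an active process, shared with the LLM each turn."""
    name: str
    collected: dict[str, Any] = field(default_factory=dict)
    waiting_for: Optional[str] = None

def build_context(snapshot: Optional[ProcessSnapshot], user_message: str) -> str:
    """Render the process state as a structured block so the LLM doesn't have to infer it."""
    lines = []
    if snapshot is None:
        lines.append("Active process: none")
    else:
        lines.append(f"Active process: {snapshot.name}")
        for slot, value in snapshot.collected.items():
            lines.append(f"  collected {slot} = {value!r}")
        if snapshot.waiting_for:
            lines.append(f"  waiting for: {snapshot.waiting_for}")
    lines.append(f"User: {user_message}")
    return "\n".join(lines)
```

With `build_context(ProcessSnapshot("missing_items", {"order_id": "o1"}, "restock_choice"), "just refund me")`, the model sees that the process is waiting for `restock_choice`. It can therefore read "just refund me" as the answer to a known pending question rather than interpreting it from scratch.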

Build Processes, Not Just Chains of Tools

For developers building assistants in production – no matter which framework you use – the distinction matters. If your agent needs to guide a user through a multi-step business operation, don't just give your LLM a bag of atomic tools and hope it improvises correctly every time.

Instead, think in terms of Process Calling. It's a better abstraction for these critical interactions. Define your core business logic as stateful, deterministic processes. Let the LLM do what it does best – understand nuanced human language and conduct fluid conversation – while your Processes ensure your business rules are followed reliably.

Start using process calling today by building a CALM assistant: start coding if that’s your thing, or try Rasa’s no-code UI.