Teams building text- and voice-based virtual assistants encounter a unique set of challenges during the PoC phase. Those who are new to conversational AI find themselves navigating a new technology stack while attempting to prove out the business case for a chatbot. And teams who have built virtual assistants before often find themselves stuck in old patterns even as they try to evaluate new solutions.

This guide will zero in on best practices for building conversational AI PoCs, to help teams get maximum value from the exercise. At the end of the day, the goal of a proof of concept is to invest the fewest resources needed to validate an approach. We'll show you how to build a proof of concept that uncovers potential problems early, meets target timelines, and creates a solid foundation for further development.

What is a proof of concept?

A proof of concept is a low fidelity, simplified version of a virtual assistant. The purpose of a PoC is to learn as much as possible about a proposed approach, and ultimately, to determine whether or not to move forward. In some cases, the code delivered for a PoC may evolve into a more robust prototype, or minimum viable assistant (MVA). Other times, code written for a PoC may be used purely for discovery and later discarded.

There are a few characteristics that make a proof of concept different from other types of discovery exercises. Teams usually enter into a proof of concept with a fixed set of core requirements, and they work quickly, over a brief development cycle. The output of a PoC exercise is an AI assistant that demonstrates a small set of tasks. It can be demoed to stakeholders and even given to test users. Because PoCs are so tangible, they can be powerful tools for communicating a virtual assistant's potential to others inside the organization and to pilot customers.

The most successful PoCs expend just the amount of development resources needed to verify that requirements can be met: no more, no less.

Some PoCs go too light on development; they seem successful on the surface, but they're overly simple. In later phases of development, challenges come up that should have been anticipated. Other PoCs go far too deep, creeping up in scope and inflating project timelines. Without clear definition between the PoC phase and later development, teams lose the ability to make clear decisions about when and how to move forward.

Each section of this guide will focus on a distinct phase of the PoC development process. We'll start with gathering requirements, planning, and design. Then, we'll cover important considerations during active development. Finally, we'll explore PoC evaluation criteria, along with a list of questions every conversation team should ask.

Gathering requirements

Many teams are eager to dive directly into writing code, but taking time to formulate clear requirements prevents costly misalignment. In the requirements stage, two questions should be top of mind:

- Are we building the right thing for our business?

- Are we building the right thing for our customers?

To address the first, project scoping identifies desired business outcomes, as well as constraints. To address the second, user research helps teams understand users' problems and start to formulate a solution.

Business requirements

Most enterprise virtual assistants fall into one of two categories: customer-facing use cases or internal use cases. For customer-facing assistants, business outcomes target customer experience metrics like decreased wait time and NPS, or cost-savings metrics like containment rate and self-service adoption. Internal use cases allow employees to self-serve on HR or IT helpdesk requests, increasing operational efficiency. Some use cases bridge both categories. Agent assist, for example, uses artificial intelligence to help human support agents find answers to customer questions. Whatever the use case, it's important for conversation teams to align with business stakeholders on metrics that indicate success.

Constraints are another important business consideration at the earliest stages. Teams can explore the constraints around their project by asking questions such as:

- What is the project budget?

- What are the team's strengths and skill sets?

- Are there any industry regulations around privacy or data security that may impact the choice of AI platforms?

- Are there internal IT policies related to hosting and infrastructure that play a factor in selecting a technology stack?

- Is there a deadline for getting the assistant in production?

- Are there specific platforms or devices, e.g. Facebook Messenger, a smart speaker, or Android, that must be integrated?

- Will support for voice commands be required in future phases?

It's important to be clear about constraints before researching technology solutions. Limitations, particularly regulatory restrictions, can play a significant role in narrowing the field of suitable technologies.

User requirements

At this stage, the team should also devote time to understanding the problems faced by end users (and the benefits they expect users to gain). Deep user research is sometimes overshadowed by business requirements in the early stages, but it's critical to think about how customers will receive and use the virtual assistant.

Creating customer personas is a tried and tested method for understanding end-users' habits, priorities, and goals. Because virtual assistants engage users through natural language, it's particularly important to consider how customers talk, which devices or communication platforms they use, and the context in which they make contact.

Speaking directly with customers is the gold standard, but it isn't the only way to get information. If the virtual assistant is going to be embedded in a website or mobile app, teams can use telemetry tools like Hotjar to record user interactions and identify friction points that could be resolved by AI-powered assistants. Reviewing the search terms users type into a website can be another valuable source of data on what customers are asking. And of course, transcripts of conversations between customers and human support agents can provide rich insights. Lastly, teams should lean on the expertise of colleagues who are close to the end user, like customer success and sales staff. These individuals know the customer better than anyone in the organization and can provide guidance on what users really need.

Planning and design tips

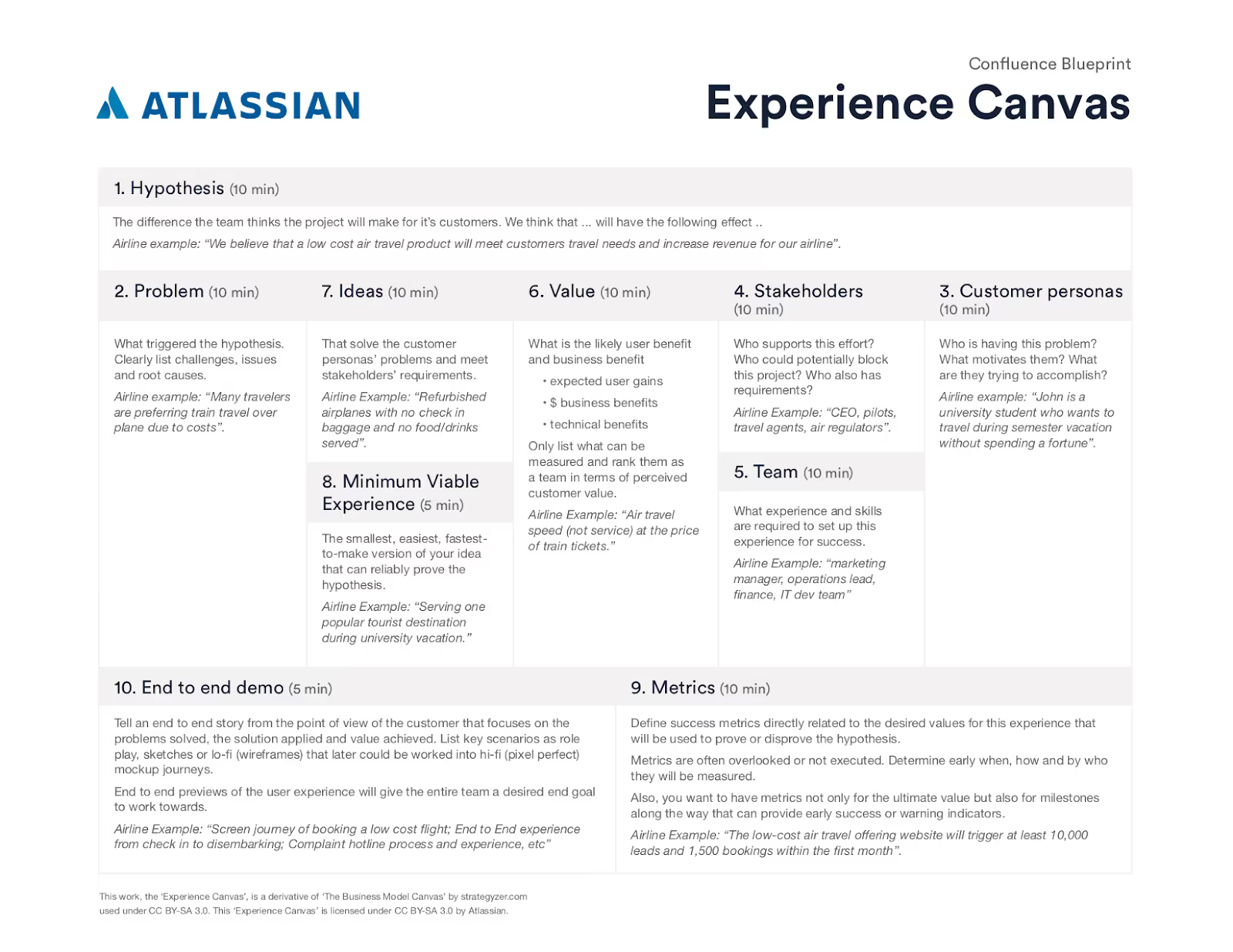

There are many frameworks designed to help teams generate ideas and turn requirements into something actionable. One of these frameworks is the Experience Canvas, an exercise developed by Atlassian (based on the Lean Canvas, developed by Ash Maurya).

An Experience Canvas is a guided activity that helps teams clearly define the problem they're trying to solve. Teams begin with a hypothesis about what they think the project can achieve. For example: "We believe that an intelligent virtual assistant will increase customer satisfaction and decrease strain on limited support resources." During the exercise, teams work through understanding the problem, who benefits from the solution, and what success looks like. Running an Experience Canvas requires a time commitment of a few hours and the participation of everyone on the team, but the end result is an artifact that the entire team can refer back to at any time to reorient around agreed-upon priorities.

The happy path

One outcome of an exercise like the Experience Canvas is describing the minimum experience that solves the customer's problem. Another term for this is the happy path: the most straightforward flow of operations that helps the user complete a task.

This is an important concept in building virtual assistants, because in the real world, there are many ways that a customer can stray from the happy path. Customers might phrase their request in an unusual way or ask about a topic that the assistant hasn't been trained to understand. Techniques like conversation-driven development and conversation design address these challenges as teams get further toward production, but during the PoC phase, teams should focus their attention on building out only happy paths, selecting 2-3 tasks that are central to the purpose of the assistant.

For example, a proof of concept for a consumer banking chatbot might address just 3 key conversation flows: 1) replacing a lost or stolen card, 2) paying a credit card balance, and 3) checking account balances. For the team designing these conversations, the happy path would be a scenario where the customer is providing all of the requested information, with no "surprises".

User: I need to pay off my credit card

Bot: Towards which credit card account do you want to make a payment?

User: OneCard

Bot: How much do you want to pay?

User: Pay off my minimum balance please

Bot: For which date would you like to schedule the payment?

User: Please schedule it for the first of next month

Bot: Would you like to schedule a payment of $85 (your minimum balance) towards your Gringots account for 12:00AM, Friday May 01, 2020?

[Yes] [No, cancel the transaction]

User: Yes

Bot: Payment of $85 (your minimum balance) towards your Gringots account scheduled to be paid at 12:00AM, Friday May 01, 2020

By designing just for the happy path during the PoC phase, conversation teams can move quickly, validating the most important use cases. As the project grows, support for unhappy paths should be prioritized by observing how test users talk to the assistant. In this way, teams can ensure that they're focusing time and development resources on things customers are actually inclined to do, rather than trying to build for an infinite number of possible paths.
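The credit card payment flow above can be thought of as collecting three pieces of information in a fixed order. The sketch below illustrates that idea in plain Python; it is not tied to any conversational AI platform. The slot names (`account`, `amount`, `date`), the helper functions, and the wording of the confirmation are assumptions made for illustration. Following the happy path assumption, every user reply is taken at face value as the answer to the question just asked.

```python
from typing import Dict, List, Optional

# Hypothetical slots for the "pay credit card" flow, in the order the bot asks for them.
PROMPTS: Dict[str, str] = {
    "account": "Towards which credit card account do you want to make a payment?",
    "amount": "How much do you want to pay?",
    "date": "For which date would you like to schedule the payment?",
}


def next_missing_slot(filled: Dict[str, str]) -> Optional[str]:
    """Return the first slot that has not been filled yet, or None if all are filled."""
    for slot in PROMPTS:
        if slot not in filled:
            return slot
    return None


def run_happy_path(replies: List[str]) -> List[str]:
    """Replay a scripted conversation where each reply answers the question just asked."""
    filled: Dict[str, str] = {}
    transcript: List[str] = []
    for reply in replies:
        slot = next_missing_slot(filled)
        if slot is None:
            break  # everything is collected; extra replies are ignored
        transcript.append(f"Bot: {PROMPTS[slot]}")
        transcript.append(f"User: {reply}")
        filled[slot] = reply  # happy path: no validation, no surprises
    if next_missing_slot(filled) is None:
        transcript.append(
            f"Bot: Schedule a payment of {filled['amount']} towards "
            f"{filled['account']} on {filled['date']}?"
        )
    return transcript


for line in run_happy_path(["OneCard", "my minimum balance", "the first of next month"]):
    print(line)
```

Anything outside this loop, such as an unrecognized account name or a change of topic, is an unhappy path and is deliberately left out of the sketch, in keeping with the PoC scope described above.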

Developing the proof of concept

The goal of the development phase is to produce a working assistant that, while simple, explores architecture, integration, and deployment needs in order to determine feasibility. Teams also need to consider strategies for working with training data and models, particularly as the AI assistant scales over the long-term.

Going too deeply into development during the PoC phase can waste resources, but it's also important to test all of the critical "moving parts" of the virtual assistant. PoCs often include basic database operations, integration with other systems or APIs, and sometimes authentication or speech recognition services. If it's a must-have feature for the success of the virtual assistant, it should at least be explored in the proof of concept.

One early hurdle in the development phase is sourcing training data. Virtual assistants require lists of example user messages, grouped together into intents, in order to train the machine learning model. The best natural language processing (NLP) performance is achieved when the example messages used to train the model are very close in structure and substance to what users actually say.

If a team has access to support transcripts, they can be a great starting point for building up a realistic data set. If not, manually writing out 10-15 training examples per intent is sufficient for a PoC. Although many more training examples per intent will be required for a robust production assistant, a more robust data set can be built up gradually over time. Rasa Enterprise is a tool that allows teams to build up a data set and test the assistant in parallel, sourcing new training examples directly from conversations between test users and the assistant.
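As a rough illustration of the 10-15 examples per intent guideline, the sketch below represents training data as a plain Python dictionary that maps intent names to example messages, and reports which intents still fall short. The dictionary layout, the intent names, and the `intents_needing_examples` helper are assumptions for illustration only; they are not the storage format of any particular platform.

```python
from typing import Dict, List

MIN_EXAMPLES_PER_INTENT = 10  # lower bound of the 10-15 range suggested for a PoC


def intents_needing_examples(
    training_data: Dict[str, List[str]],
    minimum: int = MIN_EXAMPLES_PER_INTENT,
) -> Dict[str, int]:
    """Map each under-populated intent to the number of examples still missing."""
    shortfalls: Dict[str, int] = {}
    for intent, examples in training_data.items():
        # Count distinct messages only; case and surrounding whitespace are ignored.
        unique = {e.strip().lower() for e in examples if e.strip()}
        if len(unique) < minimum:
            shortfalls[intent] = minimum - len(unique)
    return shortfalls


# Hypothetical data set: one intent is still thin, the other uses placeholder text.
training_data = {
    "pay_credit_card": [
        "I need to pay off my credit card",
        "Pay off my minimum balance please",
        "i need to pay off my credit card",  # duplicate after normalization
    ],
    "check_balance": [f"placeholder example {i}" for i in range(12)],
}

print(intents_needing_examples(training_data))  # {'pay_credit_card': 8}
```

A check like this is only a count. It says nothing about whether the examples resemble what users actually say, which is the more important property.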

Development checklist

- Build happy path conversation flows for 2-3 important tasks

- Create intents with 10-15 example messages per intent

- Test major integration requirements with APIs, databases, authentication, and messaging channels

- Identify areas where the technology stack may need to be customized, and ensure the conversational AI platform provides necessary access and flexibility

- Evaluate data security and privacy needs, and ensure the AI virtual assistant is in compliance

- Deploy the proof of concept in an environment similar to the production hosting environment

- Establish a preliminary workflow for pushing and testing changes in staging and production environments

Avoiding common development pitfalls

Set a time limit. It's important to timebox a proof of concept in order to set a clearly defined boundary around the required work. Some PoCs can be completed within a single 2-week sprint; others may take several. Generally speaking, a PoC that takes longer than 3 months has lost momentum.

Carve out time for team training. Another common pitfall is diving directly into development without proper training in the technology stack. It can be tempting to try to learn a new conversational AI platform on the fly, especially for those with experience in machine learning. However, lack of training can greatly slow a project down or even result in wasted development cycles. Rasa offers documentation and training courses to help team members become proficient with the conversational AI platform quickly.

Look for an open source starting point. Teams can save considerable development time by using a pre-built AI assistant as a starting point instead of beginning from scratch. Rasa offers starter pack assistants in domains like financial services, retail, insurance, and IT helpdesk that anyone can use to start building a chatbot or voice assistant. All starter pack assistants are open source and free to download.

Testing

It may sound surprising, but rigorously testing a proof of concept is often overlooked.

After development concludes, testing with end-users tends to take a back seat to demoing the PoC to stakeholders. While demos are an important deliverable, they're tightly controlled, and don't typically uncover any new learnings about user experience. Including even a brief period of usability testing in the PoC phase should be considered an essential step.

However, testing doesn't necessarily mean putting the assistant in the hands of customers, at least not yet. Instead, volunteers recruited from within the organization can provide a fresh perspective on user experience. The most important thing is making sure that test users weren't involved in designing or developing the assistant. This ensures testers don't have prior knowledge of the conversation patterns the assistant has been trained to handle, making them more likely to push the assistant's boundaries (just like real users do).

Testers should be briefed on the assistant's capabilities (and expectations should be set that the assistant is still in early development). Testers should be encouraged to speak naturally to the virtual assistant and even try to push the boundaries of what it can handle. It's normal for the assistant to make a few mistakes; it is a proof of concept, after all. Each mistake is an opportunity to learn more about user behavior and improve the assistant's performance.

During testing, Rasa Enterprise can be used both to share the assistant and to analyze conversations. Messages from test users can even be labeled with intents, turning them directly into training data. In this way, teams can create a more robust assistant, even in the PoC phase. For more tips, see Usability Testing for AI Assistants.
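The idea of turning labeled test messages into training data can be sketched independently of any tool. The example below assumes that reviewed messages are available as (message, intent) pairs and that training data is a dictionary of intent names to example lists; both the data shapes and the `add_labeled_messages` helper are hypothetical and do not represent the Rasa Enterprise interface.

```python
from typing import Dict, Iterable, List, Tuple


def add_labeled_messages(
    training_data: Dict[str, List[str]],
    labeled: Iterable[Tuple[str, str]],
) -> int:
    """Append (message, intent) pairs to training_data in place; return how many were new."""
    added = 0
    for message, intent in labeled:
        text = message.strip()
        if not text:
            continue  # skip empty messages
        examples = training_data.setdefault(intent, [])
        # Skip messages that already exist for this intent, ignoring case.
        if text.lower() not in (e.lower() for e in examples):
            examples.append(text)
            added += 1
    return added


# Hypothetical messages from a round of internal testing, labeled by a reviewer.
training_data = {"pay_credit_card": ["I need to pay off my credit card"]}
reviewed = [
    ("can i pay my card bill", "pay_credit_card"),
    ("I need to pay off my credit card", "pay_credit_card"),  # already present
    ("my card was stolen", "replace_card"),
]

print(add_labeled_messages(training_data, reviewed))  # 2
```

Because the examples come from real test conversations, each round of testing moves the data set closer to how users actually phrase their requests.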

Success criteria

A proof of concept isn't just the first stage of building a virtual assistant; it's a search for an answer. A proof of concept can't be considered successful unless it provides the information needed to decide whether to invest in further development.

Below is a set of questions teams can ask to evaluate a proof of concept and arrive at a definitive answer.

- Does the conversational AI platform permit customization? Yes ☐ No ☐

- Does the conversational AI platform comply with privacy and data storage regulations? Yes ☐ No ☐

- Is the natural language processing robust enough to support required languages and domain-specific terms?* Yes ☐ No ☐

- Is it possible to integrate with necessary messaging platforms, data sources, and other systems? Yes ☐ No ☐

- Does the organization need technical support within an agreed-upon timeframe? If so, can support be contracted? Yes ☐ No ☐

- Can the technology stack support scaling in terms of future use cases and traffic? Yes ☐ No ☐

- Are conversation flows easy to create, reason about, and maintain? Yes ☐ No ☐

- Does the conversational AI platform offer visibility into model performance, training data errors, and usage metrics? Yes ☐ No ☐

- Is there a way to study user experience and improve the assistant over time? Yes ☐ No ☐

Conclusion

While a proof of concept is only the beginning of developing a virtual assistant, the discovery and choices made in the PoC phase can make or break the project. A successful PoC starts with user research, incorporates key business requirements, and validates the design by putting the assistant in the hands of testers at the earliest stages.

At the end of the PoC phase, teams should have a clear line of sight on building a virtual assistant that can help customers resolve problems, as well as the tools needed to do it. And if energy has been put into building a watertight proof of concept, teams won't be caught off guard by obstacles that can slow down development as the project nears production.

Continue your development journey with Rasa's whitepaper: PoC to Production. PoC to Production is a guide to overcoming challenges many enterprises face as they scale a virtual assistant from a simple proof of concept to a robust assistant running in production.