TL;DR:LLM-powered chatbots have transformed what’s possible in enterprise AI assistants, but success depends on more than just plugging in a model. Scalable, safe, and effective assistants need the right architecture—combining orchestration, grounding, memory, and fallback systems. This blog breaks down how LLM chatbots work, common deployment patterns, and why hybrid architectures outperform intent-based design alone. It also explores key engineering considerations and shows how Rasa gives teams full control to deploy LLMs responsibly, with the flexibility to adapt and scale.

Large language models (LLMs) have changed what people expect from enterprise chatbots and virtual assistants. Instead of rigid, scripted interactions, users now expect natural dialogue that adapts to their intent. But as impressive as LLMs are, plugging one into an assistant through an API doesn’t guarantee success.

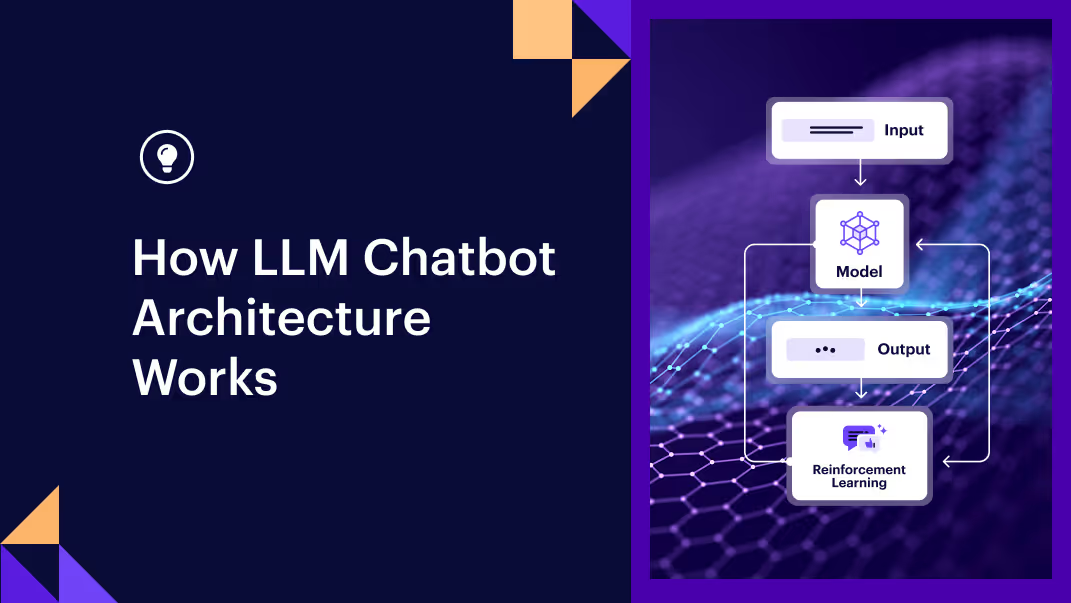

To work reliably in real-world settings, an LLM-powered assistant needs the right chatbot architecture. That means having systems in place for orchestration, memory, grounding, and fallback so responses stay accurate, safe, and scalable. Architecture also shapes how well your assistant balances speed with cost and how easily it can grow alongside new use cases or business demands.

Here, we break down what an LLM-powered assistant is, the components that make up its architecture, and how you can design systems that scale with confidence. You’ll also learn why traditional intent-based design struggles to keep up and how newer approaches make assistants smarter and safer.

What is an LLM chatbot?

An LLM chatbot is an assistant powered by a large language model designed to generate or guide responses based on a user’s input. Unlike traditional rule-based or intent-based systems that rely on predefined commands, an LLM chatbot can understand the nuance of natural language and answer questions more naturally.

Think of it this way: a traditional assistant is like a customer service menu. If you say, “reset my password,” it knows where to take you. But if you go off script and say, “I locked myself out after trying too many times,” it may get confused because that phrasing wasn’t mapped to an intent.

An LLM chatbot, by contrast, can handle the unexpected phrasing or new vocabulary without needing to be explicitly programmed for each scenario.

That flexibility is what makes LLM chatbots so powerful, but it’s also why architecture matters. Without the proper guardrails, they can generate irrelevant answers, expose sensitive data, or become unreliable as use cases grow. The real value comes when you pair LLMs’ language abilities with a design that ensures control, compliance, and scalability.

Core components of LLM chatbot architecture

If you’ve ever built a traditional assistant, you know the design often feels like a flowchart: intent here, rule there, then a fixed outcome. LLM-powered assistants don’t work that way. They need a modular architecture with clear functionality that can be mixed, extended, or replaced, depending on enterprise needs.

Orchestration layer

At the core is the orchestration layer. It’s your assistant’s conductor, deciding what to do with each user query. Sometimes the right move is to call a database or an API to fetch the correct information. Other times, it makes sense to let the LLM generate a natural response.

The orchestration layer handles coordination between deterministic logic (hard-coded rules for predictable tasks) and generative output from the LLM.

Imagine a customer asking about their order status. The orchestration layer might first route the query through a database check to pull the exact delivery date. Once that’s done, the LLM steps in to phrase the answer in a conversational tone: “Your package is on the way and should arrive tomorrow.”

This blend ensures that your assistant is accurate and approachable. It’s what prevents enterprise chatbots from sounding like cold ticketing systems and instead makes them feel like capable, human-like partners.

Retrieval and grounding mechanisms

While LLMs are exceptional at generating text, they aren’t always reliable when it comes to facts. They can hallucinate, producing answers that sound confident but are wrong.

Retrieval and grounding mechanisms solve this by supplying relevant information from trusted datasets or vector stores. Using retrieval augmented generation (RAG) or frameworks like LangChain, your assistant can pull knowledge from a knowledge base, apply semantic search, and then phrase answers in natural language.

For instance, if an employee asks about the latest HR policy, the retrieval layer pulls the official document, and the LLM creates a natural explanation around it.

Grounding reduces hallucinations, improves compliance, and builds trust across industries from healthcare to finance. This grounding layer can also guide how you choose enterprise chatbot platform capabilities that align with compliance, performance, and integration requirements.

Memory and session state

A good AI chatbot doesn’t just respond, but remembers. Without memory, every interaction would feel like starting from scratch, forcing users to repeat details or re-explain their intent. Memory and session state let the assistant carry context across turns, keeping the conversation flowing naturally.

This context can be short-term (like recalling what was said earlier in the same chat) or long-term (like storing a user’s preferences, past purchases, or role within the company). Both matter: Session memory makes dialogue coherent, while persistent profiles allow for personalization that feels genuinely useful.

With well-designed memory, your LLM chatbot evolves from a reactive tool into a proactive assistant. It can suggest next steps, tailor responses to the individual, and handle multi-step tasks without losing sight of the bigger picture.

Fallback and escalation logic

Even with all the right structure, things can still go off track. That’s why assistants need fallback and escalation logic. When the system isn’t confident about a response, it can switch to a scripted path, ask clarifying questions, or hand the conversation over to a human agent.

Modern chatbot development tools make it easier to build this safety net into the design, ensuring interactions stay smooth even when the model reaches its limits. For example, in customer support, the assistant might first guide the user through a known troubleshooting flow. If that doesn’t resolve the issue, the assistant can seamlessly escalate to a live agent, passing along the conversation history so the user doesn’t have to start over.

Common LLM deployment patterns in enterprise assistants

With more than 67% of organizations already weaving large language models into their operations, the focus has shifted from if to how. Once the core components are in place, the question becomes: what role should the LLM actually play inside the assistant? Some of the most common deployment patterns include:

- LLM as a response generator: Replaces canned replies with dynamic, natural responses tailored to user input

- LLM as a reasoning engine: Powers decision-making or classification, like routing queries or prioritizing tasks

- LLM as a fallback mechanism: Handles unexpected user inputs that scripted systems can’t manage

- LLM with a retrieval pipeline: Pulls from internal knowledge bases to answer questions with precision and context

- LLM as a rephrasing or safety filter: Cleans up outputs or enforces compliance before messages reach the user

- LLM as a tool orchestrator: Decides when to call APIs or external services, runs those tools, and composes their results into a single coherent reply.

These patterns aren’t mutually exclusive. Many enterprise assistants use a mix, starting with narrow applications of the LLM and gradually layering in more responsibility as guardrails mature. The right choice depends on use case, risk tolerance, and the level of control you require.

Why traditional intent-based design doesn’t scale with LLMs

Back in the day, assistants were almost entirely intent-based. You’d define intents like ‘check_balance,’ ‘reset_password,’ or ‘book_flight,’ then map each to a scripted reply or workflow. If someone typed “What’s my balance?” the system could match it to check_balance and respond correctly.

The trouble came when users phrased the same request differently, like “How much money do I have left?” or “Show me what’s in my account.” Unless those variations were explicitly trained, the assistant would stumble.

As use cases grew, so did complexity. Teams ended up juggling endless lists of intents, synonyms, and training phrases just to keep things functional. It was like building a house of cards: the bigger it got, the harder it was to prevent it from collapsing.

With advancements in generative AI and machine learning, LLMs can interpret a wide range of inputs while still understanding the user’s goal, thereby reducing manual training and enabling faster scaling.

That said, without proper structure, LLMs can go off script, make up facts, or generate responses that don’t meet compliance standards. This is why hybrid architectures, blending LLMs with deterministic controls, are critical for enterprises.

How hybrid architecture gives you the best of both worlds

A hybrid architecture lets you combine deterministic rules with the generative power of LLMs. You don’t have to choose between rigid intent-based design or unpredictable model outputs; you can use each approach where it makes the most sense.

For example, if a customer wants to reset their password, your assistant doesn’t need creativity. Rather, a rule-based flow ensures the process is fast, secure, and correct every time. But if the customer says something less structured, like “I’m having trouble accessing my account, what should I do?” the LLM can step in to interpret their request and guide the conversation naturally.

This balance has several advantages:

- Scalability without scripting every path: You can expand coverage without manually writing responses for every possible query.

- Compliance and safety via grounding and rephrasing: Deterministic rules keep sensitive processes and outputs under control.

- Flexibility for complex user journeys: Your assistant can switch fluidly between rules and generative dialogue, depending on the situation.

Holding it all together is the orchestration layer. It acts as the backbone of your hybrid approach, deciding when to call on rules, when to use the LLM, and how to blend the two into a seamless experience.

Engineering considerations for building LLM-powered assistants

Once your architecture is in place, the real challenge is making it work at scale. For your engineering team, that means balancing speed, cost, and reliability while managing the quirks of LLMs. Here are the key considerations that shape successful deployments:

Latency and performance optimization

LLMs can introduce latency. In a real-time conversation, even a few seconds of lag can feel like forever to your users.

You can reduce response times by:

- Caching frequent replies

- Compressing prompts to cut processing time

- Routing simpler tasks to smaller, distilled models that don’t need as much compute

Done right, these strategies keep your conversations fluid without sacrificing accuracy.

Cost management and usage controls

Every LLM has a cost, and at scale, those charges can add up fast. To keep your assistant efficient, route only queries that need generative reasoning to the LLM, while rules or lightweight models handle the rest.

Filters and fallback logic help prevent unnecessary generations, letting your assistant stay smart without spiraling expenses.

Token limits and input formatting

LLMs have token limits, which cap how much context they can process at once. If a conversation gets long or the prompt is overloaded, key details may be lost.

Design your prompts carefully:

- Trim inputs

- Format dialogue efficiently

- Decide what context is essential at each stage

Sometimes, carrying just a summary of past turns is enough instead of the full transcript.

Monitoring, evaluation, and iteration

Building an LLM-powered assistant isn’t a one-and-done effort. Monitor performance continuously through analytics, testing, and user feedback.

Human-in-the-loop review helps catch subtle issues the model might miss, while structured evaluation identifies where responses drift. Iterating on these insights ensures that your assistant continues to improve and remains reliable as use cases evolve.

What is Rasa’s approach to LLM-first architecture?

Rasa gives you the freedom to use large language models without locking your team into a single vendor or workflow. The Rasa platform is LLM-agnostic, so you can plug in the model of your choice, whether OpenAI, Anthropic, Hugging Face, or a self-hosted Docker option, while keeping complete control over how it operates inside your assistant.

Several features make this flexibility practical for enterprises:

- Orchestration control: Decide when to route a query to an LLM, when to use deterministic logic, or when to escalate.

- Safe fallback with CALM: Handle low-confidence outputs gracefully through scripted chatbot flows or hand-offs.

- Grounding and rephrasing options: Keep responses accurate by pulling in trusted data sources or reformatting LLM output for clarity and compliance.

- Conversation repair: Manage digressions, topic changes, and unexpected utterances without breaking the flow.

- Custom pipelines and modular design: Extend or swap components to match your infrastructure, scaling needs, or regulatory requirements.

- No-code UI: Empower non-technical teams to configure, test, and refine assistants without writing code.

- Flexible architecture: Beyond being a black-box, open-source, and customizable solution, Rasa provides a flexible architecture that you can adapt, extend, and control to fit your unique needs.

- On-prem installation: Rasa supports full on-prem deployment, unlike other conversational AI platforms. This is critical for highly regulated industries like financial services, government, and telecommunications, where meeting regulatory standards and ensuring data compliance are non-negotiable.

With Rasa, you can experiment with LLMs while keeping the guardrails you need for safety, compliance, and transparency.

Learn why architecture is the difference between hype and real value

LLMs are powerful, but they’re only as good as the architecture behind them. With strong orchestration, grounded responses, and smart fallbacks, you can turn a simple chatbot into a reliable, enterprise-grade assistant. Without that structure, even the most advanced model risks going off-topic, breaking compliance, or failing to scale.

The real value comes from thoughtful design. Your assistant needs to sound natural while staying safe, adaptable, and trustworthy as your use cases grow.

That’s why architecture matters just as much as the model itself: it’s the difference between short-lived hype and long-term impact. With Rasa, you get LLM-agnostic flexibility, safe automation through CALM, rephrased and grounded outputs, and a no-code UI that empowers your team without adding complexity.

If you’re ready to build an assistant that balances flexibility with control, connect with the Rasa team to see how an LLM-first approach can work for you.