Building AI assistants that handle complex interactions at scale while maintaining low latency, high performance, and cost efficiency is a significant challenge. While models like GPT-4 deliver strong performance, they come with trade-offs: high costs, rate limitations, and latency issues. These challenges are problematic in real-time scenarios, such as voice-based assistants, where every millisecond counts. But what if you could fine-tune smaller models that balance speed, performance, and cost?

Did you know: Amazon found that every 100ms of latency cost them 1% in sales? Response time directly impacts user experience quality in real-time interactions, especially in voice-based systems.

Cut Latency and Costs by Scaling with Smaller Models

Our previous work demonstrated how CALM's structured approach to building conversational AI excels in latency, scalability, and cost-efficiency (compared to more unstructured, ReAct-style agentic systems). However, scaling assistants for real-time use cases-such as voice interactions-introduces new challenges, especially when near-instantaneous responses are required.

For these real-time use cases, maintaining performance without relying on large, expensive LLMs like GPT-4 is essential. That’s why we’ve introduced a new feature that enables users to fine-tune smaller language models (~8B parameters, such as Llama 3.1 8B!). This shift offers substantial benefits in terms of latency, cost, and control.

Why Smaller Models?

Our customers consistently find that smaller models allow for faster response times and reduced reliance on external APIs, giving them more control over their conversational AI systems. By deploying these models through platforms like Hugging Face or within their own infrastructure, they’ve been able to mitigate rate limitations, reduce API costs, and take charge of their models’ deployment and performance. This enables them to streamline operations, improve latency, and ensure their assistants perform efficiently, even at scale.

The Business Case for Fine-Tuning

Faster Models with No Loss in Accuracy

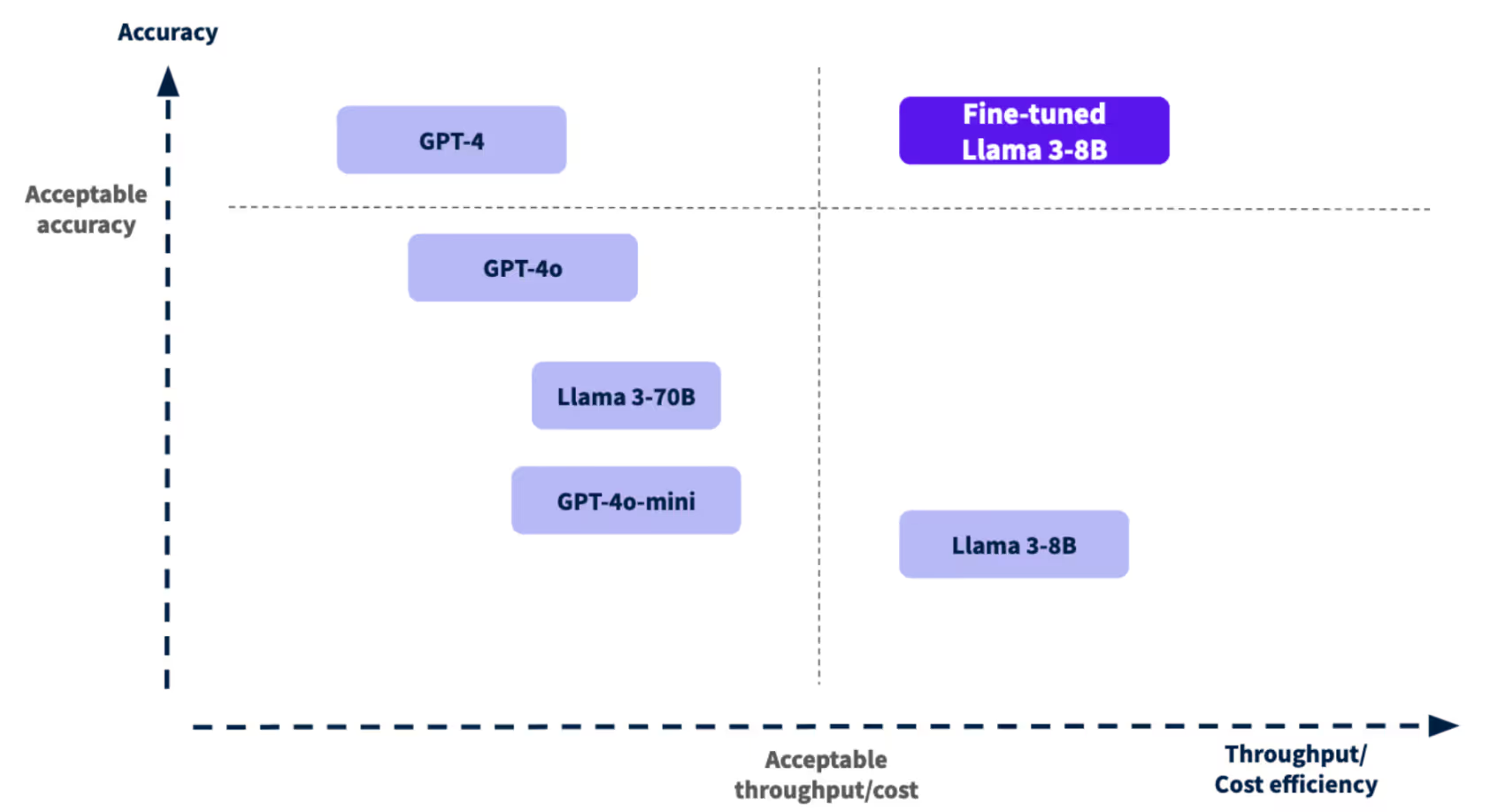

Caption: The figure shows a trade-off between model accuracy and cost efficiency. The Y-axis represents accuracy, with higher values indicating better performance, while the X-axis shows throughput and cost efficiency. GPT-4 is highly accurate but less efficient for high-demand applications. In contrast, the fine-tuned Llama-3-8B strikes a balance, offering strong accuracy with better cost efficiency. The figure highlights that smaller models like Llama-3-8B can provide sufficient accuracy while being more economical.

Fine-tuning smaller models makes them respond faster, which is exactly what you need for voice assistants and busy environments where every second counts. To test this, we compared the following systems' latency performance.

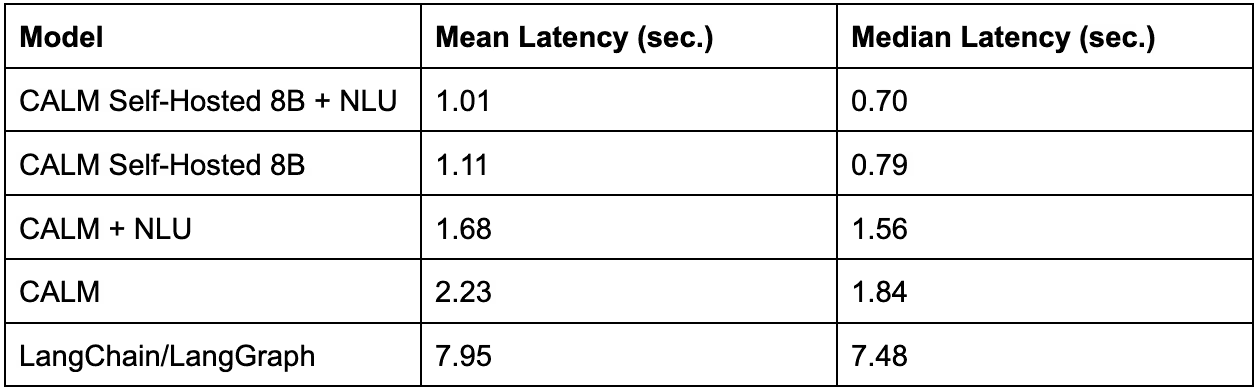

The table below shows the mean and median latencies for various models, listed in ascending order for easier comparison (see the calculations in this notebook):

While powerful, models like GPT-4 often struggle to meet the low-latency demands of real-time applications, such as phone-based voice assistants. Even when integrated with CALM, these larger models can be too slow to provide a seamless user experience. In contrast, CALM’s self-hosted models consistently outperform larger counterparts, especially when integrated with fine-tuned NLU components, making them the ideal choice for fast, reliable, and scalable solutions.

Also, there is no known way to make the Langchain/LangGraph approach work with an 8B model.

Caption: Box plots comparing CALM’s performance across four configurations: CALM LLM, CALM NLU, CALM NLU self-hosted, and CALM self-hosted. The plots illustrate key metrics such as the number of output tokens, number of input tokens, and latencies (seconds). The boxes display the median, interquartile ranges, and whiskers showing variability, while individual data points are plotted to show the distribution. Shorter latencies and optimized token usage highlight the superior performance of CALM’s self-hosted models. For a deeper dive into these metrics and comparisons, check out the methodology in the rest of the post.

Cost Efficiency

Using off the shelf smaller models reduces per token cost. Also, deploying them yourself (for example on Hugging Face inference endpoints or your own infrastructure) allows you to shift from a token based pricing to a per hour pricing for hosting. We have found the latter to be much cheaper when processing conversations at scale. Here’s a quick back-of-the-envelope calculation to illustrate this:

- Assuming your conversational assistant processes 40,000 conversations monthly with 10 concurrent sessions at peak and 3 user turns per conversation on average.

- Running a CALM assistant with GPT-4 as the LLM can result in a total cost of $7,700 for the LLM usage alone in that month.

- Whereas running the same assistant with a self-hosted fine-tuned Llama 3.1 8b model on an A100 GPU can cost somewhere between 2400 - 4800 USD for that month.

The gap widens further as your assistant processes more conversations monthly. Hence, fine-tuning and self-hosting your own model can reduce operational expenses and have more predictable costs compared to per-API call fees.

While there are no per-API call fees for self-hosted models, you do need to factor in the cost of overhead for the infrastructure to maintain your models.

Security, Privacy, and Reliability

Enterprises with strict privacy requirements can deploy these models on their infrastructure-whether on a private cloud or an on-premise setup. This ensures sensitive data stays within the organization's control, a critical concern for industries like healthcare and finance. Self-hosting offers full control over the model’s environment and security protocols, but the models can also be run via Hugging Face or other cloud services for more flexible deployment.

You also need your production models to be reliable and consistently available. Unfortunately, vendor APIs often deprecate models, leading to unexpected downtime and costly migrations. See these pages from Azure and OpenAI for examples of their deprecated models. By opting for self-hosted models, you gain full control over versioning and updates, ensuring your models remain stable and available on your terms.

Unlocking Performance: Fine-Tuning and Command Generation

What fine-tuning offers:

- Custom Performance: Fine-tuning allows smaller models to perform similarly to larger LLMs for specific tasks, making them both cost-effective and high-performing.

- Scalability: The need to efficiently scale increases as your assistant grows. Fine-tuned smaller models are easier to scale and manage, especially in complex environments with multiple flows.

The Command Generator: A Key Component for Efficiency

CALM’s success with smaller models comes from the Command Generator. Rather than relying on the LLM for every conversational task, the Command Generator translates what users are saying into instructions to progress the conversation.

Without this, fine-tuning an LLM would require retraining the model on every piece of business logic, making it operationally prohibitive. CALM’s architecture, combined with the Command Generator, solves this problem by allowing businesses to fine-tune smaller models for specific tasks without overburdening them.

Practical Steps: Fine-Tuning and Deployment Options

Fine-tuning doesn’t have to be complicated. Here’s an outline of the process:

- Prepare Your Dataset: Start by writing sample conversations that can be augmented by synthetic data using the features available.

- Fine-Tuning: With our fine-tuning recipe, the process is semi-automated. After preparing your data, you can fine-tune a smaller LLM on your chosen platform (e.g., AWS, GCP, Hugging Face, or locally).

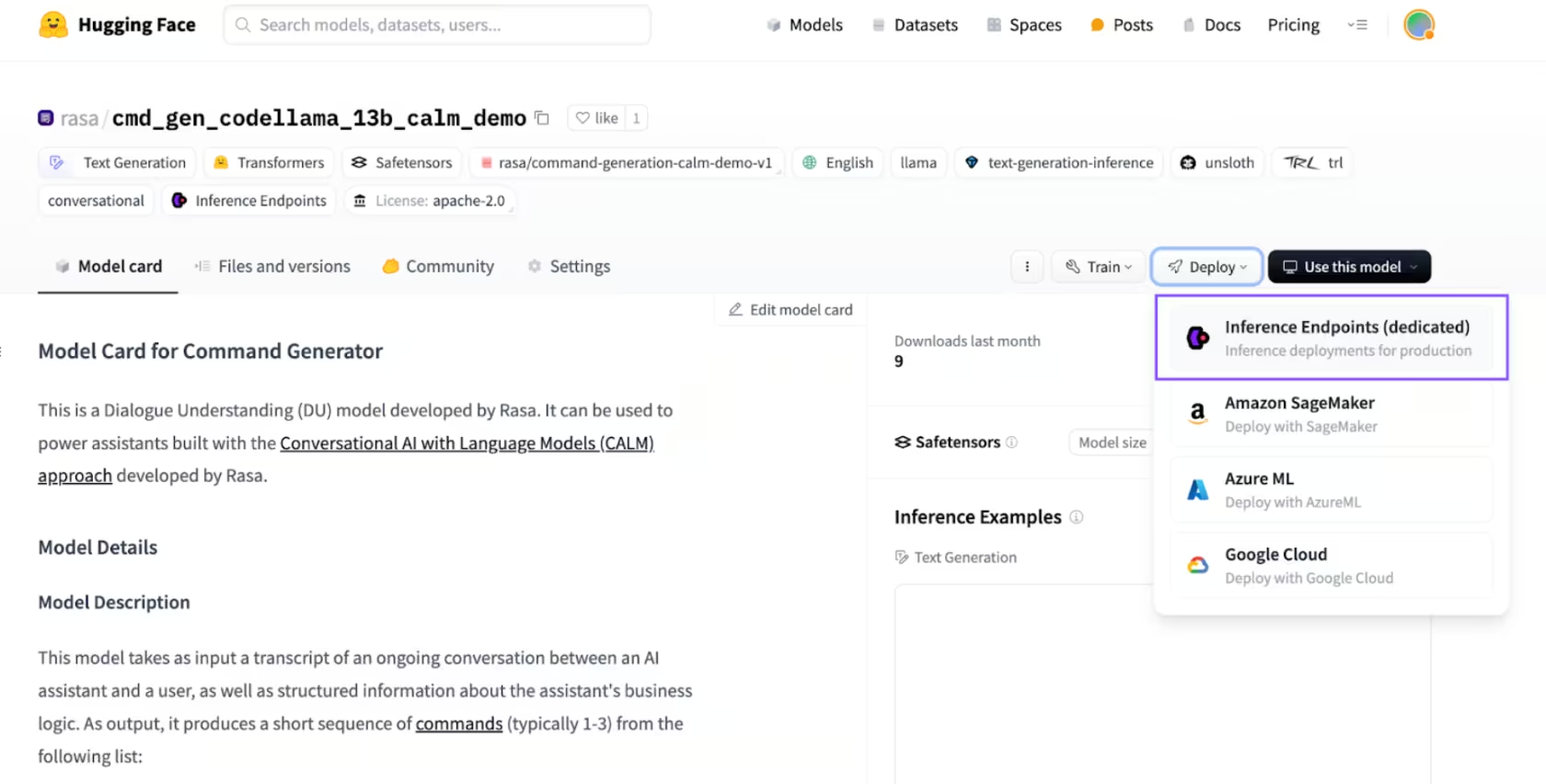

- Deploy the Model: Once fine-tuned, you can choose how to deploy the model-whether through Hugging Face endpoints, self-hosting on a private cloud, or using on-prem infrastructure. Each option offers different advantages in terms of control, cost, and security.

Open-Source Resources: Instruction Tuning Dataset and Baseline Models

To support the community, we’ve open-sourced our instruction tuning dataset on Hugging Face. There are two baseline models: fine-tuned CodeLlama 13B and fine-tuned Llama 3.1 8B. These resources provide a great starting point for developers interested in instruction tuning and building robust, efficient models.

Why These Models?

- CodeLlama 13B: Known for its superior generalization, making it ideal for a range of tasks and fine-tuning applications.

- Llama 3.1 8B: A lighter, faster option that still delivers strong performance, particularly in real-time use cases.

Both these fine-tuned models can be deployed on HuggingFace Inference Endpoints with a single click:

Ready to Fine-Tune Your Own Assistant?

To get started, dive into our open-source instruction tuning dataset our baseline models on Hugging Face and fine-tuning recipe’s documentation.