Note! In Rasa 3.0 we've made a change to the machine learning backend of Rasa Open Source. In some ways, this is but a mere implementation detail. The reasoning in this blogpost did not change. But it helps to be aware that the computational framework during training no longer resembles a linear sequence of steps. As of Rasa 3.0, it represents a directed graph. You can read this blogpost if you'd like to learn more.

The NLU Pipeline

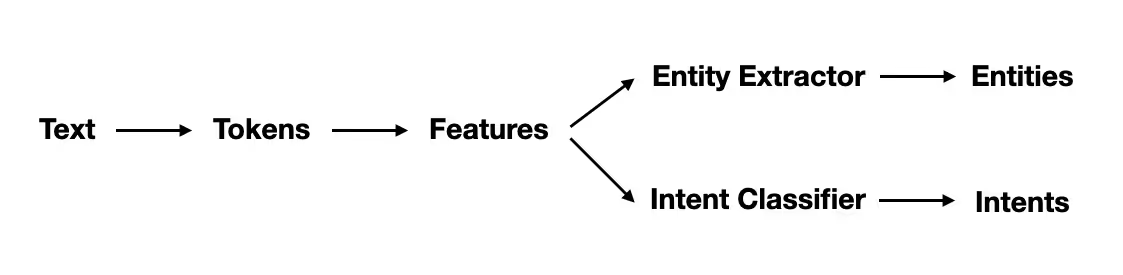

The NLU pipeline is defined in the `config.yml` file in Rasa. This file describes all the steps in the pipeline that will be used by Rasa to detect intents and entities. It starts with text as input and it keeps parsing until it has entities and intents as output.

There are different types of components that you can expect to find in a pipeline. The main ones are:

- Tokenizers

- Featurizers

- Intent Classifiers

- Entity Extractors

We'll discuss what each of these types of components do before discussing how they interact with each other.

Components

1. Tokenizers

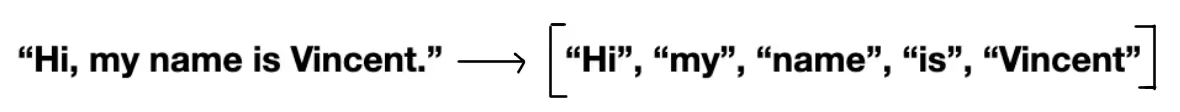

The first step is to split an utterance into smaller chunks of text, known as tokens. This must happen before text is featurized for machine learning, which is why you'll usually have a tokenizer listed first at the start of a pipeline.

Details on Tokenizers.

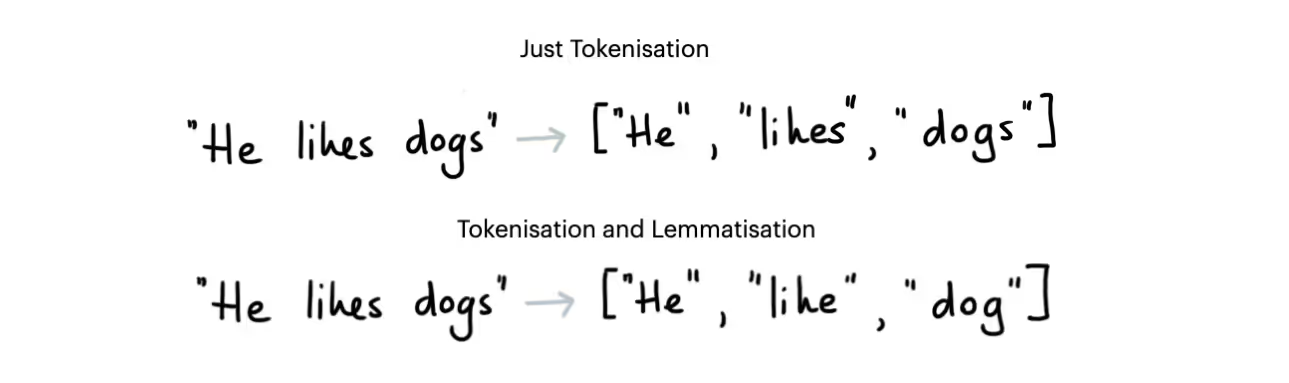

Some tokenizers also add extra information to the tokens. For example, spaCy is able to also generate lemmas of the tokens which can later be used by the CountVectorizer.

The tokenizer splits each individual word in the utterance into a separate token, and commonly the output of the tokenizer is a list of words. We might also get separate tokens for punctuation depending on the tokenizer and the settings that we pass through.

For English, we usually use the WhiteSpaceTokenizer but for non-English it can be common to pick other ones. SpaCy is a good choice for non-English European languages but Rasa also supports Jieba for Chinese.

Note that tokenizers don't change the underlying text, they only separate text into tokens. That means, for example, that capitalisation remains untouched. It might be that you'd like to only encode the lower case text for your pipeline, but adding this kind of information is the job of a featurizer, which we'll discuss next.

2. Featurizers

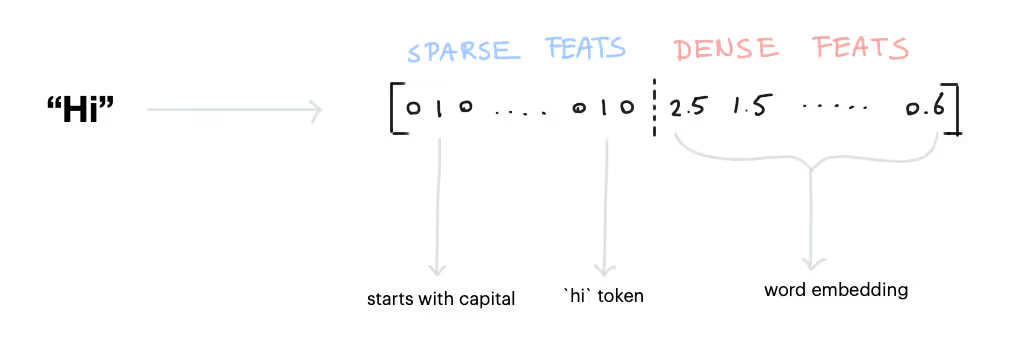

Featurizers generate numeric features for machine learning models. The diagram below shows how the word "Hi" might be encoded.

There are two types of features:

- Sparse Features: usually generated by a CountVectorizer. Note that these counts may represent subwords as well. We also have a LexicalSyntacticFeaturizer that generates window-based features useful for entity recognition. When combined with spaCy, the LecticalSyntacticFeaturizer can be configured to also include part of speech features.

- Dense Features: these consist of many pre-trained embeddings. Commonly from SpaCyFeaturizers or from huggingface via LanguageModelFeaturizers. If you want these to work, you should also include an appropriate tokenizer in your pipeline. More details are in the documentation.

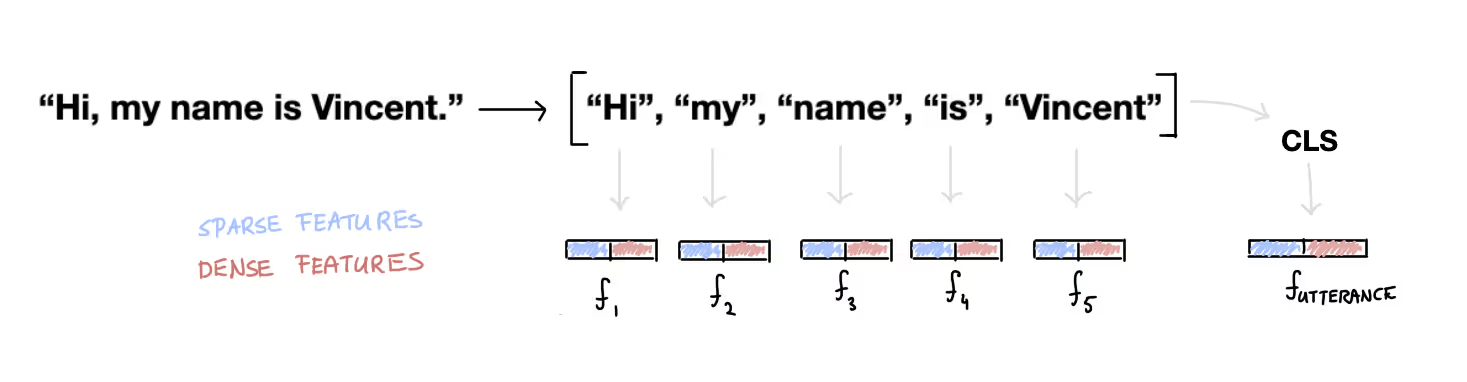

Besides features for tokens, we also generate features for the entire sentence. This is sometimes also referred to as the CLS token.

Details on sentence features.

The sparse features in this CLS token are a sum of all the sparse features in the tokens. The dense features are either a pooled sum/mean of word vectors (in the case of spaCy) or a contextualized representation of the entire text (in the case of huggingface models).

Note that you're completely free to add your own components with custom featurization tools. As an example, there's a community-maintained project called rasa-nlu-examples that has many experimental featurizers for non-English languages. It's not officially supported by Rasa, but can be of help to many users as there are over 275 languages represented.

3. Intent Classifiers

Once we've generated features for all of the tokens and for the entire sentence, we can pass it to an intent classification model. We recommend using Rasa's DIET model which can handle both intent classification as well as entity extraction. It is also able to learn from both the token- as well as sentence features.

Details on DIET.

We should appreciate that the DIET algorithm is special. Most of the algorithms that Rasa hosted in the past did either entity detection or intent classification but they didn't don't do both. That often also meant that intent classification models only looked at the sentence features of the pipeline and ignored the token features.

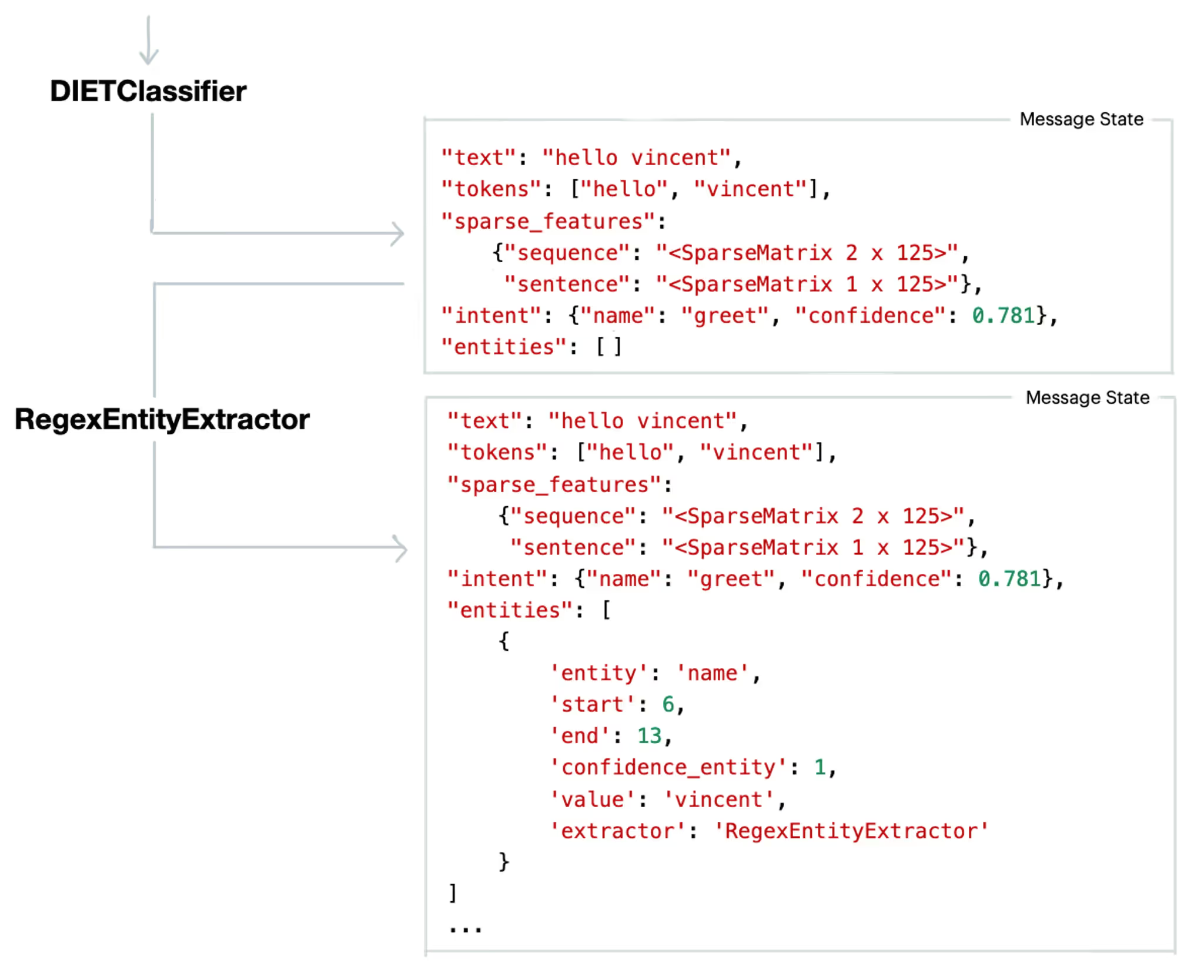

4. Entity Extraction

Even though DIET is capable of learning how to detect entities, we don't necessarily recommend using it for every type of entity out there. For example, entities that follow a structured pattern, like phone numbers, don't really need an algorithm to detect them. You can just handle it with a RegexEntityExtractor instead.

This is why it's common to have more than one type of entity extractor in the pipeline.

Now that we've given an overview of the different types of components in the NLU pipeline we can move on to explain how these components share information to each-other.

Interaction: Message Passing

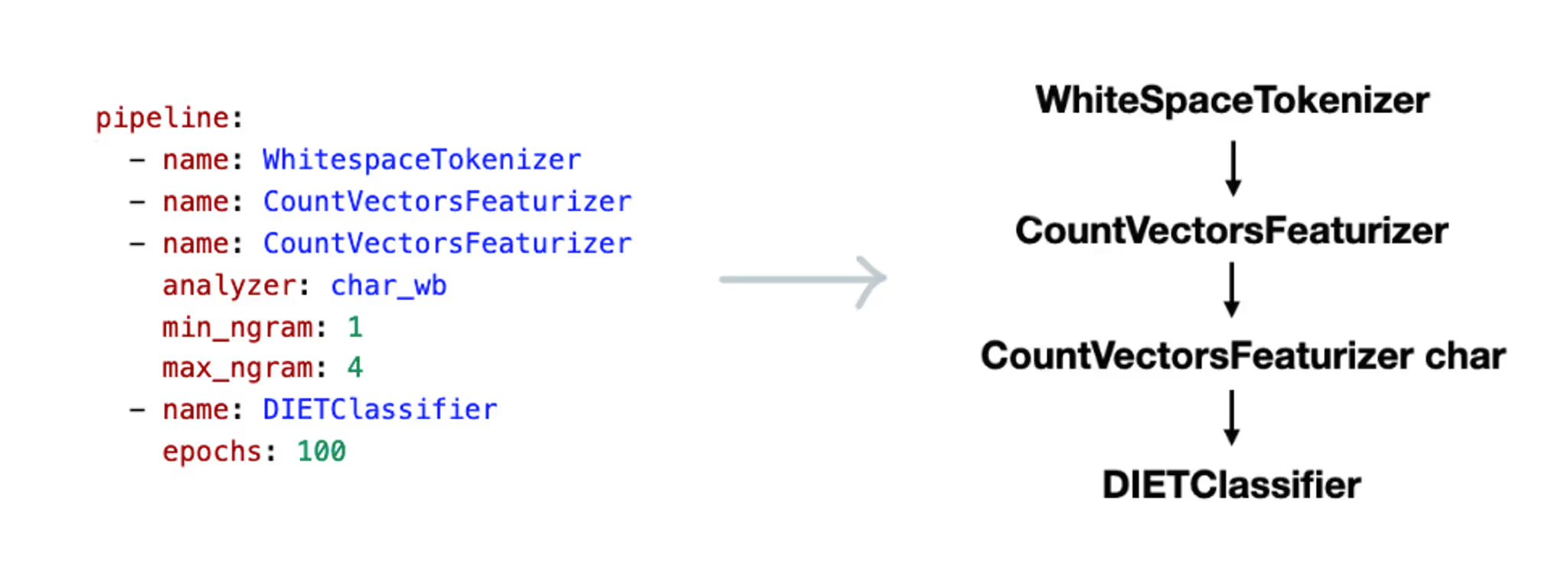

As you can imagine, the components in a Rasa pipeline depend on each other. So you might be wondering how they interact. To understand how this works, it helps to zoom in on an example `config.yml` file.

The NLU pipeline is a sequence of components. These components are trained and processed in the order they are listed in the pipeline. This means that a pipeline configuration can be thought of as a linear sequence of steps that the data needs to pass through.

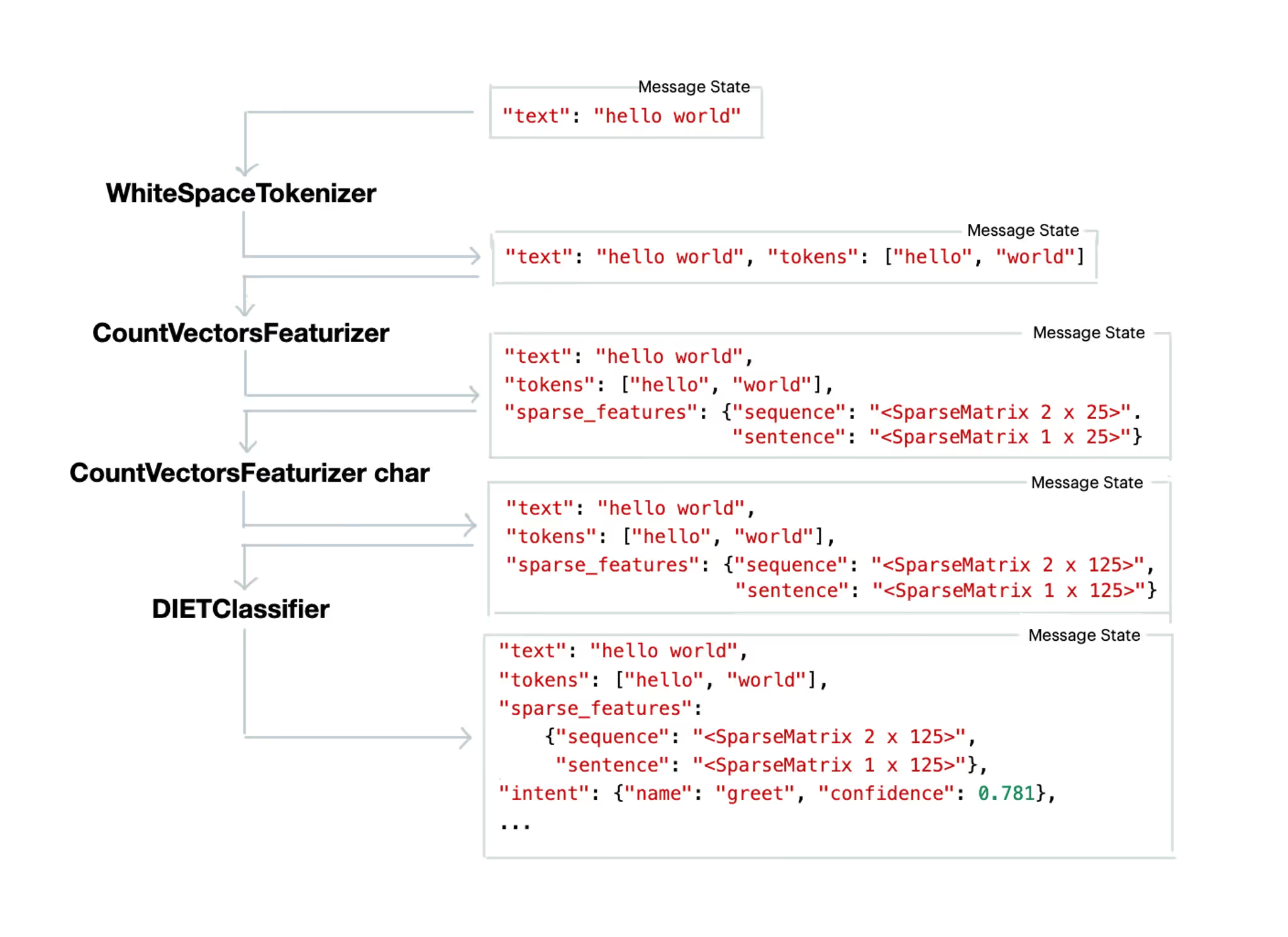

Whenever a user talks to the assistant, Rasa internally keeps track of the state of an utterance via a `Message` object. This object is processed by each step in the pipeline. The diagram below gives an overview of what happens when the Message is processed.

There are a few things to point out in this diagram.

- The message first starts out as a container with just the plain user utterance.

- After the message passes through the tokenizer it is split up into tokens. Note that we're representing the tokens as strings in the diagram while internally they are represented by a `Token` object.

- When the message passes through a CountVectorsFeaturizer you'll notice that sparse features are added. There's a distinction between features for the sequence and the entire sentence. Also, note that after passing through the second featurizer the size of the sparse features increases.

- The DIETClassifier will look for `sparse_features` and `dense_features` in the message in order to make a prediction. After it is done processing it will attach the intent predictions to the message object.

Every time a message passes through a pipeline step the message object will gain new information. That also means that you can keep adding steps to the pipeline if you want to add information to the message. That's also why you can attach extra entity extraction models.

As you can see, every step in the pipeline can add information to the message. That means that you can add multiple entity extraction steps and that can work side-by-side to add entities to the message.

Inspecting the Message-object yourself.

If you're interested in seeing the message state yourself, you can inspect the output of the model via the code below. Note that this code is meant to work in Rasa Open Source 2.x.

Pythoncopy

from rasa.cli.utils import get_validated_path

from rasa.model import get_model, get_model_subdirectories

from rasa.core.interpreter import RasaNLUInterpreter

from rasa.shared.nlu.training_data.message import Message

def load_interpreter(model_dir, model):

path_str = str(pathlib.Path(model_dir) / model)

model = get_validated_path(path_str, "model")

model_path = get_model(model)

_, nlu_model = get_model_subdirectories(model_path)

return RasaNLUInterpreter(nlu_model)

# Loads the model

mod = load_interpreter(model_dir, model)

# Parses new text

msg = Message({TEXT: text})

for p in interpreter.interpreter.pipeline:

p.process(msg)

print(msg.as_dict())

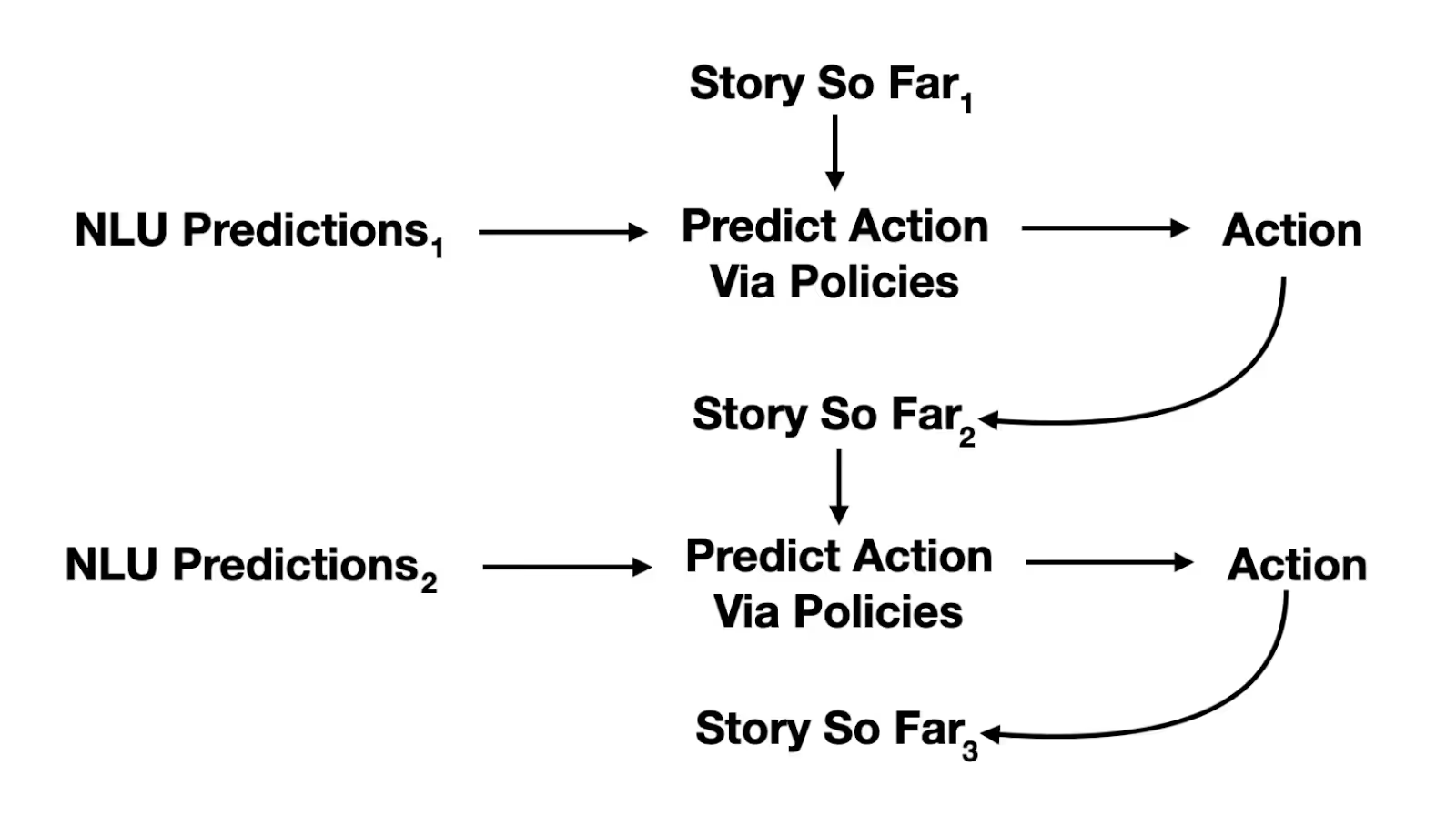

Predicting Actions

With the NLU pipeline, we detect intents and entities. But this pipeline doesn't predict the next action in the conversation. That's what the policy pipeline is for. Policies make use of the NLU predictions as well as the state of the conversation so far to predict what action to take next.

If you're interested in how these policies generate actions you might appreciate our blog post written on the topic here.

Conclusion

In this blog post, we've reviewed how components within a Rasa NLU pipeline interact with each other. It's good to understand how components interact in the pipeline because it will help you decide which components are relevant to your assistant.

It's also important to understand that you can completely customize the pipeline. You can remove components if you don't need them and you can even write your own components if you want to use your own tools. This can be especially relevant if you're working with a non-English language and you'd like to use custom language tools that you're familiar with. If you're interested in seeing examples of this we recommend checking out the rasa-nlu-examples repository. That repository has many tokenizers, featurizers and models that you're free to draw inspiration from for your own projects.

Happy Hacking!